
This free tool analyzes documents of arbitrary length, looking for information about a question or instruction provided by the user and putting together a summary output. It is a project that exemplifies prompt engineering, prompt chaining, and the power of modern language models.

Summarizing long texts or scanning them for pieces of information can be a tedious task, especially when you’re pressed for time. It is harder still when you are looking for a very specific piece of information in a huge document that might contain the answer in an obfuscated form or in a short sentence lost inside a large paragraph… or that might just not contain it at all!

What if you could automate the process of scanning a document to extract its relevant parts, summarize it, or answer highly specific questions about it? That’s exactly what my free web app called Summarizer Almighty, the result of this project, can do!

Try out Summarizer Almighty and treat yourself to a productivity boost by using the app for free through the link provided in the article. And learn about this interesting project and about concepts such as prompt engineering and prompt chaining, starting in the next paragraph.

Summarizer Almighty uses the GPT-3.5-turbo language model, which is the same model that powers ChatGPT, to analyze long documents and extract relevant information based on the user’s input, which can be, for example, a question or an instruction. In this article, we’ll take a closer look at how Summarizer Almighty works, which entails some prompt engineering and prompt chaining, and we’ll look at how it can be applied to different use cases.

Wonder what prompt engineering and prompt chaining are?

– Prompt chaining refers to the practice of breaking down a complex question or task into smaller, more manageable prompts that can be fed into a language model sequentially to generate a final output.

– Meanwhile, prompt engineering refers to the process of carefully crafting prompts to guide the language model towards generating the desired output.

Summarizer Almighty is built so that it can process very long texts, in principle without any length limit, contrary to tools like ChatGPT, which are designed to process all the text at once and hence have limits. Besides, I built into Summarizer Almighty some carefully engineered prompts that first verify whether each section of the input document contains information about the question or instruction provided by the user, and then create partial answers from the relevant sections. This way, we reduce the probability of getting wrong or made-up answers, which is another major drawback of tools like ChatGPT.

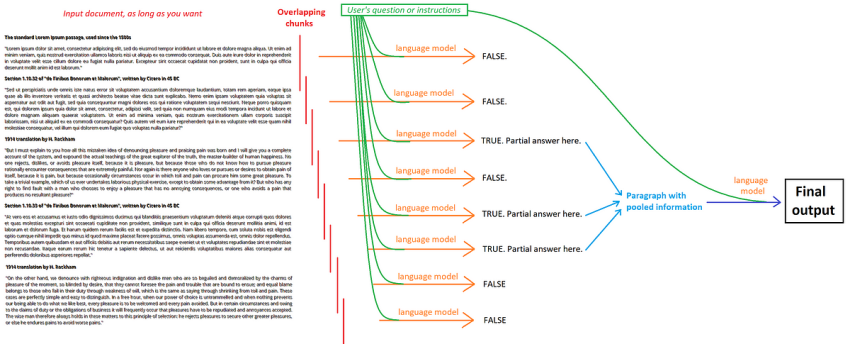

At the core of the code, a function takes the user’s input document and splits it into overlapping chunks, which are then analyzed and summarized separately. The analysis consists of first checking, through a call to GPT-3.5-turbo with a special prompt, whether each chunk of text contains information about the question asked or can be processed with the instructions provided. Partial answers are accumulated into a paragraph string that condenses all the outputs, and this paragraph is then processed via one final call to GPT-3.5-turbo to produce the final answer. The scheme in the accompanying figure overviews this algorithm.
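To make the chunking step more concrete, here is a minimal Python sketch of splitting a document into overlapping chunks. It is not the app's actual code: the function name `split_into_chunks`, the parameters `chunk_words` and `overlap_words`, and the choice of counting whitespace-separated words instead of model tokens are all assumptions made for illustration.

```python
from typing import List


def split_into_chunks(text: str, chunk_words: int = 2000,
                      overlap_words: int = 200) -> List[str]:
    """Split text into chunks of at most chunk_words words.

    Consecutive chunks share overlap_words words, so a sentence cut at a
    chunk boundary still appears whole in one of the two neighbors.
    Word counts are a rough stand-in for token counts (assumption).
    """
    if chunk_words <= 0 or not 0 <= overlap_words < chunk_words:
        raise ValueError("need chunk_words > 0 and 0 <= overlap_words < chunk_words")

    words = text.split()
    step = chunk_words - overlap_words  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_words]))
        if start + chunk_words >= len(words):
            break  # this chunk already reaches the end of the document
    return chunks


if __name__ == "__main__":
    demo = " ".join(str(i) for i in range(10))
    for chunk in split_into_chunks(demo, chunk_words=4, overlap_words=1):
        print(chunk)
```

The overlap trades a few repeated words per call for a lower risk of losing an answer that straddles two chunks.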

The GPT-3.5-turbo model is a powerful language model that has been trained on a massive amount of text data. By using this model, Summarizer Almighty can quickly scan long texts, “understanding” what they say, automatically connect related words, go through code, translate pieces written in different languages, etc., and thus efficiently extract relevant information with high confidence. In the calls that first check if the chunk of text contains information, the app sends the language model a prompt of this format:

Can you reply to “[question]” considering the following paragraph? If not, reply ‘FALSE’. If yes, reply ‘TRUE’ followed by the answer. Here’s the paragraph: “[chunk of text extracted from the document, optimized to around 3000 tokens]”
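As an illustration, the prompt format above could be assembled in Python like this. The names `CHECK_TEMPLATE` and `build_check_prompt` are hypothetical, and the template uses straight quotes where the article shows typographic ones; treat it as a sketch of the idea, not the app's exact string.

```python
# Template mirroring the prompt format quoted above (quote style is an assumption).
CHECK_TEMPLATE = (
    'Can you reply to "{question}" considering the following paragraph? '
    "If not, reply 'FALSE'. If yes, reply 'TRUE' followed by the answer. "
    'Here\'s the paragraph: "{chunk}"'
)


def build_check_prompt(question: str, chunk: str) -> str:
    """Fill the check prompt with the user's question and one chunk of text."""
    return CHECK_TEMPLATE.format(question=question, chunk=chunk)


if __name__ == "__main__":
    print(build_check_prompt("Who wrote the report?",
                             "The report was written by the audit team."))
```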

It turns out that GPT-3.5-turbo excels at this: in the hundreds of calls I have tried so far, the text completion always began with either TRUE or FALSE. Thus, I can safely rely on the language model for this first classification.

The text that follows each TRUE is extracted and appended to a paragraph of partial responses, which grows until all chunks of text have been processed.
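A possible way to handle the TRUE/FALSE replies and grow the paragraph of partial responses is sketched below. The helper names `parse_reply` and `accumulate`, the case-insensitive check, and the stripping of punctuation right after TRUE are assumptions for illustration, not a description of the app's code.

```python
from typing import Iterable, Optional


def parse_reply(reply: str) -> Optional[str]:
    """Return the answer that follows a leading TRUE, or None otherwise."""
    text = reply.strip()
    if not text.upper().startswith("TRUE"):
        return None  # FALSE, or anything unexpected, counts as "no information"
    answer = text[len("TRUE"):].lstrip(" \t\n.:,;-")
    return answer or None  # a bare TRUE with no answer adds nothing


def accumulate(replies: Iterable[str]) -> str:
    """Join the partial answers from all chunks into one paragraph."""
    partials = []
    for reply in replies:
        answer = parse_reply(reply)
        if answer is not None:
            partials.append(answer)
    return " ".join(partials)


if __name__ == "__main__":
    replies = ["FALSE", "TRUE. The meeting is on Monday.", "TRUE: It starts at 9."]
    print(accumulate(replies))
```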

Finally, one last call to the language model receives a single prompt that combines the set of partial answers and ends with the question or instruction provided by the user, from which the final answer is generated. Notice that, in any case, the app displays not just the final answer but also all the partial answers obtained along the way.
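Putting the chain together, the whole flow might look like the following Python sketch. The model call is passed in as a plain function `ask(prompt) -> str`, so the sketch makes no claim about how the app talks to the OpenAI API; `answer_from_chunks`, the layout of the final prompt, and the decision to skip the final call when no chunk is relevant are all assumptions.

```python
from typing import Callable, Iterable, List, Tuple


def answer_from_chunks(chunks: Iterable[str], question: str,
                       ask: Callable[[str], str]) -> Tuple[str, List[str]]:
    """Chain the per-chunk checks with one final call.

    Returns the final answer together with the partial answers, so that
    both can be displayed to the user.
    """
    partials: List[str] = []
    for chunk in chunks:
        # Step 1: ask whether this chunk can answer the question.
        prompt = (
            f'Can you reply to "{question}" considering the following paragraph? '
            "If not, reply 'FALSE'. If yes, reply 'TRUE' followed by the answer. "
            f'Here\'s the paragraph: "{chunk}"'
        )
        reply = ask(prompt).strip()
        # Step 2: keep only the text that follows a leading TRUE.
        if reply.upper().startswith("TRUE"):
            answer = reply[len("TRUE"):].lstrip(" \t\n.:,;-")
            if answer:
                partials.append(answer)

    if not partials:
        return "", partials  # assumption: nothing relevant, so no final call

    # Step 3: the final prompt holds the partial answers and ends with the question.
    final_prompt = " ".join(partials) + "\n\n" + question
    return ask(final_prompt), partials


if __name__ == "__main__":
    def fake_ask(prompt: str) -> str:
        # Stand-in for a real model call, used only to exercise the chain.
        if prompt.startswith("Can you reply"):
            return "TRUE It is blue." if "sky" in prompt else "FALSE"
        return "FINAL: " + prompt

    print(answer_from_chunks(["the sky text", "unrelated text"], "What color?", fake_ask))
```

Injecting `ask` keeps the chain testable without network access; a real implementation would replace `fake_ask` with a function that sends the prompt to the model.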

In Summarizer Almighty, all calls to GPT-3.5-turbo are executed as I described earlier:

Let me stress the importance of the prompt I engineered to focus the language model’s attention on specific parts of the text that are relevant to the user’s input. Without this, we’d risk getting made-up answers, incorrect information, etc.

Summarizer Almighty can be useful for a wide range of applications. Here are a few examples that come to mind, mainly based on my own use cases:

- Research: The tool can be used by researchers to quickly analyze long articles and research papers looking for details. Instead of reading through the entire text, one can use the app to extract key information and insights or simply to look for a very specific detail of the text. I am a researcher myself, and I’m using it a lot for this!

- News writing: Summarizer Almighty can be used by journalists to quickly summarize news articles. This can be particularly useful for breaking news stories where time is of the essence. In my case, I’m using this to condense articles into their key components, from which I can then write simpler forms and put together science communication articles more efficiently.

- Education: A special case of the above, but focused on explaining concepts. I think such a tool could help students save a lot of time and focus on the most important, new, or specific information in a text; maybe not so much at lower levels, but certainly in higher education.

- Business: I’m not into this, but I guess a tool like this could be used by businesses to analyze reports and documents efficiently, to make decisions informed by details that might not be easy to find.

- Other situations where time is scarce but details are important!

Here are some concrete examples:

Example 1: Scanning a long text, here a podcast, looking for some very specific information.

Example 2: Summarizing a long text and then asking a specific question about it.

Oh, and by the way, if you want to know more about moleculARweb mentioned in that document, check out this blog post I wrote:

Example 3: Simplifying a scientific text from over 5400 words to a short paragraph in lay terms, in less than a minute.

My web app is free to use and can be accessed from any device with an internet connection just by following this link in your web browser. If you like the app or project or find it useful, you’re invited to tip me here.

Note that to use the app you need an API key from OpenAI, which you can get here. Usage will cost some cents for every thousand words, as the GPT-3.5-turbo model is very cheap.

Whether you’re a researcher, journalist, student, businessperson, or simply a person with too much to read in too little time, I think Summarizer Almighty can help you get what you’re looking for quickly and easily. Indeed, this project was born from the request of a client who wanted to quickly summarize and explore the contents of long podcasts without having to listen to or read them in full (one of the examples I showed above)!
