The growth of artificial intelligence (AI) in recent years is closely linked to how much better human lives have become thanks to AI's ability to perform tasks faster and with less effort. Today, there are hardly any fields that do not make use of AI. For instance, AI is everywhere, from AI agents in voice assistants such as Amazon Echo and Google Home to machine learning algorithms that predict protein structure. So, it is reasonable to believe that a human working with an AI system will produce decisions that are superior to those of either acting alone. Is that actually the case, though?

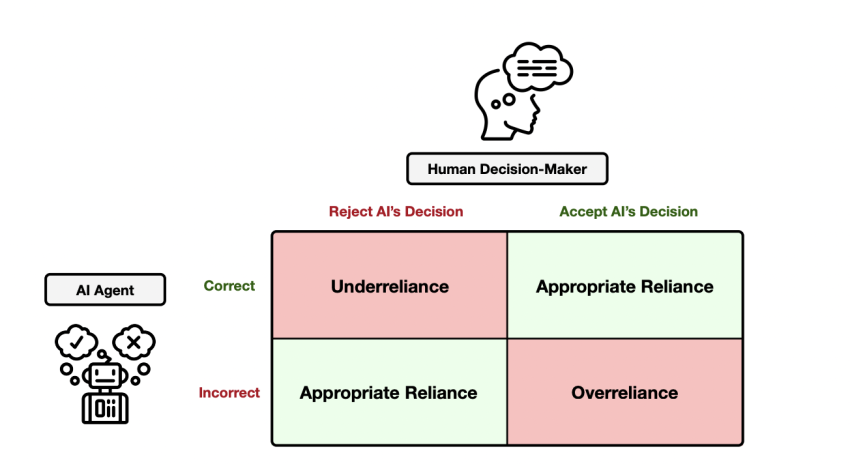

Previous studies have shown that this is not always the case. In many situations, AI does not produce the right answer, and these systems must be retrained to correct biases or other issues. However, a related phenomenon that threatens the effectiveness of human-AI decision-making teams is AI overreliance, in which people are influenced by AI and often accept incorrect decisions without verifying whether the AI is correct. This can be quite harmful in critical tasks such as identifying bank fraud and delivering medical diagnoses. Researchers have also shown that explainable AI, in which an AI model explains at each step why it made a certain decision instead of just providing predictions, does not reduce this problem of AI overreliance. Some researchers have even claimed that cognitive biases or uncalibrated trust are the root cause of overreliance, attributing it to the inevitable nature of human cognition.

However, these findings do not fully settle whether AI explanations can reduce overreliance. To explore this further, a team of researchers at Stanford University's Human-Centered Artificial Intelligence (HAI) lab argued that people strategically choose whether or not to engage with an AI explanation, and demonstrated that there are situations in which AI explanations can help people become less overreliant. According to their paper, people are less likely to overrely on AI predictions when the accompanying explanations are easier to understand than the task at hand and when there is a larger benefit to engaging with them (which can take the form of a financial reward). They also showed that overreliance on AI can be significantly reduced when the focus is on engaging people with the explanation rather than merely providing it.

The team formalized this strategic decision in a cost-benefit framework to put their theory to the test. In this framework, the costs and benefits of actively engaging with the task are compared against the costs and benefits of relying on the AI. They asked online crowdworkers to work with an AI to solve a maze challenge at three distinct levels of complexity. The AI model offered an answer along with either no explanation or one of several levels of explanation, ranging from a single instruction for the next step to turn-by-turn directions for exiting the entire maze. The results of the trials showed that costs, such as task difficulty and explanation difficulty, and benefits, such as monetary compensation, significantly influenced overreliance. Overreliance was not reduced at all for complex tasks in which the AI model provided step-by-step directions, because deciphering the generated explanations was just as hard as solving the maze itself. Additionally, most explanations had no impact on overreliance when it was easy to escape the maze on one's own.
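The cost-benefit idea above can be illustrated with a small sketch. This is not the researchers' model: the `Strategy` class, the three strategy names, the additive `benefit - cost` utility, and all numeric values are assumptions chosen for illustration. It also assumes, for simplicity, that a person who verifies the explanation or solves the task alone always ends up with the right answer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    """One way a person could approach the task (hypothetical model)."""
    name: str
    benefit: float  # expected reward for this strategy
    cost: float     # cognitive effort, in the same arbitrary units


def utility(strategy: Strategy) -> float:
    # Assumed additive utility: expected benefit minus effort.
    return strategy.benefit - strategy.cost


def build_strategies(reward, ai_accuracy, task_cost, explanation_cost):
    # Blindly accepting the AI costs no effort but only pays off when the
    # AI happens to be right. The other two strategies are assumed to
    # always reach the correct answer, at the price of some effort.
    return [
        Strategy("rely on AI without checking", reward * ai_accuracy, 0.0),
        Strategy("verify via explanation", reward, explanation_cost),
        Strategy("solve task alone", reward, task_cost),
    ]


def choose(strategies: list[Strategy]) -> Strategy:
    # The person is modeled as picking the highest-utility strategy.
    return max(strategies, key=utility)


# Hard task, easy explanation: checking the explanation is worthwhile.
hard_easy = choose(build_strategies(10, 0.8, task_cost=6, explanation_cost=1))
# Hard task, equally hard explanation: blind reliance wins (overreliance).
hard_hard = choose(build_strategies(10, 0.8, task_cost=6, explanation_cost=6))
# Easy task: the person just solves it, so the explanation barely matters.
easy_task = choose(build_strategies(10, 0.8, task_cost=0.5, explanation_cost=1))
# Larger reward: the same hard explanation now becomes worth checking.
high_reward = choose(build_strategies(40, 0.8, task_cost=7, explanation_cost=6))

for label, picked in [("hard/easy", hard_easy), ("hard/hard", hard_hard),
                      ("easy task", easy_task), ("high reward", high_reward)]:
    print(f"{label}: {picked.name}")
```

Under these made-up numbers, the sketch reproduces the qualitative pattern reported in the trials: explanations reduce blind reliance only when they are cheaper to check than the task is to solve, and raising the reward makes engagement more attractive.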

The team concluded that explanations can help prevent overreliance if the task at hand is hard and the associated explanations are clear. But when the task and the explanations are both hard or both easy, the explanations have little effect on overreliance. Explanations do not matter much when tasks are easy, because people can complete the task themselves just as readily as they could check an explanation. When tasks are complex, people have two options: complete the task manually or examine the generated AI explanations, which are often just as complex. The main reason is that few of the explainability tools available to AI researchers require much less effort to verify than doing the task manually. So, it is not surprising that people tend to trust the AI's judgment without questioning it or seeking an explanation.

As an additional experiment, the researchers also introduced financial benefit into the equation. They offered crowdworkers the option of working independently through mazes of varying difficulty for a sum of money, or of accepting less money in exchange for help from an AI, either with no explanation or with detailed turn-by-turn directions. The results showed that workers value AI assistance more when the task is hard and prefer a simple explanation to a complex one. It was also found that overreliance decreases as the long-term benefit of using AI increases (in this example, the financial reward).

The Stanford researchers have high hopes that their finding will offer some comfort to academics who have been puzzled by the fact that explanations don't reduce overreliance. They also hope their work will motivate explainable AI researchers by giving them a compelling argument for improving and simplifying AI explanations.

Check out the Paper and Stanford Article. All credit for this research goes to the researchers on this project.

Khushboo Gupta is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology (IIT), Goa. She is passionate about the fields of Machine Learning, Natural Language Processing and Web Development. She enjoys learning more about the technical field by participating in several challenges.
