
AMD is coming for Nvidia’s AI crown in a big way with the launch of its new Instinct processor, which it claims can do the work of multiple GPUs.

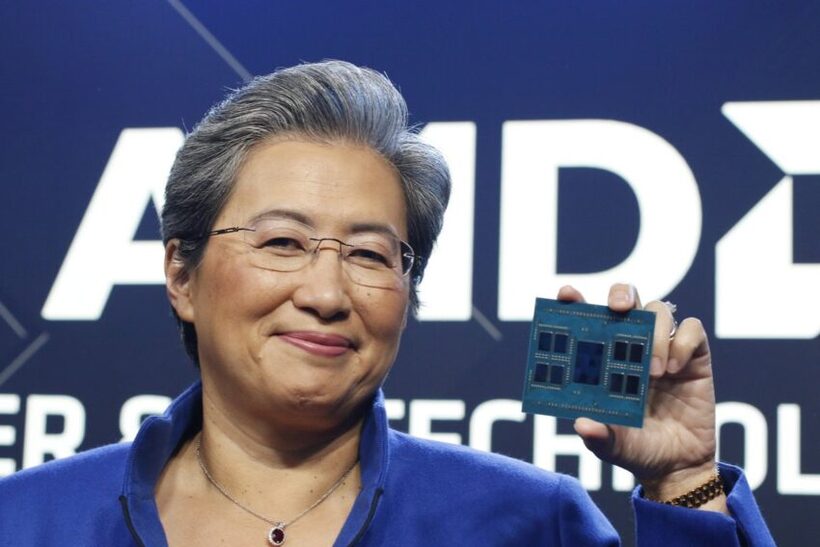

CEO Lisa Su called the Instinct MI300X “the most complex thing we’ve ever built.” She held up the chip, which is about the size of a drink coaster, at an event on Tuesday in San Francisco.

Weighing in at 146 billion transistors, the MI300X comes with up to 192GB of high-bandwidth HBM3 memory shared by both the CPU and GPU. It is built from a total of 13 chiplets. The chip also has a memory bandwidth of 5.2 TB/s, which AMD says is 60% faster than Nvidia’s H100.

The chip combines Zen CPU cores with AMD’s next-generation CDNA 3 GPU architecture. The huge amount of memory is the real selling point, according to Su.

“If you look at the industry today, you often see that, first of all, the model sizes are getting much larger. And you actually need multiple GPUs to run the latest large language models,” she said. “With MI300X, you can reduce the number of GPUs for this, and as model sizes continue growing, this will become even more important. So with more memory, more memory bandwidth, and fewer GPUs needed.”
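Su’s argument comes down to simple capacity arithmetic: the more memory each GPU holds, the fewer GPUs it takes to fit a model. The back-of-the-envelope sketch below assumes 16-bit weights (2 bytes per parameter) and counts weights only, ignoring activations, KV cache, and software overhead. The 70-billion-parameter model and the 80GB comparison card are hypothetical examples, not figures from AMD.

```python
import math


def gpus_needed(params_billions: float, bytes_per_param: int, gpu_memory_gb: float) -> int:
    """Minimum number of GPUs whose combined memory can hold the model weights alone.

    Assumption: weights only; activations, KV cache, and framework overhead are ignored,
    so a real deployment would need at least this many GPUs, often more.
    """
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    weights_gb = params_billions * bytes_per_param
    return math.ceil(weights_gb / gpu_memory_gb)


# Hypothetical 70B-parameter model in 16-bit precision on an 80GB card vs. a 192GB MI300X
for memory_gb in (80, 192):
    print(memory_gb, gpus_needed(70, 2, memory_gb))
```

With these assumptions, the 140GB of weights needs two 80GB cards but fits on a single 192GB MI300X.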

AMD says the design of the MI300X makes it eight times more powerful than the current MI250X used in Frontier (the world’s fastest supercomputer) and five times more power efficient. It will be used in the two-plus-exaFLOP El Capitan system that will be built next year at Lawrence Livermore National Laboratory.

As part of the announcement, Su also unveiled the AMD Instinct Platform, a server reference design based on specifications from the Open Compute Project (OCP) that uses eight MI300X GPUs for generative AI training and inference workloads.

This means enterprises and hyperscalers can use the Instinct Platform to put MI300X GPUs in existing OCP server racks.

“We’re really accelerating customers’ time to market and reducing overall development costs, while making it really easy to deploy the MI300X into their existing AI rack and server infrastructure,” Su said.

New cloud CPU

In other news, AMD talked about its fourth-gen EPYC 97X4 processor, code-named Bergamo. This processor is designed specifically for cloud environments: it has many cores on which virtual machines can run. Bergamo comes with 128 cores with simultaneous multithreading (two threads per core), so a dual-socket system can have up to 512 virtual CPUs.
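The 512 figure follows directly from multiplying cores, threads per core, and sockets. The small sketch below makes that arithmetic explicit, assuming one virtual CPU is exposed per hardware thread; the function name is illustrative only.

```python
def max_vcpus(cores_per_socket: int, threads_per_core: int, sockets: int) -> int:
    """Hardware threads a hypervisor can expose as virtual CPUs (one vCPU per thread assumed)."""
    return cores_per_socket * threads_per_core * sockets


# Bergamo figures from the article: 128 cores per socket, 2 threads per core, dual-socket server
print(max_vcpus(128, 2, 2))  # 512
```

With multithreading disabled (one thread per core), the same dual-socket server would expose 256 vCPUs.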

Su talked about how cloud-native workloads are “born in the cloud.” They are designed to take full advantage of new cloud computing frameworks, and they essentially run as microservices. The design of these processors differs from general-purpose computing: Bergamo cores are smaller and very throughput-oriented, hence the many-core design.

“Bergamo leverages all of the platform infrastructure that we already developed for Genoa. And it supports the same next-gen memory and the same IO capabilities. But it allows us with this design point to expand to 128 cores per socket for leadership performance and power efficiency in the cloud,” said Su.

Both the MI300X and Bergamo will begin sampling in the third quarter.

Copyright © 2023 IDG Communications, Inc.
