[ad_1]

Machine finding out (ML) provides huge potential, from diagnosing cancer to engineering harmless self-driving automobiles to amplifying human efficiency. To recognize this potential, however, companies will need ML methods to be responsible with ML answer improvement that is predictable and tractable. The essential to both equally is a deeper knowledge of ML details — how to engineer training datasets that develop high excellent versions and examination datasets that provide precise indicators of how near we are to resolving the focus on problem.

The method of producing large good quality datasets is intricate and error-prone, from the first range and cleansing of raw facts, to labeling the details and splitting it into teaching and exam sets. Some specialists feel that the greater part of the exertion in creating an ML technique is really the sourcing and preparing of information. Every move can introduce challenges and biases. Even a lot of of the regular datasets we use now have been proven to have mislabeled info that can destabilize founded ML benchmarks. Even with the fundamental value of knowledge to ML, it’s only now starting to obtain the same amount of interest that products and understanding algorithms have been having fun with for the past decade.

To this aim, we are introducing DataPerf, a set of new information-centric ML worries to advance the state-of-the-art in facts assortment, planning, and acquisition technologies, developed and designed through a wide collaboration across marketplace and academia. The preliminary version of DataPerf is composed of 4 challenges focused on 3 popular facts-centric duties throughout three software domains eyesight, speech and all-natural language processing (NLP). In this blogpost, we outline dataset progress bottlenecks confronting scientists and go over the part of benchmarks and leaderboards in incentivizing scientists to handle these problems. We invite innovators in academia and market who search for to measure and validate breakthroughs in facts-centric ML to demonstrate the power of their algorithms and techniques to generate and enhance datasets via these benchmarks.

Info is the new bottleneck for ML

Knowledge is the new code: it is the education details that establishes the maximum doable excellent of an ML alternative. The model only determines the diploma to which that optimum high-quality is realized in a perception the design is a lossy compiler for the knowledge. Although large-excellent teaching datasets are crucial to ongoing advancement in the field of ML, much of the knowledge on which the subject depends these days is just about a ten years outdated (e.g., ImageNet or LibriSpeech) or scraped from the world wide web with extremely limited filtering of articles (e.g., LAION or The Pile).

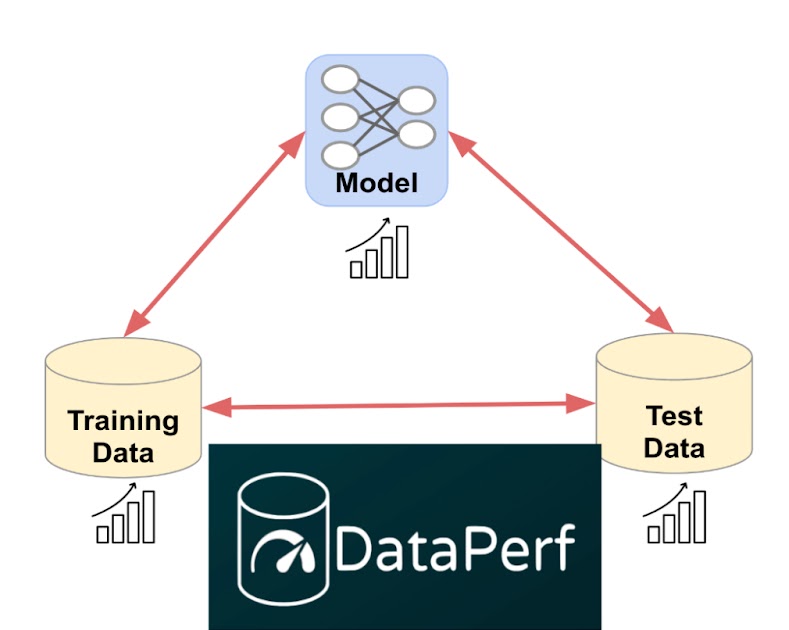

Even with the relevance of facts, ML exploration to date has been dominated by a emphasis on versions. Right before contemporary deep neural networks (DNNs), there had been no ML styles ample to match human actions for many uncomplicated tasks. This starting issue led to a design-centric paradigm in which (1) the coaching dataset and exam dataset were “frozen” artifacts and the target was to build a much better model, and (2) the examination dataset was selected randomly from the very same pool of details as the education set for statistical reasons. Sad to say, freezing the datasets disregarded the capacity to make improvements to education accuracy and performance with greater details, and utilizing check sets drawn from the exact same pool as training facts conflated fitting that data very well with in fact resolving the underlying problem.

For the reason that we are now creating and deploying ML remedies for significantly advanced tasks, we require to engineer exam sets that fully capture true globe complications and schooling sets that, in combination with superior types, deliver effective alternatives. We require to change from today’s model-centric paradigm to a information-centric paradigm in which we realize that for the vast majority of ML builders, creating significant good quality coaching and take a look at facts will be a bottleneck.

|

| Shifting from today’s design-centric paradigm to a knowledge-centric paradigm enabled by top quality datasets and facts-centric algorithms like those measured in DataPerf. |

Enabling ML builders to generate far better instruction and check datasets will involve a deeper understanding of ML knowledge good quality and the improvement of algorithms, tools, and methodologies for optimizing it. We can get started by recognizing popular problems in dataset development and creating functionality metrics for algorithms that deal with people challenges. For instance:

- Info choice: Normally, we have a much larger pool of out there facts than we can label or practice on successfully. How do we decide on the most critical information for schooling our versions?

- Data cleaning: Human labelers in some cases make problems. ML builders just cannot find the money for to have industry experts examine and right all labels. How can we pick the most probably-to-be-mislabeled data for correction?

We can also create incentives that reward good dataset engineering. We foresee that higher high-quality instruction information, which has been carefully chosen and labeled, will develop into a important solution in a lot of industries but presently lack a way to evaluate the relative value of distinctive datasets with out really instruction on the datasets in dilemma. How do we fix this trouble and empower top quality-driven “data acquisition”?

DataPerf: The to start with leaderboard for facts

We feel good benchmarks and leaderboards can push swift development in data-centric technological innovation. ML benchmarks in academia have been vital to stimulating progress in the discipline. Look at the adhering to graph which shows development on common ML benchmarks (MNIST, ImageNet, SQuAD, GLUE, Switchboard) above time:

|

| Performance above time for common benchmarks, normalized with original general performance at minus 1 and human effectiveness at zero. (Supply: Douwe, et al. 2021 made use of with authorization.) |

On the web leaderboards supply official validation of benchmark effects and catalyze communities intent on optimizing all those benchmarks. For instance, Kaggle has above 10 million registered end users. The MLPerf official benchmark success have aided generate an around 16x improvement in education general performance on key benchmarks.

DataPerf is the to start with neighborhood and platform to create leaderboards for data benchmarks, and we hope to have an analogous affect on investigate and progress for knowledge-centric ML. The preliminary version of DataPerf consists of leaderboards for four worries concentrated on a few info-centric duties (info assortment, cleaning, and acquisition) throughout 3 application domains (vision, speech and NLP):

- Coaching facts collection (Eyesight): Design and style a details assortment strategy that chooses the greatest schooling established from a large applicant pool of weakly labeled schooling images.

- Coaching knowledge choice (Speech): Design and style a facts selection technique that chooses the ideal schooling established from a large applicant pool of automatically extracted clips of spoken text.

- Coaching facts cleaning (Vision): Structure a info cleansing approach that chooses samples to relabel from a “noisy” schooling set in which some of the labels are incorrect.

- Teaching dataset analysis (NLP): Good quality datasets can be expensive to assemble, and are getting important commodities. Design and style a information acquisition system that chooses which instruction dataset to “buy” primarily based on confined information about the knowledge.

For each obstacle, the DataPerf web site delivers layout paperwork that define the problem, test product(s), good quality goal, guidelines and suggestions on how to run the code and submit. The stay leaderboards are hosted on the Dynabench system, which also provides an online evaluation framework and submission tracker. Dynabench is an open up-source venture, hosted by the MLCommons Association, focused on enabling knowledge-centric leaderboards for both of those schooling and exam facts and knowledge-centric algorithms.

How to get associated

We are part of a local community of ML researchers, information researchers and engineers who try to increase info excellent. We invite innovators in academia and market to measure and validate details-centric algorithms and techniques to develop and strengthen datasets through the DataPerf benchmarks. The deadline for the initial round of challenges is May well 26th, 2023.

Acknowledgements

The DataPerf benchmarks had been produced around the final 12 months by engineers and scientists from: Coactive.ai, Eidgenössische Technische Hochschule (ETH) Zurich, Google, Harvard College, Meta, ML Commons, Stanford University. In addition, this would not have been possible devoid of the guidance of DataPerf operating team associates from Carnegie Mellon College, Electronic Prism Advisors, Factored, Hugging Facial area, Institute for Human and Machine Cognition, Landing.ai, San Diego Supercomputing Center, Thomson Reuters Lab, and TU Eindhoven.

[ad_2]

Source website link