
Software isn’t created in one dramatic step. It improves bit by bit, one little step at a time (editing, running unit tests, fixing build errors, addressing code reviews, editing some more, appeasing linters, and fixing more errors) until finally it becomes good enough to merge into a code repository. Software engineering isn’t an isolated process, but a dialogue among human developers, code reviewers, bug reporters, software architects and tools, such as compilers, unit tests, linters and static analyzers.

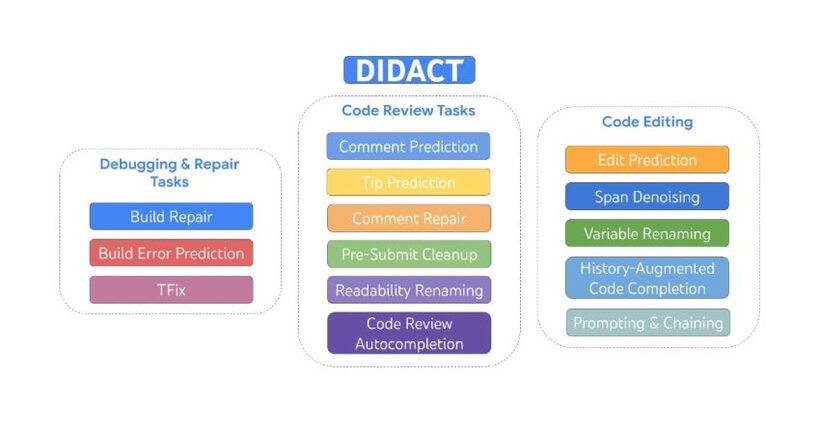

Today we describe DIDACT (Dynamic Integrated Developer ACTivity), which is a methodology for training large machine learning (ML) models for software development. The novelty of DIDACT is that it uses the process of software development as the source of training data for the model, rather than just the polished end state of that process, the finished code. By exposing the model to the contexts that developers see as they work, paired with the actions they take in response, the model learns about the dynamics of software development and is more aligned with how developers spend their time. We leverage instrumentation of Google’s software development to scale up the quantity and diversity of developer-activity data beyond previous works. Results are extremely promising along two dimensions: usefulness to professional software developers, and as a potential basis for imbuing ML models with general software development skills.

Figure: DIDACT is a multi-task model trained on development activities that include editing, debugging, repair, and code review.

We built and deployed internally three DIDACT tools, Comment Resolution (which we recently announced), Build Repair, and Tip Prediction, each integrated at different stages of the development workflow. All three of these tools received enthusiastic feedback from thousands of internal developers. We see this as the ultimate test of usefulness: do professional developers, who are often experts on the code base and who have carefully honed workflows, leverage the tools to improve their productivity?

Perhaps most excitingly, we demonstrate how DIDACT is a first step towards a general-purpose developer-assistance agent. We show that the trained model can be used in a variety of surprising ways, via prompting with prefixes of developer activities, and by chaining together multiple predictions to roll out longer activity trajectories. We believe DIDACT paves a promising path towards developing agents that can generally assist across the software development process.

A treasure trove of data about the software engineering process

Google’s software engineering toolchains store every operation related to code as a log of interactions among tools and developers, and have done so for decades. In principle, one could use this record to replay in detail the key episodes in the “software engineering video” of how Google’s codebase came to be, step by step: one code edit, compilation, comment, variable rename, etc., at a time.
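To make the idea of replaying a log concrete, here is a minimal sketch. It assumes a toy log in which every entry is a text replacement at character offsets; the `EditEvent` type and the `replay` function are hypothetical names invented for this illustration and do not reflect Google's actual log format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EditEvent:
    """One hypothetical logged edit: replace text[start:end] with new_text."""
    start: int
    end: int
    new_text: str


def replay(initial: str, events: list[EditEvent]) -> list[str]:
    """Return every intermediate file state, beginning with the initial one."""
    states = [initial]
    for event in events:
        current = states[-1]
        if not 0 <= event.start <= event.end <= len(current):
            raise ValueError(f"edit out of range: {event}")
        states.append(current[:event.start] + event.new_text + current[event.end:])
    return states


# A three-step toy "video": write a draft, replace a literal, add an import.
draft = "def area(r):\n    return 3.14 * r * r\n"
literal_at = draft.index("3.14")
log = [
    EditEvent(0, 0, draft),
    EditEvent(literal_at, literal_at + 4, "math.pi"),
    EditEvent(0, 0, "import math\n\n"),
]
frames = replay("", log)
print(frames[-1])
```

Each element of `frames` is one frame of the toy video, so consecutive pairs of frames can be read as (context, next action) examples.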

Google code lives in a monorepo, a single repository of code for all tools and systems. A software developer typically experiments with code changes in a local copy-on-write workspace managed by a system called Clients in the Cloud (CitC). When the developer is ready to package a set of code changes together for a specific purpose (e.g., fixing a bug), they create a changelist (CL) in Critique, Google’s code-review system. As with other types of code-review systems, the developer engages in a dialog with a peer reviewer about functionality and style. The developer edits their CL to address reviewer comments as the dialog progresses. Eventually, the reviewer declares “LGTM!” (“looks good to me”), and the CL is merged into the code repository.

Of course, in addition to a dialog with the code reviewer, the developer also maintains a “dialog” of sorts with a plethora of other software engineering tools, such as the compiler, the testing framework, linters, static analyzers, fuzzers, etc.

A multi-task model for software engineering

DIDACT utilizes interactions among engineers and tools to power ML models that assist Google developers, by suggesting or enhancing actions developers take, in context, while pursuing their software-engineering tasks. To do that, we have defined a number of tasks about individual developer activities: repairing a broken build, predicting a code-review comment, addressing a code-review comment, renaming a variable, editing a file, etc. We use a common formalism for each activity: it takes some State (a code file), some Intent (annotations specific to the activity, such as code-review comments or compiler errors), and produces an Action (the operation taken to address the task). This Action is like a mini programming language, and can be extended for newly added activities. It covers things like editing, adding comments, renaming variables, marking up code with errors, etc. We call this language DevScript.
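The State, Intent and Action framing can be sketched as a small data structure. This is an illustration only: the field layout, the bracketed section tags, the task name and the textual form of the action are all assumptions made for this example, since the post does not show DevScript syntax or the real prompt format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One hypothetical training example in a State-Intent-Action shape."""
    task: str    # invented task name, e.g. "address_review_comment"
    state: str   # the code the developer is looking at
    intent: str  # annotation such as a review comment or compiler error
    action: str  # what the developer did, in an invented textual form


def to_prompt_and_target(ex: Example) -> tuple[str, str]:
    """Serialize an example into (model input, expected model output)."""
    prompt = (
        f"[TASK] {ex.task}\n"
        f"[STATE]\n{ex.state}\n"
        f"[INTENT] {ex.intent}\n"
        f"[ACTION]\n"
    )
    return prompt, ex.action


example = Example(
    task="address_review_comment",
    state="def f(l):\n    return len(l)",
    intent="Please give the parameter a descriptive name.",
    action="rename l -> items",  # invented notation, not real DevScript
)
prompt, target = to_prompt_and_target(example)
print(prompt + target)
```

Because every activity shares this shape, adding a task in such a scheme would mean adding a task name and new action forms rather than a new model interface.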

Figure: The DIDACT model is prompted with a task, code snippets, and annotations related to that task, and produces development actions, e.g., edits or comments.

This state-intent-action formalism enables us to capture many different tasks in a general way. What’s more, DevScript is a concise way to express complex actions, without the need to output the whole state (the original code) as it would be after the action takes place; this makes the model more efficient and more interpretable. For example, a rename might touch a file in dozens of places, but a model can predict a single rename action.
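The rename case shows why a compact action can be cheaper to predict than the full post-edit file. The sketch below assumes a purely textual, whole-word rename; the `Rename` type and `apply_rename` function are hypothetical, and a real tool would resolve symbols rather than match text (this version would also rewrite matches inside strings and comments).

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rename:
    """Hypothetical compact action: rename identifier `old` to `new`."""
    old: str
    new: str


def apply_rename(state: str, action: Rename) -> str:
    """Apply a whole-word textual rename to the state."""
    pattern = r"\b" + re.escape(action.old) + r"\b"
    # A function replacement avoids backslash handling in the new name.
    return re.sub(pattern, lambda _match: action.new, state)


state = "def mean(xs):\n    total = sum(xs)\n    return total / len(xs)\n"
action = Rename(old="xs", new="values")
new_state = apply_rename(state, action)
print(new_state)
```

The single `Rename` value stays the same size however many sites it touches, whereas emitting `new_state` directly grows with the file.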

An ML peer programmer

DIDACT does a good job on individual assistive tasks. For example, DIDACT can perform code clean-up after functionality is mostly done. It looks at the code along with some final comments by the code reviewer (marked with “human” in the animation), and predicts edits to address those comments (rendered as a diff).

The multimodal nature of DIDACT also gives rise to some surprising capabilities, reminiscent of behaviors emerging with scale. One such capability is history augmentation, which can be enabled via prompting. Knowing what the developer did recently enables the model to make a better guess about what the developer should do next.

Figure: An illustration of history-augmented code completion in action.

A powerful task exemplifying this capability is history-augmented code completion. In the figure, the developer adds a new function parameter (1), and moves the cursor into the documentation (2). Conditioned on the history of developer edits and the cursor position, the model completes the line (3) by correctly predicting the docstring entry for the new parameter.
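One way to picture history augmentation via prompting is a prompt that places recent developer actions ahead of the current code and cursor position. The following is a rough sketch under stated assumptions: `build_prompt`, the bracketed tags, the `<CURSOR>` marker and the free-text history entries are all invented for illustration and are not the format DIDACT uses.

```python
def build_prompt(history: list[str], state: str, cursor: int,
                 max_history: int = 5) -> str:
    """Assemble a hypothetical history-augmented completion prompt."""
    if not 0 <= cursor <= len(state):
        raise ValueError("cursor is outside the state")
    # Keep only the most recent actions; a plain slice with 0 would keep all.
    recent = history[-max_history:] if max_history > 0 else []
    marked = state[:cursor] + "<CURSOR>" + state[cursor:]
    return "\n".join(["[HISTORY]", *recent, "[STATE]", marked, "[COMPLETE]"])


state = (
    "def scale(x, factor):\n"
    '    """Scale x.\n'
    "\n"
    "    Args:\n"
    "      x: input value.\n"
    "      \n"
    '    """\n'
    "    return x * factor\n"
)
history = [
    "insert ', factor' into the signature of scale",
    "move cursor into the Args section of the docstring",
]
cursor = state.index("      \n") + 6  # end of the empty docstring line
print(build_prompt(history, state, cursor))
```

With the `[HISTORY]` section present, the prompt carries the fact that `factor` was just added, which is the signal that makes a docstring entry for it the likely completion.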

Figure: An illustration of edit prediction, over multiple chained iterations.

In an even more powerful history-augmented task, edit prediction, the model can choose where to edit next in a fashion that is historically consistent. If the developer deletes a function parameter (1), the model can use history to correctly predict an update to the docstring (2) that removes the deleted parameter (without the human developer manually placing the cursor there) and to update a statement in the function (3) in a syntactically (and, arguably, semantically) correct way. With history, the model can unambiguously decide how to continue the “editing video” correctly. Without history, the model wouldn’t know whether the missing function parameter is intentional (because the developer is in the process of a longer edit to remove it) or accidental (in which case the model should re-add it to fix the problem).

The model can go even further. For example, we started with a blank file and asked the model to successively predict what edits would come next until it had written a full code file. The astonishing part is that the model developed code in a step-by-step way that would seem natural to a developer: it started by first creating a fully working skeleton with imports, flags, and a basic main function. It then incrementally added new functionality, like reading from a file and writing results, and added functionality to filter out some lines based on a user-provided regular expression, which required changes across the file, like adding new flags.
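Chaining predictions from a blank file can be sketched as a simple loop: ask for the next edit, apply it, append it to the history, and repeat. Everything here is an assumption for illustration. The `rollout` function and the `(start, end, text)` edit form are invented, and `scripted_predictor` is a stand-in that replays a fixed script where a trained model would be called.

```python
from typing import Callable, Optional

Edit = tuple[int, int, str]  # (start, end, replacement text), hypothetical form
Predictor = Callable[[str, list[Edit]], Optional[Edit]]


def rollout(predict: Predictor, state: str = "",
            max_steps: int = 20) -> tuple[str, list[Edit]]:
    """Repeatedly predict and apply the next edit until the predictor stops."""
    history: list[Edit] = []
    for _ in range(max_steps):
        edit = predict(state, history)
        if edit is None:  # the predictor signals that the file is finished
            break
        start, end, text = edit
        state = state[:start] + text + state[end:]
        history.append(edit)
    return state, history


def scripted_predictor(state: str, history: list[Edit]) -> Optional[Edit]:
    """Stand-in for a model: skeleton first, then fill in the body."""
    step = len(history)
    if step == 0:
        return (0, 0, "import sys\n\n")
    if step == 1:
        return (len(state), len(state), "def main():\n    pass\n")
    if step == 2:
        at = state.index("pass")
        return (at, at + 4, "print(sys.argv[1:])")
    return None


final_state, edits = rollout(scripted_predictor)
print(final_state)
```

The `max_steps` bound is a design choice for the sketch: it guarantees termination even if a predictor never returns `None`.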

Conclusion

DIDACT turns Google’s software development process into training demonstrations for ML developer assistants, and uses those demonstrations to train models that construct code in a step-by-step fashion, interactively with tools and code reviewers. These innovations are already powering tools enjoyed by Google developers every day. The DIDACT approach complements the great strides taken by large language models at Google and elsewhere, towards technologies that ease toil, improve productivity, and enhance the quality of work of software engineers.

Acknowledgements

This work is the result of a multi-year collaboration among Google Research, Google Core Systems and Experiences, and DeepMind. We would like to acknowledge our colleagues Jacob Austin, Pascal Lamblin, Pierre-Antoine Manzagol, and Daniel Zheng, who join us as the key drivers of this project. This work could not have happened without the significant and sustained contributions of our partners at Alphabet (Peter Choy, Henryk Michalewski, Subhodeep Moitra, Malgorzata Salawa, Vaibhav Tulsyan, and Manushree Vijayvergiya), as well as the many people who collected data, identified tasks, built products, strategized, evangelized, and helped us execute on the many facets of this agenda (Ankur Agarwal, Paige Bailey, Marc Brockschmidt, Rodrigo Damazio Bovendorp, Satish Chandra, Savinee Dancs, Matt Frazier, Alexander Frömmgen, Nimesh Ghelani, Chris Gorgolewski, Chenjie Gu, Vincent Hellendoorn, Franjo Ivančić, Marko Ivanković, Emily Johnston, Luka Kalinovcic, Lera Kharatyan, Jessica Ko, Markus Kusano, Kathy Nix, Sara Qu, Marc Rasi, Marcus Revaj, Ballie Sandhu, Michael Sloan, Tom Small, Gabriela Surita, Maxim Tabachnyk, David Tattersall, Sara Toth, Kevin Villela, Sara Wiltberger, and Donald Duo Zhao) and our extremely supportive leadership (Martín Abadi, Joelle Barral, Jeff Dean, Madhura Dudhgaonkar, Douglas Eck, Zoubin Ghahramani, Hugo Larochelle, Chandu Thekkath, and Niranjan Tulpule). Thank you!
