
Take advantage of GPT-4’s outstanding knowledge and capabilities, and of its ability to process longer texts, now directly in your web apps. Also learn about some drawbacks of this new model, as revealed by my own tests.

As we were all expecting after Microsoft’s announcements last week, OpenAI released this week its new large language model, called GPT-4, which actually comes in several flavors. Yes, everything moves very fast indeed, so fast that OpenAI has already deployed GPT-4 for programmatic access through the Chat Completions endpoint of their API. This means we programmers can now use it in our programs or, as I show in my examples, directly in our web apps.

In the official presentation of GPT-4, OpenAI explained that this new model can solve difficult problems with greater accuracy than previous models, including the regular ChatGPT, thanks to its broader general knowledge and its advanced “reasoning” capabilities. The presentation also revealed that GPT-4 can process blocks of text 8 times larger than those that ChatGPT accepts, and that it accepts not only text inputs but also images, which it can understand and describe in reasonable ways.

Here I’ll show you how to use GPT-4’s text processing capabilities right on your web pages using vanilla JavaScript. I will also share some interesting findings, on both the positive and the negative sides.

If you want to know more about this new language model before you delve into source code, go check this other article and come back for the fun part:

The direct way to use GPT-4 in your web apps is very similar to what I showed you in this previous article, centered on GPT-3.5-turbo, which is the model behind the first version of ChatGPT:

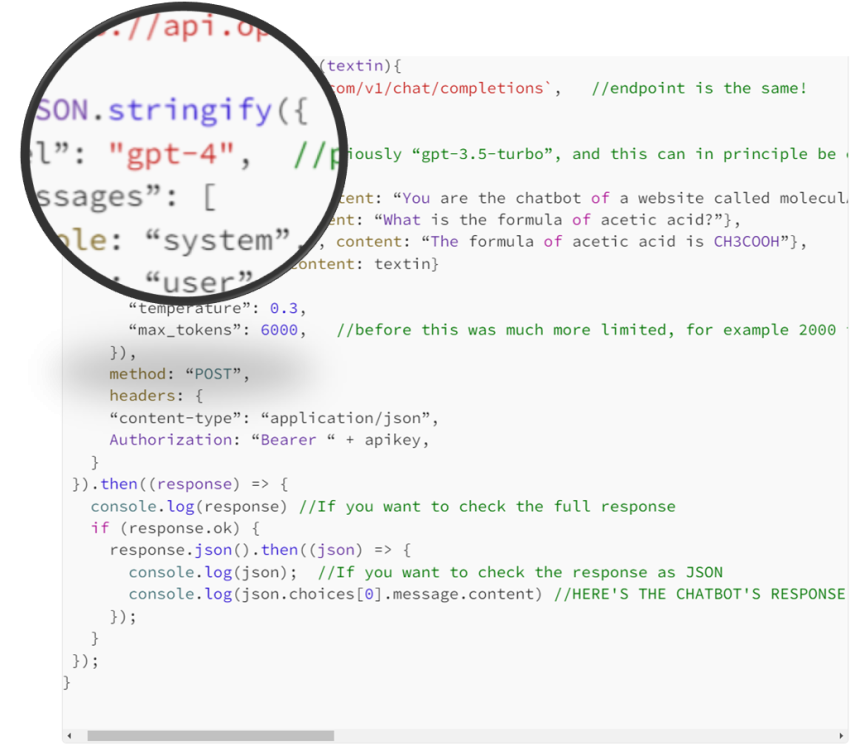

This is the core source code, where I’ve added comments noting the changes relative to the source code used to run gpt-3.5-turbo:

```javascript
var processinput = function (textin) {
  fetch(`https://api.openai.com/v1/chat/completions`, { // the endpoint is the same!
    body: JSON.stringify({
      "model": "gpt-4", // previously "gpt-3.5-turbo"; in principle this can be other flavors of GPT-4
      "messages": [
        { role: "system", content: "You are the chatbot of a website called moleculARweb, which provides educational material for chemistry using commodity augmented reality. You answer questions about the website, about chemistry, science, etc." },
        { role: "user", content: "What is the formula of acetic acid?" },
        { role: "assistant", content: "The formula of acetic acid is CH3COOH" },
        { role: "user", content: textin }
      ],
      "temperature": 0.3,
      "max_tokens": 6000, // before, this was much more limited, for example 2000 tokens
    }),
    method: "POST",
    headers: {
      "content-type": "application/json",
      Authorization: "Bearer " + apikey, // apikey must be defined elsewhere on the page
    },
  }).then((response) => {
    console.log(response) // if you want to check the full response
    if (response.ok) {
      response.json().then((json) => {
        console.log(json) // if you want to check the response as JSON
        console.log(json.choices[0].message.content) // here's THE CHATBOT'S RESPONSE
      })
    }
  })
}
```
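For readers who prefer async/await, the same request can be written as a function that returns the chatbot’s reply instead of only logging it. This is a minimal sketch, not the code used for the chatbot above: the function name `askGPT4`, its `apiKey` and `fetchImpl` parameters, the shortened system message (without the acetic acid example), and the choice to throw on a non-OK status are all my own assumptions. `fetchImpl` defaults to the global `fetch` and exists only so the function can be exercised without a network connection.

```javascript
// Hypothetical helper (not from the original code): sends one user message to
// the Chat Completions endpoint and resolves with the assistant's reply text.
async function askGPT4(textin, apiKey, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "content-type": "application/json",
      Authorization: "Bearer " + apiKey,
    },
    body: JSON.stringify({
      model: "gpt-4",
      messages: [
        // Shortened system message, for illustration only.
        { role: "system", content: "You are the chatbot of a website called moleculARweb." },
        { role: "user", content: textin },
      ],
      temperature: 0.3,
    }),
  });
  // Assumption: treat any non-2xx status as an error instead of ignoring it.
  if (!response.ok) {
    throw new Error("Request failed with status " + response.status);
  }
  const json = await response.json();
  // The reply is in the first choice's message, as in the code above.
  return json.choices[0].message.content;
}
```

In a page, you would call it as `askGPT4(userText, apikey).then((reply) => ...)` and place the reply in whatever element displays the conversation.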

As in previous posts where I explained how to call GPT-3’s API from JavaScript (for example, here, here, and here), I’m using a fetch() call that sends the API key and the texts to the engine via POST. So the core is similar to what you can see in this article:

But you have seen that for gpt-3.5-turbo and gpt-4 the input prompts must be formatted in a specific way, different from the one used in regular GPT-3 calls. You need to build an array of messages containing information about the “personality” of the chatbot (role “system”) and examples of messages exchanged between a user and the bot (roles “user” and “assistant”).
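To make that structure explicit, here is a small hypothetical helper, `buildMessages`, that assembles the array from a system instruction, a list of example question/answer pairs, and the new user input. The function name and argument shapes are my own choices for illustration; only the `role`/`content` objects mirror what the code above sends.

```javascript
// Hypothetical helper: builds the "messages" array for the Chat Completions API.
// `examples` is an array of [userText, assistantText] pairs used as few-shot examples.
function buildMessages(systemText, examples, userText) {
  const messages = [{ role: "system", content: systemText }];
  for (const [question, answer] of examples) {
    messages.push({ role: "user", content: question });
    messages.push({ role: "assistant", content: answer });
  }
  // The new question always goes last, with role "user".
  messages.push({ role: "user", content: userText });
  return messages;
}
```

Passing the moleculARweb system text, the single acetic acid pair, and `textin` reproduces the four-message array used in the code above.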

I modified my previous chatbot’s instructions so that it answers specifically about itself and about me, using information that I provide in the prompt. Here you have an example conversation:
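The Chat Completions endpoint does not remember earlier requests, so a multi-turn conversation only works if the page resends previous turns with each new question. The sketch below shows one common way to do that; the `Conversation` class, its methods, and the `maxTurns` cap (which drops the oldest exchanges to keep requests from growing without bound) are hypothetical, and I am not claiming this is how the chatbot shown here stores its history.

```javascript
// Hypothetical helper: keeps the running message history for a multi-turn chat.
// The endpoint is stateless, so every request must resend the prior turns.
class Conversation {
  constructor(systemText, maxTurns = 10) {
    this.system = { role: "system", content: systemText };
    this.turns = []; // alternating user/assistant messages, oldest first
    this.maxTurns = maxTurns; // maximum number of user+assistant pairs kept
  }

  // Returns the array to send as "messages" in the next request.
  messagesFor(userText) {
    return [this.system, ...this.turns, { role: "user", content: userText }];
  }

  // Records a completed exchange and drops the oldest pairs beyond maxTurns.
  record(userText, assistantText) {
    this.turns.push({ role: "user", content: userText });
    this.turns.push({ role: "assistant", content: assistantText });
    while (this.turns.length > 2 * this.maxTurns) {
      this.turns.splice(0, 2);
    }
  }
}
```

After each reply arrives, calling `record(question, reply)` keeps the history in sync, and `messagesFor(nextQuestion)` gives the array for the following request.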

Interesting notes, both positive and negative

By running this new chatbot, I found that it can correctly read and write longer texts (as you see in the examples above); however, this comes at the cost of speed. Indeed, in my tests, GPT-4 was somewhat slower than GPT-3.5-turbo.
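If you want to check the speed difference on your own setup, one simple approach is to time several identical requests per model and compare the averages, since a single call is strongly affected by network and server load. This is a minimal sketch; the helper name `meanLatencyMs` and the default of 3 runs are arbitrary choices of mine, and it uses `performance.now()`, which is available in browsers and in recent versions of Node.js.

```javascript
// Hypothetical helper: calls an async function `runs` times in sequence and
// returns the mean latency in milliseconds.
async function meanLatencyMs(fn, runs = 3) {
  let total = 0;
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    await fn(); // wait for the request to finish before starting the next one
    total += performance.now() - start;
  }
  return total / runs;
}
```

You would pass it a function that sends the same request, once with `"model": "gpt-4"` and once with `"model": "gpt-3.5-turbo"`, and compare the two results.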

Moreover, GPT-4 is 30 times more expensive than GPT-3.5-turbo per token: 6 cents vs. 0.2 cents per 1k tokens.
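To see what that ratio means for a single request, here is a rough cost estimator using the per-1k-token prices quoted above. It is a sketch under a simplifying assumption: it applies one flat price to every token, as those figures do. The names `PRICE_PER_1K_USD` and `estimateCostUsd` are hypothetical.

```javascript
// Prices per 1,000 tokens in US dollars, taken from the figures above
// (6 cents for GPT-4, 0.2 cents for GPT-3.5-turbo). One flat price per token.
const PRICE_PER_1K_USD = {
  "gpt-4": 0.06,
  "gpt-3.5-turbo": 0.002,
};

// Estimates the cost of a request from its total token count.
function estimateCostUsd(model, tokens) {
  const price = PRICE_PER_1K_USD[model];
  if (price === undefined) {
    throw new Error("Unknown model: " + model);
  }
  return (tokens / 1000) * price;
}
```

For example, 6000 tokens (the `max_tokens` value used above) would cost about 6 × 0.06 = 0.36 USD with GPT-4 versus 6 × 0.002 = 0.012 USD with GPT-3.5-turbo.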

GPT-4 can correctly handle and produce much longer texts than its predecessor GPT-3.5-turbo, but it is somewhat slower and much more expensive.
