
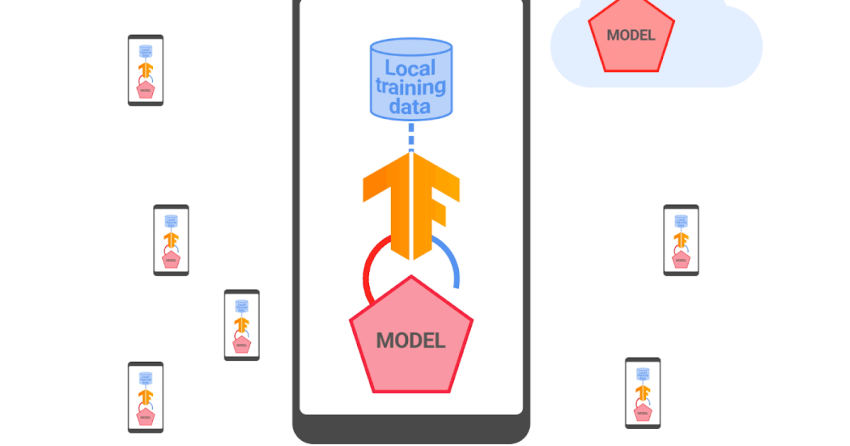

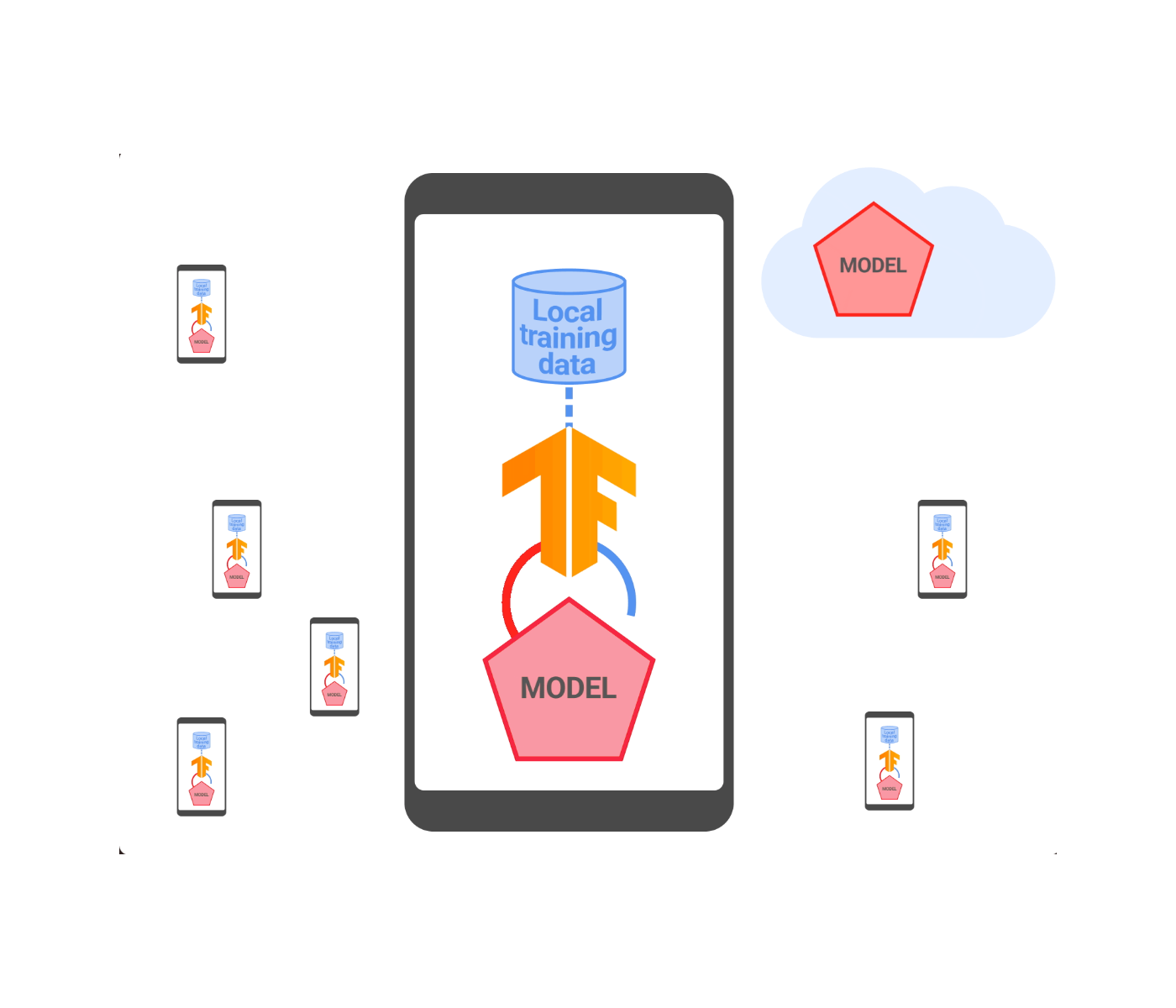

Federated learning is a distributed way of training machine learning (ML) models in which data is processed locally and only focused model updates and metrics intended for immediate aggregation are shared with a server that orchestrates training. This allows models to be trained on locally available signals without exposing raw data to servers, which improves user privacy. In 2021, we announced that we are using federated learning to train Smart Text Selection models. Smart Text Selection is an Android feature that helps users select and copy text easily by predicting what text they want to select and then automatically expanding the selection for them.
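To make the data flow concrete, here is a minimal sketch of federated averaging rounds in plain Python. The toy linear model, the helper names `local_update` and `federated_round`, the learning rate, and the simulated client data are all illustrative assumptions, not part of the Smart Text Selection system. The point is only that raw examples stay inside each client, and the server handles nothing but model deltas, which it averages.

```python
import random


def local_update(weights, examples, lr=0.1, epochs=5):
    """On-device SGD for a toy 1-D linear model y = w0 + w1 * x.

    Only the resulting weight delta is returned; the raw (x, y) pairs stay here.
    """
    w0, w1 = weights
    for _ in range(epochs):
        for x, y in examples:
            err = (w0 + w1 * x) - y
            w0 -= lr * err
            w1 -= lr * err * x
    return [w0 - weights[0], w1 - weights[1]]


def federated_round(weights, client_datasets):
    """Server: average the clients' deltas and apply the average to the global model."""
    deltas = [local_update(weights, data) for data in client_datasets]
    avg_delta = [sum(d[k] for d in deltas) / len(deltas) for k in range(len(weights))]
    return [w + a for w, a in zip(weights, avg_delta)]


# Hypothetical private datasets: each client holds 20 points near y = 1 + 2x.
rng = random.Random(0)
clients = []
for _ in range(10):
    xs = [rng.uniform(-1, 1) for _ in range(20)]
    clients.append([(x, 1 + 2 * x + rng.gauss(0, 0.01)) for x in xs])

weights = [0.0, 0.0]
for _ in range(20):
    weights = federated_round(weights, clients)
print([round(w, 2) for w in weights])  # approaches the underlying [1.0, 2.0]
```

In a real deployment the averaging step is exactly what secure aggregation protects, so the server would receive only the aggregate rather than the per-client `deltas` list.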

Since we launched federated training for Smart Text Selection, we have worked to improve the privacy guarantees of this technology by carefully combining secure aggregation (SecAgg) and a distributed version of differential privacy. In this post, we describe how we built and deployed the first federated learning system that provides formal privacy guarantees to all user data before it becomes visible to an honest-but-curious server, meaning a server that follows the protocol but could try to gain insights about users from the data it receives. The Smart Text Selection models trained with this system have reduced memorization by more than two-fold, as measured by standard empirical testing methods.

Scaling secure aggregation

Data minimization is an important privacy principle behind federated learning. It refers to focused data collection, early aggregation, and minimal data retention during training. While every device participating in a federated learning round computes a model update, the orchestrating server is only interested in their average. Therefore, in a world that optimizes for data minimization, the server would learn nothing about individual updates and would receive only an aggregate model update. This is precisely what the SecAgg protocol achieves, under rigorous cryptographic guarantees.
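The core idea that lets a server learn only the sum can be illustrated with pairwise additive masking, the technique underlying the original SecAgg design. This is a deliberately simplified toy under stated assumptions: the pairwise masks come from a seeded `random.Random` instead of a cryptographic key agreement, there is no dropout recovery or secret sharing, and the modulus `MOD = 2**32` and helper names are hypothetical. It is not Google's improved production protocol.

```python
import random

MOD = 2**32  # illustrative modulus, chosen arbitrarily for this toy


def pairwise_mask(i, j, length, seed=1234):
    """Hypothetical shared mask for the pair (i, j); both clients derive identical values.

    A real protocol would derive this from a key agreement, not a public seed.
    """
    rng = random.Random(f"{seed}-{min(i, j)}-{max(i, j)}")
    return [rng.randrange(MOD) for _ in range(length)]


def masked_input(client_id, update, num_clients):
    """Client i adds the mask shared with each j > i and subtracts the one shared with each j < i."""
    out = list(update)
    for other in range(num_clients):
        if other == client_id:
            continue
        mask = pairwise_mask(client_id, other, len(update))
        sign = 1 if client_id < other else -1
        out = [(v + sign * m) % MOD for v, m in zip(out, mask)]
    return out


updates = [[5, 7, 1], [2, 0, 9], [10, 3, 4]]  # integer-encoded client updates
n = len(updates)
masked = [masked_input(i, u, n) for i, u in enumerate(updates)]

# Each masked vector looks uniformly random on its own, but every mask cancels in the sum.
server_sum = [sum(col) % MOD for col in zip(*masked)]
print(server_sum)  # [17, 10, 14]
```

Because each pair's mask is added by one client and subtracted by the other, the server recovers the exact sum while each individual masked vector is uniformly distributed on its own.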

Two recent advancements, both central to this work, have improved the efficiency and scalability of SecAgg at Google:

- An improved cryptographic protocol: Until recently, a significant bottleneck in SecAgg was client computation, because the work required on each device scaled linearly with the total number of clients (N) participating in the round. In the new protocol, client computation scales logarithmically in N. Together with similar gains in server costs, this yields a protocol that can handle larger rounds. Having more users participate in each round improves privacy, both empirically and formally.

- Optimized client orchestration: SecAgg is an interactive protocol in which participating devices progress together. An important feature of the protocol is that it is robust to some devices dropping out: if a client does not send a response within a predefined time window, the protocol can continue without that client’s contribution. We have deployed statistical methods to auto-tune this time window adaptively, which improves protocol throughput.

These improvements made it easier and faster to train Smart Text Selection with stronger data minimization guarantees.
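The post does not say which statistical method tunes the response window. As a purely hypothetical illustration, one simple approach is to set the deadline slightly above a high empirical quantile of recently observed client response times, so the protocol waits for most clients but proceeds without stragglers. The function names, the 90th-percentile target, the 10% slack, and the simulated log-normal latencies are all assumptions.

```python
import random
import statistics


def adaptive_deadline(past_latencies, target_fraction=0.9, slack=1.1):
    """Hypothetical rule: wait slightly longer than it usually takes target_fraction of clients."""
    # With n=100, statistics.quantiles returns the 1st through 99th percentiles.
    percentiles = statistics.quantiles(past_latencies, n=100)
    return slack * percentiles[round(target_fraction * 100) - 1]


def surviving_clients(latencies, deadline):
    """Clients that miss the deadline are dropped; the round proceeds without them."""
    return [i for i, t in enumerate(latencies) if t <= deadline]


rng = random.Random(1)
history = [rng.lognormvariate(0, 0.5) for _ in range(1000)]  # simulated response times (s)
deadline = adaptive_deadline(history)

this_round = [rng.lognormvariate(0, 0.5) for _ in range(200)]
survivors = surviving_clients(this_round, deadline)
print(f"deadline={deadline:.2f}s, {len(survivors)}/200 clients included")
```

The trade-off being tuned is the one the paragraph describes: a longer window includes more clients per round, while a shorter one keeps slow devices from stalling everyone else.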

Aggregating everything through secure aggregation

A typical federated training system aggregates not only model updates but also metrics that describe the performance of the local training. These metrics are important for understanding model behavior and debugging potential training issues. In federated training for Smart Text Selection, all model updates and metrics are aggregated via SecAgg. This behavior is statically asserted using TensorFlow Federated and locally enforced in Android’s Private Compute Core secure environment. As a result, privacy is enhanced even further for users training Smart Text Selection, because unaggregated model updates and metrics are not visible to any part of the server infrastructure.

Differential privacy

SecAgg helps minimize data exposure, but it does not necessarily produce aggregates that are guaranteed not to reveal anything unique to an individual. This is where differential privacy (DP) comes in. DP is a mathematical framework that limits an individual’s influence on the outcome of a computation, such as the parameters of an ML model. This is accomplished by bounding the contribution of any individual user and adding noise during training to produce a probability distribution over output models. DP comes with a parameter (ε) that quantifies how much this distribution could change when the training examples of any individual user are added or removed (the smaller, the better).
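In symbols, the standard definition reads as follows, where M is the randomized training procedure, D and D′ are any two datasets that differ in all the training examples of a single user (the user-level notion described above), and S is any set of possible output models. Systems that use Gaussian-type noise usually state the relaxed (ε, δ) form shown second; δ is an addition not named in this post. The last line shows the bounding-and-noising step in its common central form, with update Δ_i from client i, clipping norm C, and noise multiplier σ as assumed symbols.

```latex
\varepsilon\text{-DP:}\quad \Pr[M(D) \in S] \le e^{\varepsilon} \Pr[M(D') \in S]

(\varepsilon, \delta)\text{-DP:}\quad \Pr[M(D) \in S] \le e^{\varepsilon} \Pr[M(D') \in S] + \delta

\bar{\Delta}_i = \Delta_i \cdot \min\left(1, \frac{C}{\lVert \Delta_i \rVert_2}\right), \qquad
\hat{\Delta} = \sum_{i=1}^{n} \bar{\Delta}_i + \mathcal{N}\left(0, \sigma^2 C^2 I\right)
```

For example, ε = 1 caps the ratio between the two output probabilities at e ≈ 2.72, whereas an ε in the hundreds permits a vastly larger ratio, which is why such guarantees are considered weak on paper.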

Recently, we announced a new method of federated training that enforces formal and meaningfully strong DP guarantees in a centralized manner, where a trusted server controls the training process. This protects against external attackers who may attempt to analyze the model. However, this approach still relies on trust in the central server. To provide even greater privacy protections, we have created a system that uses distributed differential privacy (DDP) to enforce DP in a distributed manner, integrated within the SecAgg protocol.

Distributed differential privacy

DDP is a technology that offers DP guarantees with respect to an honest-but-curious server coordinating training. It works by having each participating device clip and noise its update locally and then aggregating these noisy, clipped updates through the new SecAgg protocol described above. As a result, the server only sees the noisy sum of the clipped updates.
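The mechanics can be sketched in a few lines. This toy uses continuous Gaussian noise and a plain Python sum purely for readability; the deployed system works with integers inside SecAgg and uses discrete noise instead. The names `clip_l2` and `client_contribution` and the parameters `C` (clipping norm) and `sigma` (noise multiplier) are illustrative assumptions. The design point is that each of the `n` clients adds only a share of the noise, with variance `(sigma * C)**2 / n`, so the sum the server sees carries the full `(sigma * C)**2` variance while SecAgg hides every individual, only lightly noised, contribution.

```python
import math
import random


def clip_l2(update, C):
    """Scale the update so its L2 norm is at most C, bounding any one user's influence."""
    norm = math.sqrt(sum(v * v for v in update))
    factor = min(1.0, C / norm) if norm > 0 else 1.0
    return [v * factor for v in update]


def client_contribution(update, C, sigma, n, rng):
    """Clip locally, then add this client's share of the Gaussian noise."""
    share_std = sigma * C / math.sqrt(n)  # n shares of variance (sigma*C)**2 / n sum to (sigma*C)**2
    return [v + rng.gauss(0.0, share_std) for v in clip_l2(update, C)]


rng = random.Random(0)
n, C, sigma = 100, 1.0, 0.5
updates = [[rng.uniform(-3, 3) for _ in range(4)] for _ in range(n)]

# Stand-in for SecAgg: the server only ever learns this coordinate-wise sum.
contributions = [client_contribution(u, C, sigma, n, rng) for u in updates]
noisy_sum = [sum(col) for col in zip(*contributions)]

clipped_sum = [sum(col) for col in zip(*(clip_l2(u, C) for u in updates))]
print([round(a - b, 3) for a, b in zip(noisy_sum, clipped_sum)])  # total noise per coordinate
```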

However, combining local noise addition with SecAgg presents significant challenges in practice:

- An improved discretization method: One challenge is properly representing model parameters as integers in SecAgg’s finite group with integer modular arithmetic, which can inflate the norm of the discretized model and require more noise for the same privacy level. For example, randomized rounding to the nearest integers could inflate the user’s contribution by a factor equal to the number of model parameters. We addressed this by scaling the model parameters, applying a random rotation, and rounding to the nearest integers. We also developed an approach for auto-tuning the discretization scale during training. This led to an even more efficient and accurate integration between DP and SecAgg.

- Optimized discrete noise addition: Another challenge is devising a scheme for choosing an arbitrary number of bits per model parameter without sacrificing end-to-end privacy guarantees, which depend on how the model updates are clipped and noised. To address this, we added integer noise in the discretized domain and analyzed the DP properties of sums of integer noise vectors using the distributed discrete Gaussian and distributed Skellam mechanisms.

An overview of federated learning with distributed differential privacy.
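The discretization and noise steps described in the two challenges above can be sketched end to end. This is a simplified illustration under several assumptions. The random rotation is a randomized Hadamard transform (random sign flips followed by a normalized Walsh-Hadamard transform), a common choice that the post does not confirm. The scale `gamma`, the Skellam parameter `mu`, and all helper names are hypothetical. Clipping is omitted for brevity, and SecAgg’s modular sum is replaced by a plain integer sum. Skellam noise is sampled as the difference of two independent Poisson variables, so it is integer-valued, and sums of independent Skellam noise are again Skellam, which keeps the distributed analysis tractable.

```python
import math
import random


def fwht(vec):
    """Normalized fast Walsh-Hadamard transform (length must be a power of two).

    The normalized transform is orthogonal and is its own inverse.
    """
    v = list(vec)
    h = 1
    while h < len(v):
        for i in range(0, len(v), 2 * h):
            for j in range(i, i + h):
                a, b = v[j], v[j + h]
                v[j], v[j + h] = a + b, a - b
        h *= 2
    scale = 1 / math.sqrt(len(v))
    return [x * scale for x in v]


def random_signs(d, seed):
    """Random diagonal of +/-1 derived from a seed that clients and server share."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(d)]


def poisson(mu, rng):
    """Knuth's Poisson sampler; adequate for the small mu used here."""
    limit, k, p = math.exp(-mu), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def encode(update, gamma, mu, seed, rng):
    """Client: rotate, scale by gamma, round randomly to integers, add Skellam(mu) noise."""
    signs = random_signs(len(update), seed)
    rotated = fwht([s * x for s, x in zip(signs, update)])
    out = []
    for x in rotated:
        y = x * gamma
        lo = math.floor(y)
        rounded = lo + (1 if rng.random() < y - lo else 0)  # unbiased randomized rounding
        out.append(rounded + poisson(mu, rng) - poisson(mu, rng))  # Skellam = Poisson - Poisson
    return out


def decode(int_sum, gamma, seed):
    """Server: unscale, then undo the rotation (apply H, then the same signs)."""
    signs = random_signs(len(int_sum), seed)
    unrotated = fwht([v / gamma for v in int_sum])
    return [s * x for s, x in zip(signs, unrotated)]


rng = random.Random(0)
d, n, gamma, mu, seed = 8, 50, 256.0, 4.0, 42
updates = [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(n)]
encoded = [encode(u, gamma, mu, seed, rng) for u in updates]
int_sum = [sum(col) for col in zip(*encoded)]  # SecAgg would reveal this sum modulo 2**bits
estimate = decode(int_sum, gamma, seed)
true_sum = [sum(col) for col in zip(*updates)]
print(max(abs(a - b) for a, b in zip(estimate, true_sum)))
```

The rotation spreads each update’s mass evenly across coordinates before rounding, and a larger `gamma` makes rounding error relatively smaller but needs more bits per parameter, which is the tension that tuning the discretization scale has to resolve.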

We tested our DDP solution on a variety of benchmark datasets and in production, and validated that we can match the accuracy of central DP with a SecAgg finite group of size 12 bits per model parameter. This meant that we could achieve additional privacy advantages while also reducing memory and communication bandwidth. To demonstrate this, we applied the technology to train and launch Smart Text Selection models, using an appropriate amount of noise chosen to maintain model quality. All Smart Text Selection models trained with federated learning now come with DDP guarantees that apply to both the model updates and the metrics seen by the server during training. We have also open sourced the implementation in TensorFlow Federated.
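To make the 12-bit figure concrete: with 12 bits per parameter, SecAgg works modulo 2**12 = 4096, so every discretized and noised sum must stay within the signed range [-2048, 2047] to decode correctly. The minimal sketch below, with hypothetical helper names and made-up integer vectors, shows the modular sum, the signed decoding, and what goes wrong when that range is exceeded. The bandwidth comparison assumes uploads would otherwise use 32-bit floats, which the post does not state.

```python
BITS = 12
MOD = 2**BITS  # 4096


def modular_sum(vectors):
    """What SecAgg reveals: the coordinate-wise sum reduced modulo 2**BITS."""
    return [sum(col) % MOD for col in zip(*vectors)]


def to_signed(values):
    """Map residues in [0, MOD) back to the signed range [-MOD/2, MOD/2)."""
    return [v - MOD if v >= MOD // 2 else v for v in values]


small = [[300, -120, 15], [-410, 200, 7], [95, -60, -30]]
print(to_signed(modular_sum(small)))  # [-15, 20, -8]: the sums fit, so decoding is exact

too_big = [[1500, 0, 0], [1000, 0, 0]]
print(to_signed(modular_sum(too_big)))  # [-1596, 0, 0]: 2500 wrapped around

print(f"bits per parameter vs float32: {BITS / 32:.1%}")  # 37.5%
```

Overflow corrupts the aggregate silently, so fewer bits only work if the discretization scale keeps the noisy sum inside the group.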

Empirical privacy testing

While DDP adds formal privacy guarantees to Smart Text Selection, those formal guarantees are relatively weak (a finite but large ε, in the hundreds). However, any finite ε is an improvement over a model with no formal privacy guarantee, for two reasons: 1) a finite ε moves the model into a regime where further privacy improvements can be quantified, and 2) even large values of ε can indicate a substantial decrease in the ability to reconstruct training data from the trained model. To get a more concrete understanding of the empirical privacy advantages, we performed thorough analyses by applying the Secret Sharer framework to Smart Text Selection models. Secret Sharer is a model auditing technique that measures the degree to which models unintentionally memorize their training data.

To perform Secret Sharer analyses for Smart Text Selection, we set up control experiments that collect gradients using SecAgg. The treatment experiments use distributed differential privacy aggregators with different amounts of noise.

We found that even low amounts of noise reduce memorization meaningfully, more than doubling the Secret Sharer rank metric for relevant canaries compared to the baseline. This means that even though the DP ε is large, we empirically verified that these amounts of noise already help reduce memorization for this model. To improve on this further and obtain stronger formal guarantees, we aim to use even larger noise multipliers in the future.
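The Secret Sharer methodology (Carlini et al., 2019) inserts synthetic “canary” sequences into the training data and then checks how the trained model ranks each canary against a pool of random candidates of the same format. Below is a minimal sketch of the rank and the derived exposure metric. The log-perplexity values are made up for illustration, the two models are hypothetical, and the post does not specify how canaries were scored for Smart Text Selection. A higher rank (the canary looks less special to the model) means less memorization.

```python
import math


def canary_rank(canary_score, candidate_scores):
    """Rank 1 means the canary has lower log-perplexity than every random candidate."""
    return 1 + sum(1 for s in candidate_scores if s < canary_score)


def exposure(rank, space_size):
    """Secret Sharer exposure: log2 of the candidate-space size minus log2 of the rank."""
    return math.log2(space_size) - math.log2(rank)


# Made-up log-perplexities for 1,023 random candidates; with the canary, 1,024 in total.
candidates = [float(i) for i in range(1023)]
for label, canary_score in [("hypothetical model A", 4.5), ("hypothetical model B", 12.5)]:
    r = canary_rank(canary_score, candidates)
    print(f"{label}: rank={r}, exposure={exposure(r, 1024):.2f}")
```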

Next steps

We developed and deployed the first federated learning and distributed differential privacy system that comes with formal DP guarantees with respect to an honest-but-curious server. While it offers substantial additional protections, a fully malicious server might still be able to circumvent the DDP guarantees, either by manipulating the public key exchange of SecAgg or by injecting a sufficient number of “fake” malicious clients that do not add the prescribed noise into the aggregation pool. We are excited to address these challenges by continuing to strengthen the DP guarantee and its scope.

Acknowledgements

The authors would like to thank Adria Gascon for significant impact on the blog post itself, as well as the people who helped develop these ideas and bring them to practice: Ken Liu, Jakub Konečný, Brendan McMahan, Naman Agarwal, Thomas Steinke, Christopher Choquette, Adria Gascon, James Bell, Zheng Xu, Asela Gunawardana, Kallista Bonawitz, Mariana Raykova, Stanislav Chiknavaryan, Tancrède Lepoint, Shanshan Wu, Yu Xiao, Zachary Charles, Chunxiang Zheng, Daniel Ramage, Galen Andrew, Hugo Song, Chang Li, Sofia Neata, Ananda Theertha Suresh, Timon Van Overveldt, Zachary Garrett, Wennan Zhu, and Lukas Zilka. We’d also like to thank Tom Small for creating the animated figure.
