
People agree: accelerated computing is energy-efficient computing.

The National Energy Research Scientific Computing Center (NERSC), the U.S. Department of Energy’s lead facility for open science, measured results across four of its key high performance computing and AI applications.

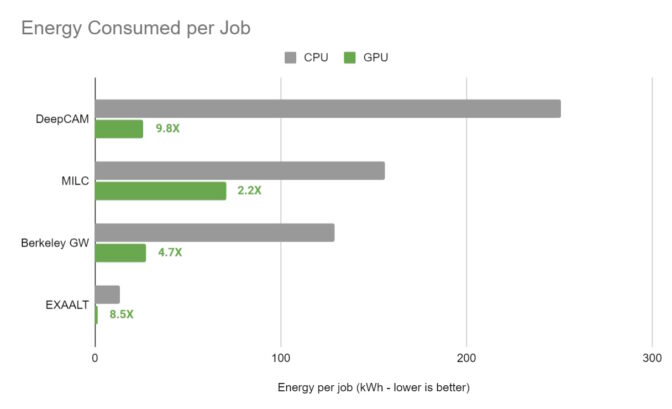

They clocked how fast the applications ran and how much energy they consumed on CPU-only and GPU-accelerated nodes on Perlmutter, one of the world’s largest supercomputers using NVIDIA GPUs.

The results were clear. Accelerated with NVIDIA A100 Tensor Core GPUs, energy efficiency rose 5x on average. An application for weather forecasting logged gains of 9.8x.
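
As a rough illustration of how an energy-efficiency gain like this can be derived from runtime and power readings, here is a minimal Python sketch. The function name and the sample readings are hypothetical; they are not NERSC's measurements or its exact method. The sketch assumes energy is simply average power multiplied by runtime for the same job on each node type.

```python
def energy_efficiency_gain(cpu_seconds, cpu_watts, gpu_seconds, gpu_watts):
    """Ratio of energy used by a CPU-only run to a GPU-accelerated run of the same job."""
    cpu_joules = cpu_seconds * cpu_watts  # energy = average power x runtime
    gpu_joules = gpu_seconds * gpu_watts
    return cpu_joules / gpu_joules


# Hypothetical readings: the GPU node runs 12x faster but draws 3x the power.
gain = energy_efficiency_gain(
    cpu_seconds=1200.0, cpu_watts=500.0,
    gpu_seconds=100.0, gpu_watts=1500.0,
)
print(f"{gain:.1f}x")  # prints "4.0x"
```

The point of the sketch is that a speedup alone does not give the efficiency gain; the higher power draw of the accelerated node has to be divided out.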

GPUs Save Megawatts

On a server with four A100 GPUs, NERSC got up to 12x speedups over a dual-socket x86 server.

That means, at the same performance level, the GPU-accelerated system would consume 588 megawatt-hours less energy per month than a CPU-only system. Running the same workload on a four-way NVIDIA A100 cloud instance for a month, researchers could save more than $4 million compared to a CPU-only instance.
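
To put the monthly figure in perspective, the short Python sketch below converts 588 megawatt-hours per month into an average continuous power saving. The 30-day month is an assumption made here for the arithmetic, not a figure from NERSC.

```python
MWH_SAVED_PER_MONTH = 588.0   # from the article
HOURS_PER_MONTH = 30 * 24     # assumption: a 30-day month

# Average power saved if the saving is spread evenly over the month.
avg_megawatts_saved = MWH_SAVED_PER_MONTH / HOURS_PER_MONTH
print(f"{avg_megawatts_saved * 1000:.0f} kW")  # prints "817 kW"
```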

Measuring Real-World Applications

The results are significant because they are based on measurements of real-world applications, not synthetic benchmarks.

The gains mean that the 8,000+ researchers using Perlmutter can tackle bigger challenges, opening the door to more breakthroughs.

Among the many use cases for the more than 7,100 A100 GPUs on Perlmutter, researchers are probing subatomic interactions to find new green energy sources.

Advancing Science at Every Scale

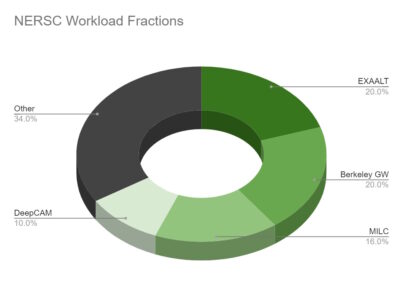

The applications NERSC tested span molecular dynamics, material science and weather forecasting.

For example, MILC simulates the fundamental forces that hold particles together in an atom. It’s used to advance quantum computing, study dark matter and search for the origins of the universe.

BerkeleyGW helps simulate and predict optical properties of materials and nanostructures, a key step toward developing more efficient batteries and electronic devices.

EXAALT, which got an 8.5x efficiency gain on A100 GPUs, solves a fundamental challenge in molecular dynamics. It lets researchers simulate the equivalent of short videos of atomic movements rather than the sequences of snapshots other tools provide.

The fourth application in the tests, DeepCAM, is used to detect hurricanes and atmospheric rivers in climate data. It got a 9.8x gain in energy efficiency when accelerated with A100 GPUs.

Savings With Accelerated Computing

The NERSC results echo earlier calculations of the potential savings with accelerated computing. For example, in a separate analysis NVIDIA conducted, GPUs delivered 42x better energy efficiency on AI inference than CPUs.

That means switching all the CPU-only servers running AI worldwide to GPU-accelerated systems could save a whopping 10 trillion watt-hours of energy a year. That’s like saving the energy 1.4 million homes consume in a year.
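
The comparison can be sanity-checked with a few lines of Python. Both inputs come from the article; the per-home figure is only what those two numbers imply, not a separately sourced statistic.

```python
WH_SAVED_PER_YEAR = 10e12   # 10 trillion watt-hours, from the article
HOMES = 1.4e6               # 1.4 million homes, from the article

# Implied annual consumption per home, converted from Wh to kWh.
kwh_per_home = WH_SAVED_PER_YEAR / HOMES / 1000
print(f"{kwh_per_home:,.0f} kWh per home per year")  # prints "7,143 kWh per home per year"
```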

Accelerating the Enterprise

You don’t have to be a scientist to get gains in energy efficiency with accelerated computing.

Pharmaceutical companies are using GPU-accelerated simulation and AI to speed the process of drug discovery. Carmakers like BMW Group are using it to model entire factories.

They’re among the growing ranks of enterprises at the forefront of what NVIDIA founder and CEO Jensen Huang calls an industrial HPC revolution, fueled by accelerated computing and AI.
