Snowflake is a SaaS, i.e., software as a service, that is well suited for running analytics on large volumes of data. The platform is easy to use and lets business users, analytics teams, etc., get value from ever-increasing datasets. This article goes through the components of building a streaming semi-structured analytics platform on Snowflake for healthcare data. We will also go through some key considerations during this phase.

The healthcare industry as a whole supports many different data formats, but we will consider one of the latest semi-structured formats, FHIR (Fast Healthcare Interoperability Resources), for building our analytics platform. This format usually holds all the patient-centric information embedded within a single JSON document. It carries a wealth of information, such as all hospital encounters, lab results, etc. The analytics team, when provided with a queryable data lake, can extract valuable information such as how many patients were diagnosed with cancer. Let's assume that all such JSON files are pushed to AWS S3 (or any other public cloud storage) every 15 minutes through different AWS services or end API endpoints.
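To make the shape of such a document concrete, here is a heavily trimmed, hypothetical bundle and a small Python helper that picks out resources by type. The patient, encounter, and condition values are invented for illustration; real FHIR documents carry far more resources and fields.

```python
import json

# Hypothetical, heavily trimmed FHIR-style bundle (illustrative values only).
SAMPLE_BUNDLE = json.loads("""
{
  "resourceType": "Bundle",
  "id": "b1",
  "type": "collection",
  "entry": [
    {"resource": {"resourceType": "Patient", "id": "p1"}},
    {"resource": {"resourceType": "Encounter", "id": "e1",
                  "subject": {"reference": "Patient/p1"}}},
    {"resource": {"resourceType": "Condition", "id": "c1",
                  "subject": {"reference": "Patient/p1"},
                  "code": {"text": "Malignant neoplasm of breast"}}}
  ]
}
""")


def resources_of_type(bundle, resource_type):
    """Return every resource in the bundle whose resourceType matches."""
    return [
        entry["resource"]
        for entry in bundle.get("entry", [])
        if entry.get("resource", {}).get("resourceType") == resource_type
    ]


conditions = resources_of_type(SAMPLE_BUNDLE, "Condition")
print([c["code"]["text"] for c in conditions])
```

Everything about one patient sits in the nested `entry` array, which is why the reporting layer later has to unnest it before analysts can query it comfortably.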

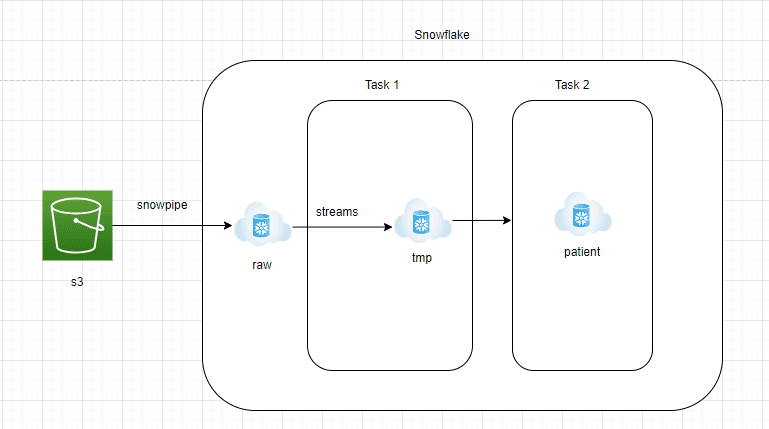

- AWS S3 to Snowflake Raw zone:

  - Data needs to be continuously streamed from AWS S3 into the Raw zone of Snowflake.

  - Snowflake offers Snowpipe, a managed service that can read JSON files from S3 in a continuous streaming fashion.

  - A table with a VARIANT column needs to be created in the Snowflake Raw zone to hold the JSON data in its native format.

- Snowflake Raw zone to Streams:

  - Streams is a managed change data capture service that captures all the new JSON documents arriving in the Snowflake Raw zone.

  - The stream would be pointed at the Snowflake Raw zone table and should be created as append-only (`APPEND_ONLY = TRUE`).

  - Streams can be queried just like any table.

- Snowflake Task 1:

  - A Snowflake Task is an object similar to a scheduler. Queries or stored procedures can be scheduled to run using cron notation.

  - In this architecture, we create Task 1 to fetch the data from Streams and ingest it into a staging table. This layer would be truncated and reloaded on every run.

  - This is done to ensure new JSON documents are processed every 15 minutes.

- Snowflake Task 2:

  - This layer will convert the raw JSON documents into reporting tables that the analytics team can easily query.

  - To convert JSON documents into a structured format, the lateral flatten feature of Snowflake can be used.

  - Lateral flatten is an easy-to-use function that explodes nested array elements into rows, whose fields can then be extracted using the ‘:’ notation.
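The four steps above could be wired together roughly as follows. This is a minimal sketch, not the author's actual setup: every object name (`raw.fhir_raw`, `raw.fhir_stage`, `analytics_wh`, and so on) is a hypothetical placeholder, the schemas, warehouse, and external S3 stage are assumed to exist already, and the Snowflake statements are kept in a Python list so they can be run through any DB-API style cursor.

```python
# All object names are hypothetical placeholders. The schemas, the warehouse,
# and the external stage @raw.fhir_stage are assumed to exist already.
PIPELINE_SQL = [
    # Raw zone: a single VARIANT column keeps each JSON document as-is.
    "CREATE TABLE IF NOT EXISTS raw.fhir_raw (doc VARIANT)",
    # Snowpipe: load new files from the stage as they arrive
    # (AUTO_INGEST also needs S3 event notifications to be configured).
    """CREATE PIPE IF NOT EXISTS raw.fhir_pipe AUTO_INGEST = TRUE AS
       COPY INTO raw.fhir_raw FROM @raw.fhir_stage
       FILE_FORMAT = (TYPE = 'JSON')""",
    # Stream: append-only change capture on the raw table.
    """CREATE STREAM IF NOT EXISTS raw.fhir_raw_stream
       ON TABLE raw.fhir_raw APPEND_ONLY = TRUE""",
    "CREATE TABLE IF NOT EXISTS staging.fhir_staging (doc VARIANT)",
    """CREATE TABLE IF NOT EXISTS reporting.fhir_resources
       (bundle_id STRING, resource_type STRING, resource_id STRING)""",
    # Task 1: every 15 minutes, truncate and reload staging from the stream.
    """CREATE TASK IF NOT EXISTS staging.load_staging
       WAREHOUSE = analytics_wh
       SCHEDULE = 'USING CRON */15 * * * * UTC'
       WHEN SYSTEM$STREAM_HAS_DATA('raw.fhir_raw_stream')
       AS INSERT OVERWRITE INTO staging.fhir_staging
          SELECT doc FROM raw.fhir_raw_stream""",
    # Task 2: runs after Task 1 and flattens the nested entry array into rows.
    """CREATE TASK IF NOT EXISTS staging.build_reporting
       WAREHOUSE = analytics_wh
       AFTER staging.load_staging
       AS INSERT INTO reporting.fhir_resources
          SELECT s.doc:id::STRING,
                 e.value:resource:resourceType::STRING,
                 e.value:resource:id::STRING
          FROM staging.fhir_staging s,
               LATERAL FLATTEN(input => s.doc:entry) e""",
    # Tasks are created suspended; resume the child before the root task.
    "ALTER TASK staging.build_reporting RESUME",
    "ALTER TASK staging.load_staging RESUME",
]


def deploy(cursor):
    """Run each statement in order on a DB-API style cursor."""
    for statement in PIPELINE_SQL:
        cursor.execute(statement)
```

With the `snowflake-connector-python` package, the cursor could come from `snowflake.connector.connect(...).cursor()`. `INSERT OVERWRITE` is used here as one way to get the truncate-and-reload behaviour in a single statement; a stored procedure would work as well.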

Some key considerations for this architecture:

- Snowpipe is recommended for use with a few large files. Costs can climb if small files on external storage are not combined before loading.

- In a production environment, ensure automated processes are in place to monitor streams, because once they go stale, data can’t be recovered from them.

- The maximum permitted size of a single JSON document that can be loaded into Snowflake is 16 MB compressed. If you have huge JSON documents that exceed this size limit, make sure you have a process to split them before ingesting them into Snowflake.
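One way to handle the size limit is to split an oversized bundle before it is written to S3. The sketch below is a hypothetical pre-processing helper, not a Snowflake feature: it assumes a FHIR-style document with a top-level `entry` array and measures the uncompressed serialized size, which is a conservative stand-in for the compressed limit.

```python
import json

MAX_BYTES = 16 * 1024 * 1024  # limit quoted above; adjust if yours differs


def serialized_size(obj):
    """Size in bytes of the object once serialized as UTF-8 JSON."""
    return len(json.dumps(obj).encode("utf-8"))


def split_bundle(bundle, max_bytes=MAX_BYTES):
    """Split a bundle into smaller bundles that each stay within max_bytes.

    Top-level fields other than "entry" are copied into every chunk.
    A single entry that is itself larger than max_bytes still ends up
    alone in an oversized chunk and needs separate handling.
    A bundle without entries yields an empty list.
    """
    header = {k: v for k, v in bundle.items() if k != "entry"}
    chunks, current = [], []
    for entry in bundle.get("entry", []):
        candidate = current + [entry]
        if current and serialized_size({**header, "entry": candidate}) > max_bytes:
            # Adding this entry would overflow: close the current chunk.
            chunks.append({**header, "entry": current})
            current = [entry]
        else:
            current = candidate
    if current:
        chunks.append({**header, "entry": current})
    return chunks
```

Re-serializing the candidate chunk on every step keeps the logic simple at the cost of extra work; for very large bundles a running size counter would be cheaper. Note that splitting spreads one patient's resources across several rows, so the reporting layer should join on the shared bundle or patient identifier.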

Handling semi-structured data is always challenging because of the nested structure of the elements embedded in the JSON documents. Consider the gradual and exponential growth in the volume of incoming data before designing the final reporting layer. This article aims to demonstrate how easy it is to build a streaming pipeline with semi-structured data.

Milind Chaudhari is a seasoned data engineer/data architect who has a decade of work experience in building data lakes/lakehouses using a variety of conventional & modern tools. He is extremely passionate about data streaming architecture and is also a technical reviewer with Packt & O’Reilly.
