In this tutorial, I’ll cover the basics of PyTorch: how to prepare a dataset, construct the network, define training and validation loops, save the model, and finally test the saved model.

Welcome to another of my introductory tutorials, this time on PyTorch! In this tutorial, I will show you how to use PyTorch to classify MNIST digits with convolutional neural networks. In previous tutorials, I demonstrated basic concepts using TensorFlow. This time, I will focus on PyTorch and explain how to transition from TensorFlow to PyTorch, or where to begin if you are new to both. Before I get into the details, I will introduce PyTorch and its features.

PyTorch is an open-source machine-learning library based on the Torch library. It was originally developed by Facebook’s AI research division (now Meta AI) and is widely used for deep learning applications. In this tutorial, we will build a simple digit (MNIST) classifier using PyTorch. We will start by setting up our environment and loading the dataset. We will then define our model and train it on the dataset. Finally, we will test the model and make predictions.

First, let’s talk about the differences between TensorFlow and PyTorch. PyTorch asks you to write more of the code yourself, such as the training loop, which can take time. However, it offers more flexibility, making it a desirable option for some users. PyTorch also has a lot of pre-built models, such as ResNet, which can be used for transfer learning. In my experience, PyTorch also has better documentation and a more straightforward debugging process than TensorFlow.

This tutorial will consist of two parts:

Part 1: Setting up the Environment and Loading the Dataset

- Installing PyTorch and other dependencies

- Loading the MNIST dataset

- Preparing the dataset for training

- Visualizing the dataset

Part 2: Defining and Training the Model

- Defining the model architecture

- Setting up the training loop

- Training the model on the dataset

- Testing the model and making predictions

Prerequisites:

Before we begin, you will need to have the following software installed:

- Python 3;

- torch;

- torchsummary.

To start using PyTorch, install it with “pip install torch”; the resulting build runs fine on the CPU. You don’t need to worry about GPU support, as this tutorial is relatively small, but if you want it, you can find installation instructions on the PyTorch website.

Preparing the Dataset

After installation, we need to download the MNIST dataset. The download code is specific to MNIST: it fetches the dataset files from a fixed link.

This code downloads the files into “path='Datasets/mnist'”. We will have training data, training targets, validation data, and validation targets, with the training set containing 60,000 images and the validation set containing 10,000 images.
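
As a rough sketch of the decoding half of that step, assume the downloaded files are the gzip-compressed IDX files in which MNIST is usually distributed. The function names “parse_idx_images” and “parse_idx_labels” are my own, and the download itself is left out so the example runs offline on a tiny synthetic file:

```python
import gzip

import numpy as np


def parse_idx_images(raw: bytes) -> np.ndarray:
    """Decode a gzip-compressed IDX image file into an (N, 28, 28) uint8 array."""
    data = gzip.decompress(raw)
    # The first 16 bytes are the header (magic number, count, rows, columns).
    return np.frombuffer(data, dtype=np.uint8, offset=16).reshape(-1, 28, 28)


def parse_idx_labels(raw: bytes) -> np.ndarray:
    """Decode a gzip-compressed IDX label file into an (N,) uint8 array."""
    data = gzip.decompress(raw)
    # The first 8 bytes are the header (magic number, count).
    return np.frombuffer(data, dtype=np.uint8, offset=8)


# Synthetic stand-in for a downloaded file: a zeroed header plus 2 blank images.
fake_images = gzip.compress(bytes(16) + bytes(2 * 28 * 28))
print(parse_idx_images(fake_images).shape)  # (2, 28, 28)
```

With real data, “raw” would be the bytes read from each downloaded file.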

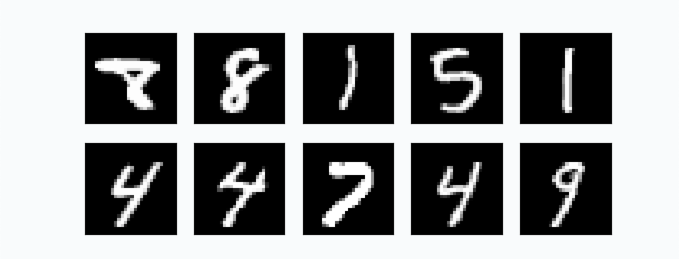

To visualize a few examples from our dataset, we can use the OpenCV Python package.

We enlarge the images purely for visualization: the originals are only 28 by 28 pixels, which is very small.

If we checked our data’s shape, we would see the training shape (60000, 28, 28) and the validation shape (10000, 28, 28).

We will normalize these images between zero and one. This will make it easier for the neural network to learn. Additionally, we will expand the dimensions of the images to prepare them for the convolutional layer.

If we checked the shape of our data after these steps, we would see that the training shape now is (60000, 1, 28, 28) and the validation shape (10000, 1, 28, 28).
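
Both steps fit in a few lines of NumPy. This is a sketch on random stand-in data rather than the real dataset, and “preprocess” is a name I made up for illustration:

```python
import numpy as np


def preprocess(images: np.ndarray) -> np.ndarray:
    """Scale uint8 pixels to [0, 1] and add a channel axis for the conv layers."""
    scaled = images.astype(np.float32) / 255.0
    # (N, 28, 28) -> (N, 1, 28, 28): one grayscale channel per image.
    return np.expand_dims(scaled, axis=1)


# Random stand-in for the raw images (100 instead of 60,000 to keep it light).
fake_images = np.random.randint(0, 256, size=(100, 28, 28), dtype=np.uint8)
prepared = preprocess(fake_images)
print(prepared.shape)  # (100, 1, 28, 28)
```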

Still, we can’t train the model on 60000 images at once. We need to create smaller training batches because our CPU or GPU usually can’t handle such a large batch. So, we split our data into batches of 64 items each.
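
One simple way to do the split, sketched here with a hypothetical helper “make_batches” and zero-filled stand-in arrays, is to slice the data into a list of chunks:

```python
import numpy as np


def make_batches(array: np.ndarray, batch_size: int = 64) -> list:
    """Split an array along its first axis into a list of batches."""
    return [array[i:i + batch_size] for i in range(0, len(array), batch_size)]


# Stand-in data: 200 blank images and their targets.
data = np.zeros((200, 1, 28, 28), dtype=np.float32)
targets = np.zeros(200, dtype=np.int64)

data_batches = make_batches(data)
target_batches = make_batches(targets)
print(len(data_batches), data_batches[-1].shape)  # 4 (8, 1, 28, 28)
```

Note that the last batch can be smaller than the rest. With 60,000 training images and a batch size of 64, this gives 938 batches, the last one holding 32 images.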

Building the Network

Excellent. At this point, our MNIST dataset is ready to be used for training and validation. Now we can construct a simple CNN model to train on it.

In PyTorch, a good way to build a network is to create a new class for it. We’ll use two 2-D convolutional layers followed by two fully-connected (or linear) layers. As the activation function, we’ll choose rectified linear units (ReLUs for short), and as a means of regularization, we’ll use two dropout layers. Let’s import a few submodules here for more readable code.

Broadly speaking, we can think of the “torch.nn” layers as the ones that contain trainable parameters, while “torch.nn.functional” is purely functional. The forward() pass defines how we compute our output using the given layers and functions. It is perfectly fine to print out tensors somewhere in the forward pass for easier debugging, which comes in handy when experimenting with more complex models. Note that the forward pass could make use of, e.g., a member variable or even the data itself to determine the execution path, and it can also take multiple arguments.
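
To make this concrete, here is one possible network matching the description above: two convolutional layers, two linear layers, ReLU activations, and two dropout layers. The channel counts, kernel sizes, and hidden size are my assumptions (they follow a common MNIST example), so treat it as a sketch rather than the exact model:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Layers with trainable parameters live in torch.nn.
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        # (N, 1, 28, 28) -> conv -> (N, 10, 24, 24) -> pool -> (N, 10, 12, 12)
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        # -> conv -> (N, 20, 8, 8) -> pool -> (N, 20, 4, 4)
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)  # flatten 20 * 4 * 4 features
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        # Log-probabilities, to be paired with a negative log-likelihood loss.
        return F.log_softmax(x, dim=1)


network = Net()
output = network(torch.zeros(4, 1, 28, 28))
print(output.shape)  # torch.Size([4, 10])
```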

Now let’s initialize the network and the optimizer.

If we were using a GPU for training, we should also send the network parameters to the GPU using, e.g., “network.cuda()”. It is essential to transfer the network’s parameters to the appropriate device before passing them to the optimizer; otherwise, the optimizer will not be able to keep track of them correctly. But since this tutorial does not focus on the GPU part, skipping this step and practicing on the CPU is fine.
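
The ordering matters more than the specific calls, so here is a small sketch of it. The tiny placeholder model, the Adam optimizer, and the learning rate of 0.001 are assumptions of mine, not necessarily what the full tutorial code uses:

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Placeholder model so the snippet runs on its own.
network = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))

# Pick the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Step 1: move the parameters to the target device.
network.to(device)

# Step 2: only then hand the parameters to the optimizer.
optimizer = optim.Adam(network.parameters(), lr=0.001)
```

On a machine without a GPU, this simply keeps everything on the CPU, so the same code works in both cases.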

Our model summary would give us the following results:

Training the Model

Time to build our training loop. First, we make sure our network is in training mode. Then we iterate over all the training data once per epoch. Loading the individual batches is handled by iterating over our lists of training data and targets. For each batch, we first need to manually set the gradients to zero using optimizer.zero_grad(), since PyTorch accumulates gradients by default. We then produce the output of our network (forward pass) and compute a negative log-likelihood loss between the output and the ground-truth label. The backward() call computes a new set of gradients for each of the network’s parameters, and optimizer.step() uses them to update the parameters. For more detailed information about the inner workings of PyTorch’s automatic gradient system, see the official docs for autograd (highly recommended).
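
The steps above can be sketched as a single function that runs one epoch. The function name, the small stand-in model, and the random batches are mine, and the batches are assumed to be tensors already:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


def train_epoch(network, optimizer, data_batches, target_batches):
    """Run one pass over the training batches and return the mean batch loss."""
    network.train()  # enable training behaviour such as dropout
    total_loss = 0.0
    for data, target in zip(data_batches, target_batches):
        optimizer.zero_grad()              # clear gradients from the last step
        output = network(data)             # forward pass
        loss = F.nll_loss(output, target)  # expects log-probabilities
        loss.backward()                    # compute gradients
        optimizer.step()                   # update the parameters
        total_loss += loss.item()
    return total_loss / len(data_batches)


# Stand-in model and random data so the sketch runs on its own.
torch.manual_seed(0)
network = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10), nn.LogSoftmax(dim=1))
optimizer = optim.Adam(network.parameters(), lr=0.001)
data_batches = [torch.rand(64, 1, 28, 28) for _ in range(3)]
target_batches = [torch.randint(0, 10, (64,)) for _ in range(3)]

print(train_epoch(network, optimizer, data_batches, target_batches))
```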

We’ll also keep track of the training with a beautiful progress bar.

Now for our test loop. Similarly, here, we sum up the test loss and keep track of correctly classified digits to compute the accuracy of the network.

Using the context manager no_grad(), we avoid recording the computations that produce our network’s output in the computation graph, which saves memory and time when we don’t need gradients.
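
A test loop along these lines might look as follows. As before, the function name, the stand-in model, and the random batches are assumptions for illustration only:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def evaluate(network, data_batches, target_batches):
    """Return (mean loss per image, accuracy) over the given batches."""
    network.eval()  # disable dropout for evaluation
    total_loss, correct, seen = 0.0, 0, 0
    with torch.no_grad():  # no computation graph is built inside this block
        for data, target in zip(data_batches, target_batches):
            output = network(data)
            # Sum (rather than average) so batches of different sizes weigh correctly.
            total_loss += F.nll_loss(output, target, reduction="sum").item()
            predictions = output.argmax(dim=1)
            correct += (predictions == target).sum().item()
            seen += target.size(0)
    return total_loss / seen, correct / seen


# Stand-in model and random data so the sketch runs on its own.
network = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10), nn.LogSoftmax(dim=1))
data_batches = [torch.rand(64, 1, 28, 28) for _ in range(2)]
target_batches = [torch.randint(0, 10, (64,)) for _ in range(2)]

test_loss, accuracy = evaluate(network, data_batches, target_batches)
print(f"loss: {test_loss:.4f}, accuracy: {accuracy:.2%}")
```

An untrained model like this stand-in should land near chance level, so the printed accuracy here says nothing about the real network.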

Time to run the training loop for 5 epochs with a simple call:

You should see very similar results:

And that’s it. We achieved 98% accuracy on the test set with just five training epochs!

Neural network modules and optimizers can save and load their internal state using .state_dict(). With this, we can continue the training from previously saved state dicts if needed. For now, we will just save our trained model state.
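
Here is a minimal round trip, assuming a placeholder model and a hypothetical file name “model.pt” inside a temporary folder (the real script would use its own path):

```python
import os
import tempfile

import torch
import torch.nn as nn

network = nn.Linear(4, 2)  # placeholder for the trained model

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "model.pt")  # hypothetical file name

    # Save only the parameters, not the whole Python object.
    torch.save(network.state_dict(), path)

    # To load, build the same architecture first, then restore the state.
    restored = nn.Linear(4, 2)
    restored.load_state_dict(torch.load(path))

print(torch.equal(network.weight, restored.weight))  # True
```

The optimizer’s state can be stored the same way through optimizer.state_dict(), which is what makes resuming training possible.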

Testing the Model’s Performance

Now let’s write a short script that demonstrates how to test the performance of a trained PyTorch model on the MNIST dataset. Here’s a step-by-step walkthrough:

1. Import the necessary libraries:

2. Set the path to the downloaded MNIST dataset, and define a fetch function that downloads the dataset if it doesn’t exist and returns it as a numpy array.

3. Load the trained PyTorch model and set it to evaluation mode.

4. Loop over the test images and targets, normalizing and converting each image to a PyTorch tensor, and using the model to predict the corresponding label. Then, resize and display the image with the predicted label as the window name.

5. Finally, the window shows us the results, and the loop is broken when the ‘q’ key is pressed.
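
The prediction part of step 4 can be sketched like this. The helper name “predict_digit” and the untrained stand-in model are mine, and the OpenCV display from steps 4 and 5 is left out so the snippet runs without a screen:

```python
import numpy as np
import torch
import torch.nn as nn


def predict_digit(network: nn.Module, image: np.ndarray) -> int:
    """Predict the label of a single 28x28 uint8 image."""
    network.eval()
    # Same normalization as in training: scale pixels to [0, 1].
    inputs = torch.from_numpy(image.astype(np.float32) / 255.0)
    # (28, 28) -> (1, 1, 28, 28): add batch and channel dimensions.
    inputs = inputs.unsqueeze(0).unsqueeze(0)
    with torch.no_grad():
        output = network(inputs)
    return int(output.argmax(dim=1).item())


# Untrained stand-in model and a random image, purely for illustration.
network = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10), nn.LogSoftmax(dim=1))
image = np.random.randint(0, 256, size=(28, 28), dtype=np.uint8)
print(predict_digit(network, image))
```

In the real script, the network would be the trained model with its saved state loaded, and each prediction would be shown next to the enlarged image.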

Although this is a simple example, it is a great starting point for a beginner learning PyTorch. In the next tutorial, we will introduce a PyTorch wrapper to simplify the training process and automate some of the steps we took in this tutorial.

In this tutorial, we have learned how to build a simple digit classifier using PyTorch. We started by setting up our environment and loading the dataset. We then defined our model and trained it on the dataset. Finally, we tested the model and made predictions. This tutorial is a great starting point for beginners who want to learn about PyTorch and deep learning.

Thank you for following along with this tutorial. You can find the complete code for this tutorial in my GitHub repository.

Don’t forget to subscribe to my YouTube channel for more tutorials like this. See you in the next tutorial!
