Modern large language models (LLMs) have made remarkable strides on diverse NLP tasks. Notable examples include GPT-3, PaLM, LLaMA, ChatGPT, and the more recently proposed GPT-4. These models hold great promise for human-like planning and decision-making because they can solve many tasks in zero-shot settings or with the help of a few examples. LLMs exhibit emergent abilities such as in-context learning, mathematical reasoning, and commonsense reasoning. Nevertheless, LLMs have built-in limitations: they cannot use external tools, cannot access up-to-date information, and cannot reason mathematically with precision.

An active research area addresses these limitations by augmenting language models with access to external tools and resources, and by integrating external tools and plug-and-play modular approaches. Recent work uses LLMs to build complex programs that solve logical reasoning problems more effectively, and it draws on powerful computational resources to improve mathematical reasoning. For instance, with the help of external knowledge sources and online search engines, LLMs can acquire real-time information and use domain-specific knowledge. Another current line of research, including ViperGPT, Visual ChatGPT, VisProg, and HuggingGPT, integrates several basic computer vision models so that LLMs can handle visual reasoning problems.

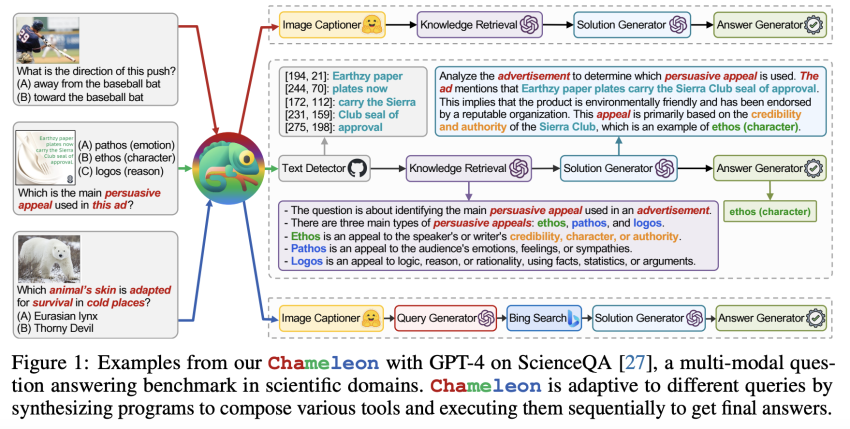

Despite substantial improvements, today's tool-augmented LLMs still face major obstacles when answering real-world queries. Most current methods are limited to a narrow set of tools or rely on specific tools for a given domain, which makes it hard to generalize them to different queries. Figure 1 illustrates this with the question "Which is the main persuasive appeal used in this ad?" Answering it requires four steps: 1) infer that the ad image contains text context and call a text detector to understand its semantics; 2) retrieve background knowledge that explains what a "persuasive appeal" is and how the different types differ; 3) come up with a solution using hints from the input question and the intermediate results of the earlier steps; and 4) finally, present the answer in a task-specific format.

By contrast, to answer the question "Which animal's skin is adapted for survival in cold places?", one may need to call different modules, such as an image captioner to parse the image and a web search engine to gather the domain knowledge needed to understand the scientific terminology. Researchers from UCLA and Microsoft Research present Chameleon, a plug-and-play compositional reasoning framework that uses large language models to address these challenges. Chameleon synthesizes programs that compose various tools to answer a wide range of queries.

Chameleon is a natural language planner built on top of an LLM. Unlike conventional approaches, it draws on a variety of tools, such as LLMs, off-the-shelf computer vision models, online search engines, Python functions, and rule-based modules designed for a particular purpose. Chameleon generates its programs using the in-context learning capabilities of LLMs and requires no training. Prompted with descriptions of each tool and examples of tool use, the planner infers the right sequence of tools to compose and execute in order to produce the final answer to a user query.
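The planning step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not Chameleon's actual prompt or code: the module names, their one-line descriptions, the single in-context example, and the helpers `build_planner_prompt` and `parse_plan` are all hypothetical. The LLM call itself is left out, so the planner's completion is supplied as a plain string.

```python
import ast
from typing import Dict, List

# Hypothetical module inventory; Chameleon's real prompt and descriptions differ.
TOOL_DESCRIPTIONS: Dict[str, str] = {
    "Image_Captioner": "Generates a caption describing the input image.",
    "Text_Detector": "Detects text that appears in the input image.",
    "Knowledge_Retrieval": "Retrieves background knowledge for the question.",
    "Bing_Search": "Searches the web for domain information.",
    "Solution_Generator": "Writes a step-by-step solution from the cached context.",
    "Answer_Generator": "Extracts the final answer in the task's format.",
}

# Hypothetical in-context demonstration: a question paired with a module sequence.
EXAMPLES = [
    (
        "Which animal's skin is adapted for survival in cold places?",
        ["Image_Captioner", "Bing_Search", "Solution_Generator", "Answer_Generator"],
    ),
]


def build_planner_prompt(question: str) -> str:
    """Assemble tool descriptions, demonstrations, and the new question into one prompt."""
    lines = ["You can call the following modules:"]
    for name, description in TOOL_DESCRIPTIONS.items():
        lines.append(f"- {name}: {description}")
    for example_question, plan in EXAMPLES:
        lines.append("")
        lines.append(f"Question: {example_question}")
        lines.append(f"Modules: {plan}")  # a list's repr, e.g. ['Image_Captioner', ...]
    lines.append("")
    lines.append(f"Question: {question}")
    lines.append("Modules:")  # the LLM is expected to continue with a list of module names
    return "\n".join(lines)


def parse_plan(llm_output: str) -> List[str]:
    """Parse a completion such as "['Text_Detector', 'Answer_Generator']".

    Unknown module names are dropped; malformed output yields an empty plan.
    """
    try:
        plan = ast.literal_eval(llm_output.strip())
    except (ValueError, SyntaxError, TypeError):
        return []
    if not isinstance(plan, list):
        return []
    return [name for name in plan if isinstance(name, str) and name in TOOL_DESCRIPTIONS]


if __name__ == "__main__":
    prompt = build_planner_prompt("Which is the main persuasive appeal used in this ad?")
    print(prompt)
    # A stand-in for what an LLM planner might return for the ad question.
    completion = "['Text_Detector', 'Knowledge_Retrieval', 'Solution_Generator', 'Answer_Generator']"
    print(parse_plan(completion))
```

Keeping the plan as a plain list of readable module names, rather than arbitrary code, makes it easy to validate: anything the planner emits that is not a known module is simply filtered out.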

Chameleon generates programs that resemble natural language, unlike earlier efforts that produced domain-specific programs. These programs are less error-prone, easier to debug, friendlier to users with little programming experience, and extensible to new modules. Each module in the program executes on the current query and context, returns a response of its choosing, and updates the query and cached context for the modules that run after it. Because modules are composed as a sequential program, updated queries and previously cached context remain available throughout the execution of subsequent modules. The authors demonstrate Chameleon's flexibility and effectiveness on two tasks: ScienceQA and TabMWP.
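The sequential execution with a shared cache can be sketched as a small Python interpreter. It is a hedged illustration, not Chameleon's implementation: `run_program`, the cache layout (`query`, `context`, `responses`), and the four stub modules for the Figure 1 ad question are hypothetical, and the stubs return canned strings instead of calling OCR or an LLM.

```python
from typing import Any, Callable, Dict, List

# Each module reads the shared cache, may update "query"/"context", and returns its response.
Module = Callable[[Dict[str, Any]], Any]


def run_program(program: List[str], modules: Dict[str, Module], query: str) -> Dict[str, Any]:
    """Execute modules in order, threading one shared cache through all of them."""
    cache: Dict[str, Any] = {"query": query, "context": "", "responses": {}}
    for name in program:
        response = modules[name](cache)
        cache["responses"][name] = response  # later modules can read earlier responses
    return cache


# Hypothetical stub modules for the ad question; real ones would call OCR or an LLM.
def text_detector(cache: Dict[str, Any]) -> str:
    detected = "Our cleaner removes 99% of stains in one wash."  # stubbed OCR result
    cache["context"] += f"\nDetected text: {detected}"
    return detected


def knowledge_retrieval(cache: Dict[str, Any]) -> str:
    knowledge = "Persuasive appeals: ethos (credibility), pathos (emotion), logos (reason)."
    cache["context"] += f"\nKnowledge: {knowledge}"
    return knowledge


def solution_generator(cache: Dict[str, Any]) -> str:
    # A real module would prompt an LLM with cache["query"] and cache["context"].
    if "99%" in cache["context"]:
        solution = "The ad cites a statistic, which is an appeal to reason."
    else:
        solution = "Not enough context to decide."
    cache["context"] += f"\nSolution: {solution}"
    return solution


def answer_generator(cache: Dict[str, Any]) -> str:
    # Formats the final answer from the solution cached by an earlier module.
    solution = cache["responses"].get("Solution_Generator", "")
    return "logos (reason)" if "reason" in solution else "unknown"


MODULES: Dict[str, Module] = {
    "Text_Detector": text_detector,
    "Knowledge_Retrieval": knowledge_retrieval,
    "Solution_Generator": solution_generator,
    "Answer_Generator": answer_generator,
}

if __name__ == "__main__":
    program = ["Text_Detector", "Knowledge_Retrieval", "Solution_Generator", "Answer_Generator"]
    result = run_program(program, MODULES, "Which is the main persuasive appeal used in this ad?")
    print(result["responses"]["Answer_Generator"])
```

Dropping `Text_Detector` from the program leaves the solution stub without the detected text, so the final answer becomes `unknown`. In toy form, this shows why the planner's choice and ordering of modules matter.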

TabMWP is a mathematical reasoning benchmark built on diverse tabular contexts, while ScienceQA is a multi-modal question-answering benchmark spanning many context formats and scientific topics. Together, the two benchmarks test how well Chameleon coordinates different tools across multiple input types and domains. Notably, Chameleon with GPT-4 achieves 86.54% accuracy on ScienceQA, outperforming the best-published few-shot model by 11.37%. On TabMWP, with GPT-4 as the underlying LLM, Chameleon improves on CoT GPT-4 by 7.97% and on the state-of-the-art model by 17.8%, reaching an overall accuracy of 98.78%.

Further analysis indicates that, compared with earlier LLMs such as ChatGPT, GPT-4 as the planner selects tools more consistently and rationally and can infer potential constraints from the instructions. Their main contributions are as follows: (1) They develop Chameleon, a plug-and-play compositional reasoning framework, to overcome the inherent limitations of large language models and tackle diverse reasoning tasks. (2) They effectively combine several technologies, including LLMs, off-the-shelf vision models, online search engines, Python functions, and rule-based modules, into a flexible and adaptive AI system that answers real-world queries. (3) They substantially advance the state of the art, demonstrating the framework's adaptability and effectiveness on two benchmarks, ScienceQA and TabMWP. The codebase is publicly available on GitHub.


Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.
