Natural Language Processing, or NLP, is one of the most intriguing fields in the ever-expanding world of artificial intelligence and machine learning. Recent technological breakthroughs in NLP have given rise to several remarkable models used in chat services, virtual assistants, language translators, and more, across many sectors. The most notable example is OpenAI's conversational dialogue agent, ChatGPT, which has recently taken the world by storm. The OpenAI chatbot gained around a million users within five days of its launch because of its astonishing ability to generate insightful and versatile human-like responses to user queries from a variety of fields. However, fully accessing these kinds of exceptional models has its drawbacks. Most of them can only be accessed through APIs, which are frequently constrained by cost, usage limits, and other technical restrictions. This often prevents researchers and developers from realizing the models' full potential and slows down research and development in NLP. Moreover, refining and improving such models calls for large, high-quality chat corpora, which are often limited in quantity and not usually publicly available.

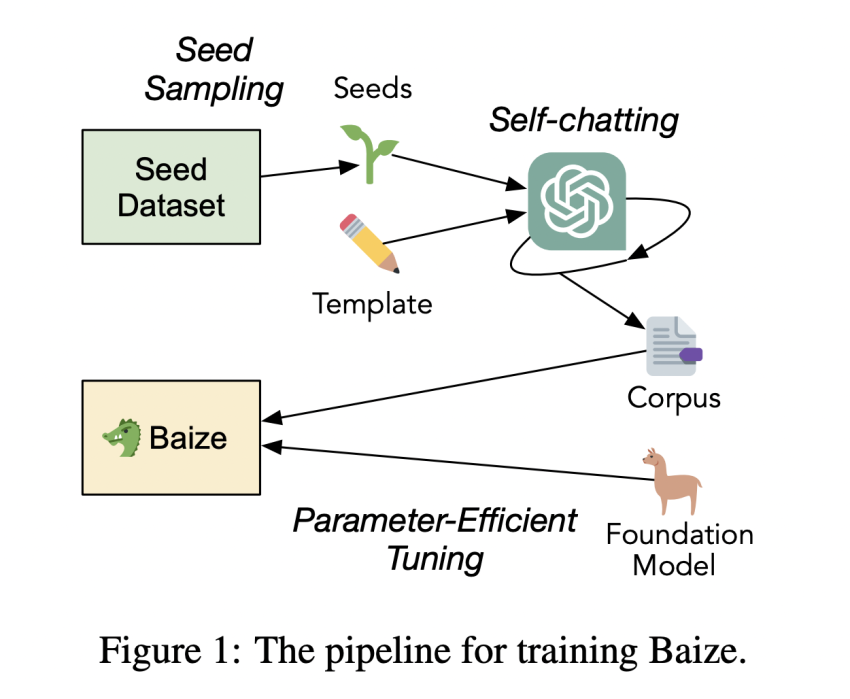

In response to this problem, a team of researchers from the University of California, San Diego, and Sun Yat-sen University, China, in collaboration with Microsoft Research, has developed a novel pipeline that uses ChatGPT to hold a conversation with itself in order to automatically generate a high-quality multi-turn chat corpus. The team's work also focuses on using a parameter-efficient tuning strategy to improve large language models with limited computational resources. Using their generated chat corpus, the researchers fine-tuned Meta's open-source large language model, LLaMA, resulting in a new model called Baize. This open-source chat model performs impressively and can run on just a single GPU, making it a practical choice for many researchers with limited compute.

To build the data collection pipeline for a multi-turn chat corpus, the researchers leveraged ChatGPT, which internally uses the GPT-3.5-Turbo model. They used a technique known as self-chat, in which ChatGPT converses with itself to simulate both the human and the AI responses. To do this, the researchers used a template that specifies the dialogue format and requirements, allowing the API to continuously generate transcripts for both sides. The template contains a “seed,” which is essentially a question or phrase that sets the topic of the conversation. The researchers went on to show that seeds drawn from domain-specific datasets can be used to strengthen a chat model on a particular topic. Baize leverages over 111k dialogues generated with ChatGPT and an additional 47k dialogue exchanges from the healthcare domain. This pipeline laid the groundwork for building the corpora used to fine-tune LLaMA into Baize, thereby improving its accuracy in multi-turn dialogues.
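To make the self-chat idea more concrete, here is a minimal sketch of the loop: build a prompt from a seed, let a model write both sides of the dialogue, then split the transcript into turns and keep only well-formed dialogues. The prompt wording, the `[|Human|]`/`[|AI|]` speaker markers, and the function names are illustrative assumptions rather than the authors' exact template. The `generate` callable stands in for a real ChatGPT API call, so the sketch runs offline.

```python
import re
from typing import Callable, Dict, List, Tuple

# Assumed speaker markers; the article does not give the exact template format.
HUMAN, AI = "[|Human|]", "[|AI|]"
_MARKER = re.compile(r"(\[\|Human\|\]|\[\|AI\|\])")


def build_self_chat_prompt(seed: str) -> str:
    """Hypothetical template asking one model to write BOTH sides of a dialogue."""
    return (
        "Write a multi-turn conversation between a human and an AI assistant. "
        f"Prefix each human turn with {HUMAN} and each assistant turn with {AI}. "
        f"The human starts the conversation with this topic: {seed}\n"
        f"{HUMAN} "
    )


def parse_transcript(text: str) -> List[Tuple[str, str]]:
    """Split a generated transcript into (speaker, utterance) pairs."""
    parts = _MARKER.split(text)  # [preamble, marker, text, marker, text, ...]
    turns = []
    for marker, utterance in zip(parts[1::2], parts[2::2]):
        utterance = utterance.strip()
        if utterance:
            turns.append(("human" if marker == HUMAN else "ai", utterance))
    return turns


def is_well_formed(turns: List[Tuple[str, str]]) -> bool:
    """Keep dialogues that start with the human and strictly alternate speakers."""
    if len(turns) < 2:
        return False
    expected = ("human", "ai")
    return all(speaker == expected[i % 2] for i, (speaker, _) in enumerate(turns))


def collect_dialogues(seeds: List[str], generate: Callable[[str], str]) -> List[Dict]:
    """Run self-chat once per seed; `generate` is a stand-in for an LLM API call."""
    corpus = []
    for seed in seeds:
        turns = parse_transcript(generate(build_self_chat_prompt(seed)))
        if is_well_formed(turns):
            corpus.append({"seed": seed, "turns": turns})
    return corpus
```

Swapping the seed list (for example, questions from a medical dataset) is all it takes to bias the generated corpus toward a domain, which mirrors the article's point about domain-specific seeds.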

The next step was to tune Baize using a parameter-efficient tuning method. Previous studies have shown that conventional fine-tuning requires enormous computational resources and large, high-quality datasets. However, not all researchers have access to unlimited compute, and most of these corpora are not publicly available. This is where parameter-efficient tuning helps. With this kind of fine-tuning, state-of-the-art language models can be adapted with minimal resources without affecting their performance. The researchers applied Low-Rank Adaptation (LoRA) to all layers of the LLaMA model, boosting its performance by increasing the number of tunable parameters and the model's capacity to adapt.
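The core idea behind LoRA is that the pretrained weight matrix `W` stays frozen and only a low-rank update `B @ A` is trained, so a layer computes `y = W x + (alpha / r) * B (A x)`. The pure-Python sketch below shows that forward pass and the parameter arithmetic. The 4096 x 4096 matrix size and rank `r = 8` are illustrative assumptions; the article does not state which rank or projection shapes Baize uses.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector,
                 alpha: float, r: int) -> Vector:
    """LoRA layer: frozen W (d_out x d_in) plus trainable B (d_out x r) @ A (r x d_in)."""
    base = matvec(W, x)                # frozen pretrained path
    delta = matvec(B, matvec(A, x))    # low-rank trainable path
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def trainable_params(d_out: int, d_in: int, r: int) -> Tuple[int, int]:
    """Trainable parameters for full fine-tuning vs. a rank-r LoRA update."""
    return d_out * d_in, r * (d_in + d_out)


# Example with assumed sizes: one 4096 x 4096 projection, rank 8.
full, lora = trainable_params(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Because `B` is usually initialized to zeros, the adapted layer starts out identical to the pretrained one. After training, `W + (alpha / r) * B @ A` can be merged into a single matrix, so inference costs nothing extra.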

The researchers initially considered using OpenAI's GPT-4 model to evaluate their model. Preliminary experiments, however, showed that GPT-4 prefers long responses even when they are uninformative, which makes it unsuitable for evaluation. As a result, the researchers are currently looking into human evaluation, and its results will be included in forthcoming revisions of their research paper. At present, Baize is available in 7B, 13B, and 30B parameter sizes, and a 60B version will be released soon. An online demo of the model can also be accessed here. The researchers added that the Baize model and data are for research use only. Commercial use is strictly prohibited because the parent model, LLaMA, has a non-commercial license. To further improve their models' performance, the researchers are exploring how to incorporate reinforcement learning into their work in the future.

The team's key contributions can be summarized as a reproducible pipeline for automatically generating a multi-turn chat corpus and a remarkable open-source chat model called Baize. The team strongly hopes that their work encourages the community to push research further and explore previously uncharted territory in NLP.

Check out the Paper, Repo and Demo. All credit for this research goes to the researchers on this project. Also, don't forget to join our 17k+ ML SubReddit, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

Khushboo Gupta is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology (IIT), Goa. She is passionate about Machine Learning, Natural Language Processing and Web Development. She enjoys learning more about the technical field by taking part in various challenges.
