NVIDIA today introduced a wave of cutting-edge AI research that will enable developers and artists to bring their ideas to life, whether still or moving, in 2D or 3D, hyperrealistic or fantastical.

About 20 NVIDIA Research papers advancing generative AI and neural graphics, including collaborations with around a dozen universities in the U.S., Europe and Israel, are headed to SIGGRAPH 2023, the premier computer graphics conference, taking place Aug. 6-10 in Los Angeles.

The papers include generative AI models that turn text into personalized images; inverse rendering tools that transform still images into 3D objects; neural physics models that use AI to simulate complex 3D elements with stunning realism; and neural rendering models that unlock new capabilities for generating real-time, AI-powered visual details.

Innovations by NVIDIA researchers are regularly shared with developers on GitHub and incorporated into products, including the NVIDIA Omniverse platform for building and operating metaverse applications and NVIDIA Picasso, a recently announced foundry for custom generative AI models for visual design. Decades of NVIDIA graphics research helped bring film-style rendering to games, like the recently released Cyberpunk 2077 Ray Tracing: Overdrive Mode, the world’s first path-traced AAA title.

The research advances presented this year at SIGGRAPH will help developers and enterprises rapidly generate synthetic data to populate virtual worlds for robotics and autonomous vehicle training. They’ll also enable creators in art, architecture, graphic design, game development and film to more quickly produce high-quality visuals for storyboarding, previsualization and even production.

AI With a Personal Touch: Customized Text-to-Image Models

Generative AI models that transform text into images are powerful tools for creating concept art or storyboards for films, video games and 3D virtual worlds. Text-to-image AI tools can turn a prompt like “children’s toys” into nearly infinite visuals a creator can use for inspiration, generating images of stuffed animals, blocks or puzzles.

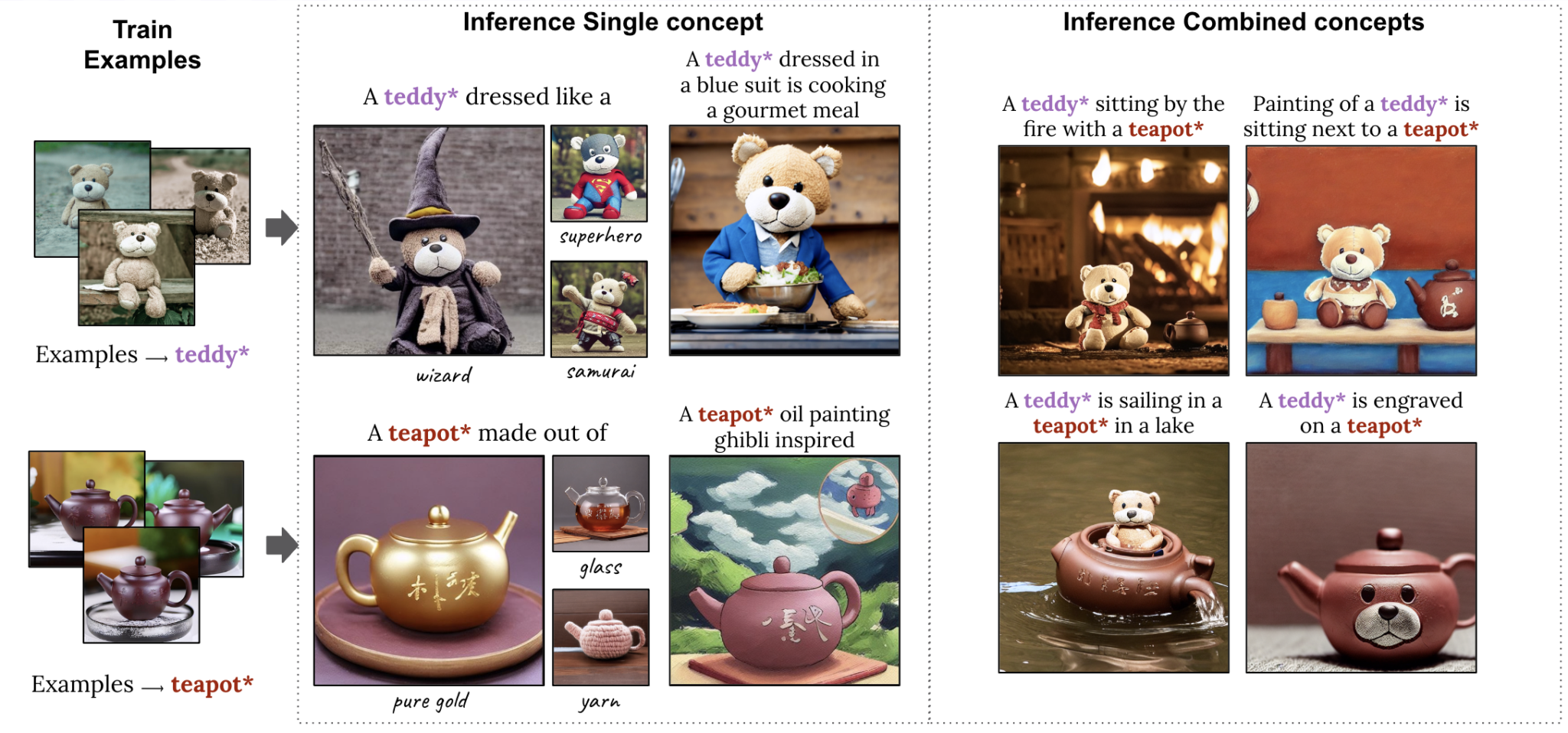

However, artists may have a particular subject in mind. A creative director for a toy brand, for example, could be planning an ad campaign around a new teddy bear and want to visualize the toy in different situations, such as a teddy bear tea party. To enable this level of specificity in the output of a generative AI model, researchers from Tel Aviv University and NVIDIA have two SIGGRAPH papers that let users provide image examples that the model quickly learns from.

One paper describes a technique that needs only a single example image to customize its output, accelerating the personalization process from minutes to roughly 11 seconds on a single NVIDIA A100 Tensor Core GPU, more than 60x faster than previous personalization approaches.
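
As a rough sanity check on those figures, the sketch below back-computes the baseline run time implied by a 60x speed-up over an 11-second run. The function name is hypothetical, and treating "60x" as a plain ratio of wall-clock times is an assumption rather than something the paper states here.

```python
def implied_baseline_seconds(new_seconds: float, speedup: float) -> float:
    """Earlier run time implied by a speed-up factor.

    Assumes the speed-up is a simple ratio of wall-clock times.
    """
    return new_seconds * speedup


baseline = implied_baseline_seconds(11.0, 60.0)
# 11 s at a 60x speed-up implies a baseline of 660 s, i.e. 11 minutes.
print(f"{baseline:.0f} s, or {baseline / 60:.0f} min")
```

Under that assumption, the result of about 11 minutes is consistent with the "from minutes to roughly 11 seconds" description.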

A second paper introduces a highly compact model called Perfusion, which takes a handful of concept images and lets users combine multiple personalized elements, such as a specific teddy bear and teapot, into a single AI-generated visual.

Serving in 3D: Advances in Inverse Rendering and Character Creation

Once a creator comes up with concept art for a virtual world, the next step is to render the environment and populate it with 3D objects and characters. NVIDIA Research is inventing AI techniques to accelerate this time-consuming process by automatically transforming 2D images and videos into 3D representations that creators can import into graphics applications for further editing.

A third paper, created with researchers at the University of California, San Diego, discusses technology that can generate and render a photorealistic 3D head-and-shoulders model from a single 2D portrait, a major breakthrough that makes 3D avatar creation and 3D video conferencing accessible with AI. The method runs in real time on a consumer desktop and can generate a photorealistic or stylized 3D telepresence using only conventional webcams or smartphone cameras.

A fourth project, a collaboration with Stanford University, brings lifelike motion to 3D characters. The researchers created an AI system that can learn a range of tennis skills from 2D video recordings of real tennis matches and apply this motion to 3D characters. The simulated tennis players can accurately hit the ball to target positions on a virtual court, and even play extended rallies with other characters.

Beyond the test case of tennis, this SIGGRAPH paper addresses the difficult challenge of producing 3D characters that can perform diverse skills with realistic movement, without the use of expensive motion-capture data.

Not a Hair Out of Place: Neural Physics Enables Realistic Simulations

Once a 3D character is generated, artists can layer in realistic details such as hair, a complex, computationally expensive challenge for animators.

Humans have an average of 100,000 hairs on their heads, with each reacting dynamically to an individual’s motion and the surrounding environment. Traditionally, creators have used physics formulas to calculate hair movement, simplifying or approximating its motion based on the resources available. That’s why virtual characters in a big-budget film sport much more detailed heads of hair than real-time video game avatars.

A fifth paper showcases a method that can simulate tens of thousands of hairs in high resolution and in real time using neural physics, an AI technique that teaches a neural network to predict how an object would move in the real world.

https://www.youtube.com/watch?v=ehesid36d4E

The team’s novel approach for accurate simulation of full-scale hair is specifically optimized for modern GPUs. It offers significant performance leaps compared to state-of-the-art, CPU-based solvers, reducing simulation times from multiple days to merely hours, while also boosting the quality of hair simulations possible in real time. This technique finally enables both accurate and interactive physically based hair grooming.

Neural Rendering Brings Film-Quality Detail to Real-Time Graphics

After an environment is filled with animated 3D objects and characters, real-time rendering simulates the physics of light reflecting through the virtual scene. Recent NVIDIA research shows how AI models for textures, materials and volumes can deliver film-quality, photorealistic visuals in real time for video games and digital twins.

NVIDIA invented programmable shading more than two decades ago, enabling developers to customize the graphics pipeline. In these latest neural rendering innovations, researchers extend programmable shading code with AI models that run deep inside NVIDIA’s real-time graphics pipelines.

In a sixth SIGGRAPH paper, NVIDIA will present neural texture compression that delivers up to 16x more texture detail without taking additional GPU memory. Neural texture compression can substantially increase the realism of 3D scenes, as seen in the image below, which demonstrates how neural-compressed textures (right) capture sharper detail than previous formats, where the text remains blurry (center).
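
To put the 16x figure in more familiar terms, here is a minimal sketch that converts a texel-count factor into a per-axis resolution factor. It assumes "texture detail" means the total number of texels, which the article does not define, and the helper names are hypothetical.

```python
import math


def per_axis_scale(texel_factor: float) -> float:
    """Resolution multiplier along each axis of a 2D texture.

    Assumes the factor applies to total texel count (width * height).
    """
    return math.sqrt(texel_factor)


def upscaled_size(width: int, height: int, texel_factor: float) -> tuple[int, int]:
    """Texture dimensions after scaling the texel count by texel_factor."""
    scale = per_axis_scale(texel_factor)
    return round(width * scale), round(height * scale)


# Under this reading, 16x the texels means 4x the resolution per axis.
print(upscaled_size(1024, 1024, 16))  # (4096, 4096)
```

Read this way, a 1024 x 1024 texture's memory budget would hold a 4096 x 4096 texture, though the actual gain depends on the content and the format being compared.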

A related paper announced last year is now available in early access as NeuralVDB, an AI-enabled data compression technique that reduces by 100x the memory needed to represent volumetric data such as smoke, fire, clouds and water.

NVIDIA also released today more details about neural materials research that was shown in the most recent NVIDIA GTC keynote. The paper describes an AI system that learns how light reflects from photoreal, many-layered materials, reducing the complexity of these assets down to small neural networks that run in real time, enabling up to 10x faster shading.

The level of realism can be seen in this neural-rendered teapot, which accurately represents the ceramic, the imperfect clear-coat glaze, fingerprints, smudges and even dust.

More Generative AI and Graphics Research

These are just the highlights. Read more about all the NVIDIA papers at SIGGRAPH. NVIDIA will also present six courses, four talks and two Emerging Technology demos at the conference, with topics including path tracing, telepresence and diffusion models for generative AI.

NVIDIA Research has hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics.
