
Training an LLM from scratch is hard because it takes so long to understand why fine-tuned models fail: iteration cycles for fine-tuning on small datasets are typically measured in months. Prompt-tuning iterations, by contrast, take seconds, but performance levels off after a few hours. The gigabytes of data in a warehouse cannot be squeezed into a prompt.
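To make the size mismatch concrete, here is a rough back-of-the-envelope sketch. Both constants are assumptions chosen for illustration (roughly four bytes of English text per token, and a hypothetical 4,096-token context window); neither figure comes from Lamini.

```python
# Rough estimate of how much of a data warehouse fits into one prompt.
# Both constants are illustrative assumptions, not figures from the article.
BYTES_PER_TOKEN = 4            # common rule of thumb for English text
CONTEXT_WINDOW_TOKENS = 4096   # hypothetical model context size


def tokens_in(num_bytes: int) -> int:
    """Approximate token count for a given amount of raw text."""
    return num_bytes // BYTES_PER_TOKEN


def fraction_that_fits(num_bytes: int) -> float:
    """Fraction of the data that fits in a single prompt (capped at 1.0)."""
    total = tokens_in(num_bytes)
    if total == 0:
        return 1.0
    return min(1.0, CONTEXT_WINDOW_TOKENS / total)


one_gigabyte = 10**9
print(tokens_in(one_gigabyte))           # 250000000
print(fraction_that_fits(one_gigabyte))  # a tiny fraction of the data
```

Under these assumptions, a single gigabyte of text is hundreds of millions of tokens, so only a sliver of it could ever appear in one prompt.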

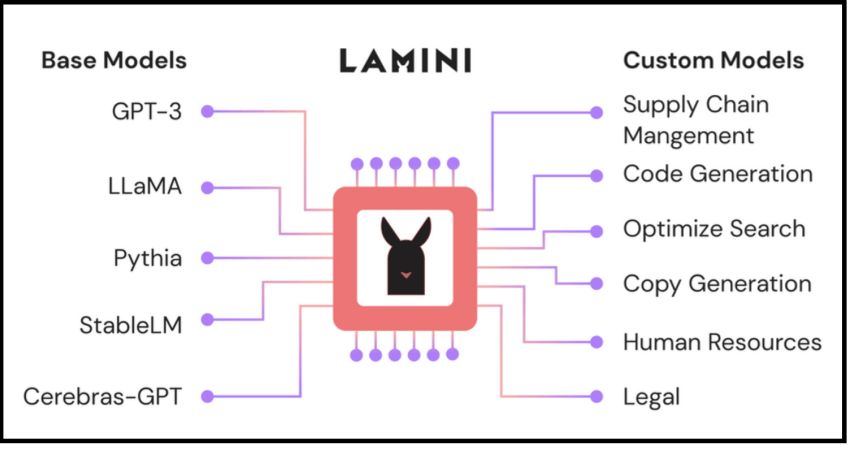

With only a few lines of code from the Lamini library, any developer, not just those skilled in machine learning, can train high-performing LLMs that are on par with ChatGPT on massive datasets. Released by Lamini.ai, the library's optimizations go beyond what programmers can currently access, ranging from complex techniques like RLHF to simple ones like hallucination suppression. From OpenAI's models to open-source ones on HuggingFace, Lamini makes it simple to compare different base models with a single line of code.

Tools for developing your LLM:

- The Lamini library, which supports fine-tuned prompts and text outputs.

- Easy fine-tuning and RLHF using the powerful Lamini library.

- The first hosted data generator approved for commercial use, built specifically to create the data needed to train instruction-following LLMs.

- A free and open-source LLM that can follow instructions, built with the tools above and minimal programming effort.

The base models' comprehension of English is adequate for consumer use cases. However, when teaching them your industry's jargon and standards, prompt tuning is not always enough, and users will need to build their own LLM.

An LLM can handle use cases like ChatGPT's by following these steps:

- Prompt-tune ChatGPT or another model. The team optimized the best possible prompt for easy use. The Lamini library's APIs let you prompt-tune quickly across models and switch between OpenAI and open-source models with a single line of code.

- Generate a large amount of input-output data. These pairs demonstrate how the model should respond to the data it receives, whether in English or JSON. The team released a repository with a few lines of code that uses the Lamini library to produce 50k data points from as few as 100. The repository also includes a publicly available 50k dataset.

- Fine-tune a starting model on your extensive data. In addition to the data generator, the team also shares a Lamini-tuned LLM trained on the synthetic data.

- Put the fine-tuned model through RLHF. Lamini eliminates the need for a sizable machine learning (ML) and human labeling (HL) staff to run RLHF.

- Deploy it in the cloud. Simply call the API's endpoint in your application.
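The first two steps above can be sketched in plain Python. This is a minimal illustration, not Lamini's API: the `Backend` type, the stand-in backends, the `BACKENDS` registry, and `expand_seeds` are all hypothetical names invented here. In practice, a real model client would replace the stand-in functions.

```python
import random
from typing import Callable, Dict, List

# A "backend" is any function mapping a prompt string to a completion string.
# These stand-ins are hypothetical; a real client would call a hosted or local model.
Backend = Callable[[str], str]


def echo_backend(prompt: str) -> str:
    """Stand-in for one model: returns the prompt with a tag."""
    return f"[echo] {prompt}"


def upper_backend(prompt: str) -> str:
    """Stand-in for a different model: returns the prompt upper-cased."""
    return f"[upper] {prompt.upper()}"


BACKENDS: Dict[str, Backend] = {"echo": echo_backend, "upper": upper_backend}


def expand_seeds(
    seeds: List[dict], target: int, backend: Backend, rng: random.Random
) -> List[dict]:
    """Grow a small set of input-output pairs to `target` pairs.

    Each new pair asks the backend for a variation of a randomly chosen
    seed input, then asks it for a response to that variation.
    """
    data = list(seeds)
    while len(data) < target:
        seed = rng.choice(seeds)
        new_input = backend(f"Write a question similar to: {seed['input']}")
        new_output = backend(f"Answer: {new_input}")
        data.append({"input": new_input, "output": new_output})
    return data


seeds = [
    {"input": "What is fine-tuning?", "output": "Adapting a pretrained model to new data."},
    {"input": "What is RLHF?", "output": "Tuning a model with human preference feedback."},
]

MODEL_NAME = "echo"  # change this one line to switch backends
dataset = expand_seeds(seeds, target=10, backend=BACKENDS[MODEL_NAME], rng=random.Random(0))
print(len(dataset))  # 10
```

Keeping the backend behind a single callable type is what makes the one-line switch possible: the generation loop never needs to know which model produced the text.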

After training the Pythia base model on 37k generated instructions (filtered down from 70k), the team released an open-source instruction-following LLM. Lamini offers the benefits of RLHF and fine-tuning without the hassle of running RLHF yourself. Soon, the library is expected to handle the entire process.
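The article does not say how the 70k generated instructions were filtered down to 37k. As an assumption-laden sketch of what such a step might look like, the hypothetical `filter_pairs` function below drops pairs with empty instructions, very short responses, or duplicate instructions. The criteria and the `min_output_chars` threshold are illustrative guesses, not the team's method.

```python
from typing import Iterable, List


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so near-identical text compares equal."""
    return " ".join(text.lower().split())


def filter_pairs(pairs: Iterable[dict], min_output_chars: int = 10) -> List[dict]:
    """Keep pairs with a non-empty, previously unseen instruction
    and a response of at least `min_output_chars` characters."""
    seen = set()
    kept = []
    for pair in pairs:
        instruction = pair.get("input", "").strip()
        response = pair.get("output", "").strip()
        if not instruction or len(response) < min_output_chars:
            continue  # unusable pair
        key = normalize(instruction)
        if key in seen:
            continue  # duplicate instruction
        seen.add(key)
        kept.append({"input": instruction, "output": response})
    return kept


raw = [
    {"input": "Explain RLHF.", "output": "RLHF tunes a model using human preference feedback."},
    {"input": "explain  rlhf.", "output": "A duplicate instruction with different casing."},
    {"input": "Define LLM.", "output": ""},
    {"input": "What is a prompt?", "output": "The text given to a model as input."},
]
clean = filter_pairs(raw)
print(len(clean))  # 2
```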

The team is excited to simplify the training process for engineering teams and significantly boost the performance of LLMs. They hope that more people will be able to build these models, beyond tinkering with prompts, if iteration cycles can be made faster and more efficient.


Tanushree Shenwai is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Bhubaneswar. She is a data science enthusiast with a keen interest in the applications of artificial intelligence across various fields. She is passionate about exploring new advancements in technology and their real-life applications.
