[ad_1]

3D scene modeling has traditionally been a time-consuming treatment reserved for persons with domain skills. Despite the fact that a sizable collection of 3D elements is out there in the public domain, it is unheard of to discover a 3D scene that matches the user’s necessities. For the reason that of this, 3D designers at times commit hrs or even times to modeling person 3D objects and assembling them into a scene. Building 3D creation clear-cut even though preserving handle over its factors would enable near the hole in between experienced 3D designers and the basic public (e.g., sizing and placement of personal objects).

The accessibility of 3D scene modeling has not long ago improved for the reason that of performing on 3D generative styles. Promising success for 3D object synthesis have been obtained making use of 3Daware generative adversarial networks (GANs), indicating a 1st phase towards combining established products into scenes. GANs, on the other hand, are specialized to a one merchandise class, which restricts the variety of results and can make scene-degree text-to-3D conversion challenging. In distinction, textual content-to-3D technology employing diffusion products lets customers to urge the development of 3D objects from a broad assortment of types.

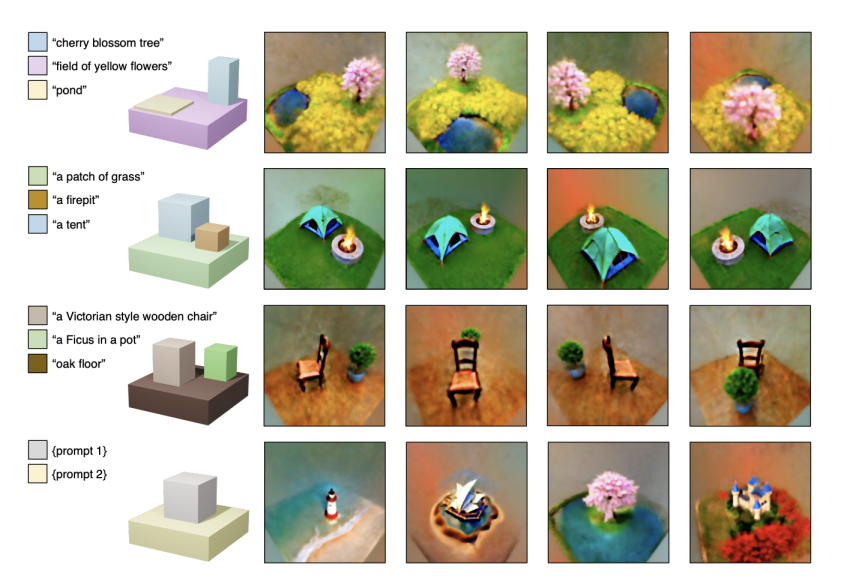

Recent exploration utilizes a one-term prompt to impose world conditioning on rendered views of a differentiable scene representation, utilizing strong 2D picture diffusion priors learned on online-scale information. These tactics may develop excellent item-centric generations, but they require assist to produce scenes with quite a few unique capabilities. Worldwide conditioning even more restricts controllability considering the fact that person input is minimal to a solitary text prompt, and there is no way to affect the structure of the produced scene. Researchers from Stanford present a procedure for compositional text-to-image output employing diffusion types termed locally conditioned diffusion.

Their recommended procedure builds cohesive 3D sets with control more than the measurement and positioning of unique objects while employing text prompts and 3D bounding bins as input. Their approach applies conditional diffusion phases selectively to particular sections of the picture using an enter segmentation mask and matching textual content prompts, producing outputs that observe the consumer-specified composition. By incorporating their strategy into a textual content-to-3D making pipeline based on rating distillation sampling, they can also build compositional text-to-3D scenes.

They exclusively deliver the subsequent contributions:

• They current domestically conditioned diffusion, a procedure that offers 2D diffusion versions a lot more compositional flexibility.

• They suggest crucial digital camera pose sampling methodologies, critical for a compositional 3D era.

• They introduce a process for compositional 3D synthesis by adding regionally conditioned diffusion to a rating distillation sampling-dependent 3D making pipeline.

Verify out the Paper and Venture. All Credit score For This Investigate Goes To the Researchers on This Job. Also, don’t fail to remember to join our 16k+ ML SubReddit, Discord Channel, and Electronic mail Newsletter, in which we share the most current AI investigate news, cool AI tasks, and extra.

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Details Science and Synthetic Intelligence from the Indian Institute of Engineering(IIT), Bhilai. He spends most of his time doing work on jobs aimed at harnessing the electric power of equipment discovering. His exploration curiosity is impression processing and is passionate about creating options about it. He enjoys to join with persons and collaborate on exciting initiatives.

[ad_2]

Supply url