Google sees AI as a foundational and transformational technology, with recent advances in generative AI technologies, such as LaMDA, PaLM, Imagen, Parti, MusicLM, and similar machine learning (ML) models, some of which are now being incorporated into our products. This transformative potential requires us to be responsible not only in how we advance our technology, but also in how we envision which technologies to build, and how we assess the social impact AI and ML-enabled technologies have on the world. This endeavor necessitates fundamental and applied research with an interdisciplinary lens that engages with, and accounts for, the social, cultural, economic, and other contextual dimensions that shape the development and deployment of AI systems. We must also understand the range of possible impacts that ongoing use of such technologies may have on vulnerable communities and broader social systems.

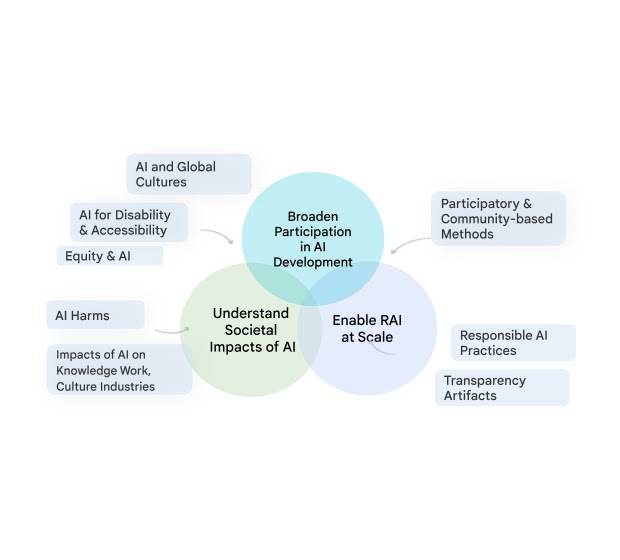

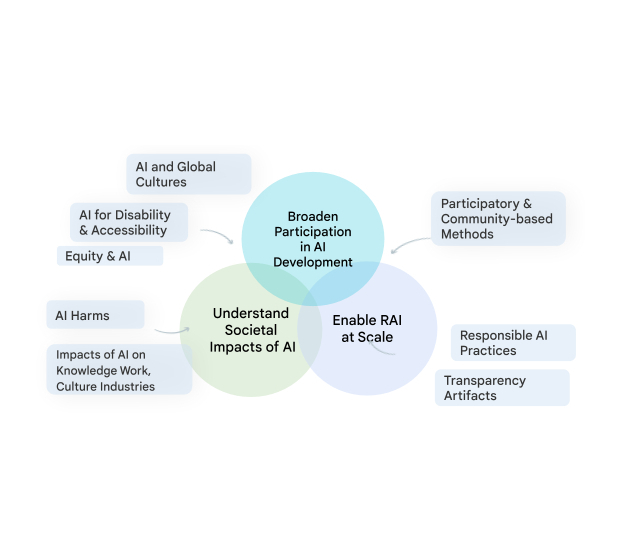

Our team, Technology, AI, Society, and Culture (TASC), is addressing this critical need. Research on the societal impacts of AI is complex and multi-faceted; no one disciplinary or methodological perspective can alone provide the diverse insights needed to grapple with the social and cultural implications of ML technologies. TASC thus leverages the strengths of an interdisciplinary team, with backgrounds ranging from computer science to social science, digital media, and urban science. We use a multi-method approach with qualitative, quantitative, and mixed methods to critically examine and shape the social and technical processes that underpin and surround AI technologies. We focus on participatory, culturally-inclusive, and intersectional equity-oriented research that brings impacted communities to the foreground. Our work advances Responsible AI (RAI) in areas such as computer vision, natural language processing, health, and general-purpose ML models and applications. Below, we share examples of our approach to Responsible AI and where we are headed in 2023.

Theme 1: Culture, communities, & AI

One of our key areas of research is the advancement of methods to make generative AI technologies more inclusive of and valuable to people globally, through community-engaged and culturally-inclusive approaches. Toward this aim, we see communities as experts in their context, recognizing their deep knowledge of how technologies can and should impact their own lives. Our research champions the importance of embedding cross-cultural considerations throughout the ML development pipeline. Community engagement enables us to shift how we incorporate knowledge of what’s most important throughout this pipeline, from dataset curation to evaluation. This also enables us to understand and account for the ways in which technologies fail and how specific communities might experience harm. Based on this understanding, we have created responsible AI evaluation strategies that are effective in recognizing and mitigating biases along multiple dimensions.

Our work in this area is vital to ensuring that Google’s technologies are safe for, work for, and are useful to a diverse set of stakeholders around the world. For example, our research on user attitudes towards AI, responsible interaction design, and fairness evaluations with a focus on the Global South demonstrated the cross-cultural differences in the impact of AI and contributed resources that enable culturally-situated evaluations. We are also building cross-disciplinary research communities to examine the relationship between AI, culture, and society, through our recent and upcoming workshops on Cultures in AI/AI in Culture, Ethical Considerations in Creative Applications of Computer Vision, and Cross-Cultural Considerations in NLP.

Our recent research has also sought out the perspectives of particular communities who are known to be less represented in ML development and applications. For example, we have investigated gender bias, both in natural language and in contexts such as gender-inclusive health, drawing on our research to develop more accurate evaluations of bias so that anyone developing these technologies can identify and mitigate harms for people with queer and non-binary identities.

Theme 2: Enabling Responsible AI throughout the development lifecycle

We work to enable RAI at scale by establishing industry-wide best practices for RAI across the development pipeline, and by ensuring our technologies verifiably incorporate those best practices by default. This applied research includes responsible data production and analysis for ML development, and systematically advancing tools and practices that support practitioners in meeting key RAI goals like transparency, fairness, and accountability. Extending earlier work on Data Cards, Model Cards, and the Model Card Toolkit, we released the Data Cards Playbook, providing developers with methods and tools to document appropriate uses and essential facts related to a dataset. Because ML models are often trained and evaluated on human-annotated data, we also advance human-centric research on data annotation. We have developed frameworks to document annotation processes and methods to account for rater disagreement and rater diversity. These methods enable ML practitioners to better ensure diversity in the annotation of datasets used to train models, by identifying current barriers and re-envisioning data work practices.

Future directions

We are now working to further broaden participation in ML model development, through approaches that embed a diversity of cultural contexts and voices into technology design, development, and impact assessment to ensure that AI achieves societal goals. We are also redefining responsible practices that can handle the scale at which ML technologies operate in today’s world. For example, we are developing frameworks and structures that can enable community engagement within industry AI research and development, including community-centered evaluation frameworks, benchmarks, and dataset curation and sharing.

In particular, we are furthering our prior work on understanding how NLP language models may perpetuate bias against people with disabilities, extending this research to address other marginalized communities and cultures and to include image, video, and other multimodal models. Such models may contain tropes and stereotypes about particular groups or may erase the experiences of specific individuals or communities. Our efforts to identify sources of bias within ML models will lead to better detection of these representational harms and will support the creation of fairer and more inclusive systems.

TASC is about studying all the touchpoints between AI and people, from individuals and communities to cultures and society. For AI to be culturally-inclusive, equitable, accessible, and reflective of the needs of impacted communities, we must take on these challenges with inter- and multidisciplinary research that centers the needs of those communities. Our research studies will continue to explore the interactions between society and AI, furthering the discovery of new ways to develop and evaluate AI so that we can build more robust and culturally-situated AI systems.

Acknowledgements

We would like to thank everyone on the team who contributed to this blog post. In alphabetical order by last name: Cynthia Bennett, Eric Corbett, Aida Mostafazadeh Davani, Emily Denton, Sunipa Dev, Fernando Diaz, Mark Díaz, Shaun Kane, Shivani Kapania, Michael Madaio, Vinodkumar Prabhakaran, Rida Qadri, Renee Shelby, Ding Wang, and Andrew Zaldivar. Also, we would like to thank Toju Duke and Marian Croak for their valuable feedback and suggestions.
