ChatGPT is trending, and tens of millions of people are using it every day. With its impressive ability to imitate humans in tasks such as question answering, generating unique and creative content, summarizing large amounts of text, completing code, and powering useful virtual assistants, ChatGPT is making our lives easier. Developed by OpenAI, ChatGPT is based on the transformer architecture of GPT 3.5 (Generative Pre-Trained Transformer) and GPT 4. GPT 4, the latest version of the language models released by OpenAI, is multimodal: unlike earlier versions, it accepts input in the form of both text and images. Other Large Language Models (LLMs) such as PaLM, LLaMA, and BERT are also being used in applications across domains including healthcare, e-commerce, finance, and education.

In a recently released research paper, a team of researchers has highlighted the gap between the impressive performance of LLMs like GPT on complex tasks and their struggles with simple ones. To probe the limits and capabilities of Transformer LLMs, the team ran experiments on three representative compositional tasks: multi-digit multiplication, logic grid puzzles, and a classic dynamic programming problem. These tasks require breaking a problem down into smaller steps and combining those steps to produce an exact solution.

To study the limits of Transformers on compositional tasks that require multi-step reasoning, the authors propose two hypotheses. The first is that Transformers solve tasks by linearizing multi-step reasoning into path matching, relying on pattern matching and shortcut learning rather than actually understanding and applying the underlying computational rules needed to reach correct solutions. This approach enables fast and accurate predictions on patterns similar to those seen during training but fails to generalize to uncommon, complex examples. The second hypothesis is that Transformers may have inherent limitations when trying to solve high-complexity compositional tasks with unique patterns. Early computational errors can propagate and compound in later steps, preventing the models from arriving at the correct solution.
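The compounding effect in the second hypothesis can be illustrated with a back-of-the-envelope calculation. The sketch below is not taken from the paper: it assumes, purely for illustration, that every reasoning step is correct independently with the same probability, so the chance of a fully correct chain is that probability raised to the number of steps. The function name `chain_accuracy` is hypothetical.

```python
def chain_accuracy(step_accuracy: float, num_steps: int) -> float:
    """Probability that all steps in a chain are correct.

    Simplifying assumption (not from the paper): each step succeeds
    independently with the same probability `step_accuracy`.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_accuracy ** num_steps


if __name__ == "__main__":
    # Show how a small per-step error rate erodes end-to-end accuracy.
    for steps in (1, 10, 50, 100):
        print(steps, round(chain_accuracy(0.99, steps), 3))
```

Under this toy model, a step that is right 99% of the time still yields a fully correct 100-step chain only about 37% of the time, which gives some intuition for why longer compositional problems could be disproportionately hard.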

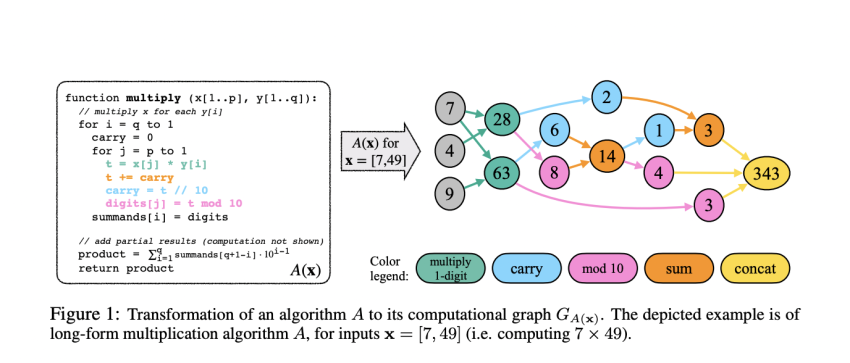

To investigate the two hypotheses, the authors formulate the compositional tasks as computation graphs. These graphs decompose the process of solving a problem into smaller, more manageable submodular functional steps, which enables structured measures of problem complexity and the verbalization of computation steps as input sequences to language models. They also use information gain to predict the patterns that models would likely learn from the underlying task distribution, without running full computations within the graph.
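To make the idea of a computation graph concrete, here is a minimal sketch for multi-digit multiplication. It is an illustration only, not the authors' construction: the `Node` class, the `build_multiplication_graph` and `depth` helpers, and the choice to fold one-digit products into a running sum are all assumptions made for this example. Node count and depth stand in for the kind of structured complexity measures described above.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    op: str                      # "input", "mul", or "add"
    value: int
    parents: list = field(default_factory=list)


def digits(n: int) -> list:
    """Digits of a non-negative integer, least significant first."""
    return [int(c) for c in reversed(str(n))]


def build_multiplication_graph(x: int, y: int):
    """Decompose x * y into one-digit products and pairwise additions.

    Returns the root node (holding the final product) and all nodes.
    """
    nodes = []

    def add_node(op, value, parents=()):
        node = Node(op, value, list(parents))
        nodes.append(node)
        return node

    x_digits = [add_node("input", d) for d in digits(x)]
    y_digits = [add_node("input", d) for d in digits(y)]

    total = None
    for j, yd in enumerate(y_digits):
        for i, xd in enumerate(x_digits):
            # One-digit product, shifted to its place value.
            prod = add_node("mul", xd.value * yd.value * 10 ** (i + j), [xd, yd])
            # Fold the product into the running sum.
            if total is None:
                total = prod
            else:
                total = add_node("add", total.value + prod.value, [total, prod])
    return total, nodes


def depth(node: Node) -> int:
    """Length of the longest path from any input to this node."""
    if not node.parents:
        return 0
    return 1 + max(depth(p) for p in node.parents)


if __name__ == "__main__":
    root, nodes = build_multiplication_graph(47, 86)
    print(root.value, len(nodes), depth(root))
```

In this sketch, both the number of nodes and the depth grow with the number of digits, so larger operands produce measurably larger graphs even though each individual step stays trivial.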

Based on the empirical findings, the authors propose that Transformers handle compositional problems by reducing multi-step reasoning to linearized subgraph matching. They provide theoretical arguments based on abstract multi-step reasoning problems, which show that Transformers' performance rapidly deteriorates as task complexity increases. This suggests that the models may already be constrained in their ability to handle compositional problems of great complexity.

In conclusion, the empirical and theoretical results suggest that Transformers' performance is driven mostly by pattern matching and subgraph matching rather than by a thorough understanding of the underlying reasoning processes, which also supports the idea that Transformers would find it difficult to perform increasingly complex tasks.

Check out the paper.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with strong analytical and critical thinking skills, along with a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.
