
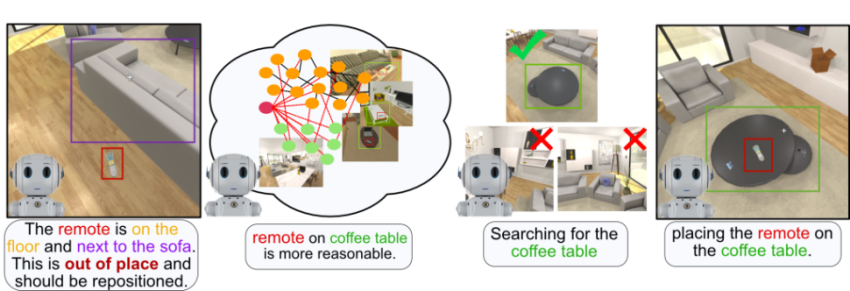

Illustration of embodied commonsense reasoning. A robot proactively identifies a remote on the floor and recognizes, without instruction, that it is out of place. Then, the robot figures out where to place it in the scene and manipulates it there.

For robots to operate effectively in the world, they need to be more than explicit step-by-step instruction followers. Robots should take action when there is a clear violation of the normal state of things and be able to infer relevant context from partial instructions. Consider a situation where a home robot identifies a remote control that has fallen to the kitchen floor. The robot should not need to wait until a human instructs it to "pick the remote control off the floor and place it on the coffee table". Instead, the robot should understand that the remote on the floor is clearly out of place, and act to pick it up and place it in a reasonable location. Even if a human were to spot the remote control first and instruct the agent to "put away the remote that is on the living room floor", the robot should not require a second instruction for where to put the remote, but should instead infer from experience that a reasonable location would be, for example, on the coffee table. After all, it would become tiring for a home robot user to have to specify every desire in excruciating detail (think about specifying, for every object you want the robot to move, an instruction such as "pick up the shoes beneath the coffee table and place them next to the door, aligned with the wall").

The type of reasoning that would allow for such partial or self-generated instruction following involves a deep sense of how things in the world (objects, physics, other agents, etc.) ought to behave. Reasoning and acting of this kind are all aspects of embodied commonsense reasoning, and they are vastly important for robots to act and interact seamlessly in the physical world.

There has been much work on embodied agents that follow detailed step-by-step instructions, but less on embodied commonsense reasoning, where the task involves learning how to perceive and act without explicit instruction. One task in which to study embodied commonsense reasoning is tidying up, where the agent must identify objects that are out of their natural locations and act to bring them to plausible locations. This task combines many desirable abilities of intelligent agents with commonsense reasoning about object placements. The agent must search likely locations for displaced objects, recognize when objects are out of their natural locations in the context of the current scene, and figure out where to reposition them so that they are in proper locations, all while intelligently navigating and manipulating.

In our recent work, we propose TIDEE, an embodied agent that can tidy up never-before-seen rooms without any explicit instruction. TIDEE is the first of its kind in its ability to search a scene for out of place objects, infer where in the scene to reposition them, and effectively manipulate the objects to the inferred locations. We'll walk through how TIDEE does this in a later section, but first let's describe how we create a dataset to train and test our agent on the task of tidying up.

Creating messy homes

To create clean and messy scenes from which our agent can learn what constitutes a tidy scene and what constitutes a messy one, we use a simulation environment called AI2-THOR. AI2-THOR is an interactive 3D environment of indoor scenes that allows objects to be picked up and moved around. The simulator comes with 120 scenes of kitchens, bathrooms, living rooms, and bedrooms, with over 116 object categories (and many more object instances) scattered throughout. Each scene comes with a default initialization of object placements that were carefully chosen by humans to be highly organized and "neat". These default object locations make up our "tidy" scenes, providing our agent with examples of objects in their natural locations. To create messy scenes, we apply forces with a random direction and magnitude to a subset of the objects (we "throw" the objects around) so that they end up in unusual locations and poses. You can see below some examples of objects that have been moved out of place.
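The "throwing" step can be sketched as follows. This is a minimal illustration, not the TIDEE data-generation code: the helper `make_messy_pushes`, the fraction of objects perturbed, and the force range are hypothetical, and the sketch only samples force vectors rather than calling the simulator. It assumes y is the vertical axis, as in Unity-based AI2-THOR.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Push:
    object_id: str
    direction: tuple  # unit vector (x, y, z); y is assumed to be "up"
    magnitude: float  # force magnitude in arbitrary simulator units (assumed)


def random_upward_direction(rng):
    """Sample a unit vector uniformly on the upper hemisphere (y >= 0)."""
    while True:
        # Rejection-sample a point inside the unit ball, then normalize it:
        # this gives a direction uniformly distributed on the sphere.
        x, y, z = (rng.uniform(-1.0, 1.0) for _ in range(3))
        norm = math.sqrt(x * x + y * y + z * z)
        if 1e-6 < norm <= 1.0:
            # Make y non-negative so objects are tossed rather than pushed
            # into the floor (an assumption for this sketch).
            return (x / norm, abs(y) / norm, z / norm)


def make_messy_pushes(object_ids, fraction=0.3, min_mag=50.0, max_mag=200.0, seed=None):
    """Hypothetical helper: pick a random subset of objects and a random push for each."""
    rng = random.Random(seed)
    ids = list(object_ids)
    if not ids:
        return []
    k = min(len(ids), max(1, round(fraction * len(ids))))
    chosen = rng.sample(ids, k)
    return [Push(oid, random_upward_direction(rng), rng.uniform(min_mag, max_mag)) for oid in chosen]


# Perturb roughly 30% of ten placeholder objects.
pushes = make_messy_pushes([f"obj{i}" for i in range(10)], seed=0)
```

Each `Push` would then be translated into whatever force-application action the simulator provides; that mapping is left out here because it depends on the simulator version.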

Next, let's see how TIDEE learns from this dataset to tidy up rooms.

How does TIDEE work?

We give our agent a depth and RGB sensor for perceiving the scene. From this input, the agent must navigate around, detect objects, pick them up, and place them. The goal of the tidying task is to rearrange a messy room back to a tidy state.

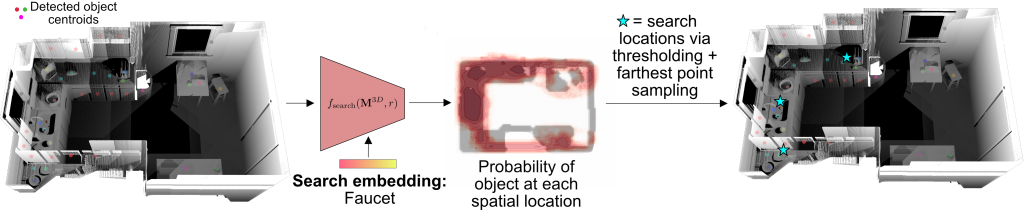

TIDEE tidies up rooms in a few phases. In the first phase, TIDEE explores the room and runs an out of place object detector at every time step until one is identified. Then, TIDEE navigates to the object and picks it up. In the second phase, TIDEE uses graph inference over its joint external graph memory and scene graph to infer a plausible receptacle within the scene on which to place the object. If TIDEE has not identified that receptacle in a previous time step, it then explores the scene guided by a visual search network that suggests where the receptacle may be found. For navigation and keeping track of objects, TIDEE maintains an obstacle map of the scene and stores in memory the estimated 3D centroids of previously detected objects.
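A minimal sketch of an object memory like the one described above, assuming a new detection is merged into an existing entry of the same category when its estimated centroid falls within a fixed radius (0.5 m here, an arbitrary choice), and that merged centroids are kept as a running mean. `ObjectMemory` and its methods are hypothetical names, and the obstacle map is omitted.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedObject:
    category: str
    centroid: list  # running-mean estimate [x, y, z] in meters
    count: int = 1  # number of detections merged into this entry


class ObjectMemory:
    """Hypothetical store of 3D centroids for previously detected objects."""

    def __init__(self, merge_radius=0.5):
        self.merge_radius = merge_radius
        self.objects = []

    def add_detection(self, category, centroid):
        # Find the closest stored object of the same category.
        best, best_dist = None, float("inf")
        for obj in self.objects:
            if obj.category != category:
                continue
            d = math.dist(obj.centroid, centroid)
            if d < best_dist:
                best, best_dist = obj, d
        if best is not None and best_dist <= self.merge_radius:
            # Same physical object seen again: update the running mean.
            best.count += 1
            best.centroid = [c + (n - c) / best.count for c, n in zip(best.centroid, centroid)]
            return best
        new = TrackedObject(category, list(centroid))
        self.objects.append(new)
        return new

    def find(self, category):
        return [o for o in self.objects if o.category == category]


mem = ObjectMemory()
mem.add_detection("RemoteControl", (1.0, 0.0, 2.0))
mem.add_detection("RemoteControl", (1.2, 0.0, 2.0))  # merged: centroid x becomes about 1.1
mem.add_detection("RemoteControl", (5.0, 0.0, 5.0))  # too far away: stored as a new entry
```

A fixed merge radius is the simplest association rule; a real system with noisy depth would likely need something more robust, such as also comparing appearance features.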

The out of place detector uses visual and relational language features to determine whether an object is in or out of place in the context of the scene. The visual features for each object are obtained from an off-the-shelf object detector, and the relational language features are obtained by feeding predicted 3D relations of the objects (e.g. next to, supported by, above, etc.) to a pretrained language model. We combine the visual and language features to classify whether each detected object is in or out of place. We find that combining the visual and relational modalities works better for out of place classification than using either modality alone.
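To make the relational language features more concrete, here is a sketch that turns axis-aligned 3D boxes into relation sentences and then fuses two feature vectors with a single logistic unit. Everything here is an assumption for illustration: the box-based relation rules, the tolerances, and the helper names (`relations`, `out_of_place_prob`) are hypothetical. In TIDEE the sentences would be embedded by a pretrained language model and the classifier would be learned; this sketch takes the feature vectors and weights as given.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    """Axis-aligned 3D box; y is assumed to be the vertical axis."""
    name: str
    min_xyz: tuple
    max_xyz: tuple


def _overlap(a0, a1, b0, b1):
    """Overlap length of intervals [a0, a1] and [b0, b1] (negative if disjoint)."""
    return min(a1, b1) - max(a0, b0)


def _footprint_center(box):
    """Center of the box projected onto the horizontal (x, z) plane."""
    return ((box.min_xyz[0] + box.max_xyz[0]) / 2, (box.min_xyz[2] + box.max_xyz[2]) / 2)


def relations(obj, other, contact_tol=0.03, near_dist=0.5):
    """Hypothetical rule-based relations of `obj` with respect to `other`, as sentences."""
    horiz_overlap = (
        _overlap(obj.min_xyz[0], obj.max_xyz[0], other.min_xyz[0], other.max_xyz[0]) > 0
        and _overlap(obj.min_xyz[2], obj.max_xyz[2], other.min_xyz[2], other.max_xyz[2]) > 0
    )
    gap = obj.min_xyz[1] - other.max_xyz[1]  # bottom of obj minus top of other
    rels = []
    if horiz_overlap and abs(gap) <= contact_tol:
        rels.append("supported by")
    elif horiz_overlap and gap > contact_tol:
        rels.append("above")
    if not horiz_overlap and math.dist(_footprint_center(obj), _footprint_center(other)) <= near_dist:
        rels.append("next to")
    return [f"The {obj.name} is {r} the {other.name}." for r in rels]


def out_of_place_prob(visual_feat, language_feat, weights, bias):
    """Fuse features by concatenation and apply one logistic unit (an assumed design)."""
    fused = list(visual_feat) + list(language_feat)
    if len(weights) != len(fused):
        raise ValueError("weights must match the fused feature length")
    z = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-z))


floor = Box3D("floor", (-5.0, -0.1, -5.0), (5.0, 0.0, 5.0))
remote = Box3D("remote", (1.0, 0.0, 1.0), (1.2, 0.05, 1.1))
sofa = Box3D("sofa", (1.3, 0.0, 1.0), (2.3, 0.8, 1.8))
sentences = relations(remote, floor) + relations(remote, sofa, near_dist=1.0)
```

Concatenation followed by a linear classifier is only one possible fusion scheme; it is used here because it is the simplest way to show both modalities feeding a single decision.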

To infer where to place an object once it has been picked up, TIDEE includes a neural graph module that is trained to predict plausible placement proposals for objects. The module works by passing information between the object to be placed, a memory graph encoding plausible contextual relations from training scenes, and a scene graph encoding the object-relation configuration of the current scene. For our memory graph, we take inspiration from "Beyond Categories: The Visual Memex Model for Reasoning About Object Relationships" by Tomasz Malisiewicz and Alexei A. Efros (2009), which models instance-level object features and their relations to provide more complete appearance-based context. Our memory graph consists of the tidy object instances in the training set, providing fine-grained contextualization of tidy object placements. We show in the paper that this fine-grained visual and relational information is critical for TIDEE to place objects in human-preferred locations.
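The sketch below illustrates the general idea of passing information between the held object and memory nodes before scoring receptacles in the current scene. It is a hand-written mean-aggregation update over fixed toy features, not TIDEE's trained graph network: `message_pass`, `score_receptacles`, the mixing weight `alpha`, and the 2-D features are all hypothetical, and scene-graph edges among receptacles are omitted for brevity.

```python
import math


def _mean(vectors):
    """Element-wise mean of equal-length vectors."""
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]


def message_pass(node_feats, edges, rounds=1, alpha=0.5):
    """Synchronous mean aggregation over an undirected graph (toy, no learned weights)."""
    neighbors = {n: [] for n in node_feats}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)
    h = {n: list(f) for n, f in node_feats.items()}
    for _ in range(rounds):
        new_h = {}
        for n, f in h.items():
            if neighbors[n]:
                msg = _mean([h[j] for j in neighbors[n]])
                # Mix each node's own state with the average of its neighbors' states.
                new_h[n] = [(1 - alpha) * x + alpha * m for x, m in zip(f, msg)]
            else:
                new_h[n] = f
        h = new_h
    return h


def score_receptacles(h, obj, receptacles):
    """Softmax over dot products between the object embedding and each receptacle."""
    logits = {r: sum(a * b for a, b in zip(h[obj], h[r])) for r in receptacles}
    top = max(logits.values())
    exps = {r: math.exp(logit - top) for r, logit in logits.items()}
    total = sum(exps.values())
    return {r: e / total for r, e in exps.items()}


# Toy joint graph: the held remote links to two tidy memory instances of remotes;
# "table" and "floor" are receptacles detected in the current scene.
feats = {
    "remote": [1.0, 0.0],
    "mem_remote_1": [0.0, 1.0],
    "mem_remote_2": [0.0, 1.0],
    "table": [0.0, 1.0],
    "floor": [1.0, -1.0],
}
edges = [("remote", "mem_remote_1"), ("remote", "mem_remote_2")]
with_memory = score_receptacles(message_pass(feats, edges, rounds=1), "remote", ["table", "floor"])
without_memory = score_receptacles(message_pass(feats, edges, rounds=0), "remote", ["table", "floor"])
```

In this toy setup the remote's own features favor the floor, but after one round of aggregation over the tidy memory instances, the table receives the higher score, which is the qualitative effect the memory graph is meant to provide.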

To search for objects that have not yet been found, TIDEE uses a visual search network that takes as input the semantic obstacle map and a search category, and predicts the likelihood of the object being present at each spatial location in the obstacle map. The agent then searches these likely locations for the object of interest.
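One way an agent could consume such a likelihood map is to renormalize it over the cells it has not yet searched and visit the most likely cells first. This is a hypothetical sketch: `next_search_cells` and the grid values are illustrative, it assumes that searching a cell rules it out completely, and the map itself would come from the visual search network, which is not reproduced here.

```python
def next_search_cells(prob_map, searched, k=3):
    """Rank unsearched grid cells by renormalized probability of containing the target."""
    cells = [
        (r, c)
        for r, row in enumerate(prob_map)
        for c in range(len(row))
        if (r, c) not in searched
    ]
    if not cells:
        return []
    total = sum(prob_map[r][c] for r, c in cells)
    if total <= 0:
        # No signal left: fall back to a uniform distribution over remaining cells.
        probs = {cell: 1.0 / len(cells) for cell in cells}
    else:
        # Condition on the target not being in any cell already searched.
        probs = {(r, c): prob_map[r][c] / total for r, c in cells}
    return sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]


# Illustrative 3x3 likelihood map; the center cell has already been searched.
prob_map = [
    [0.01, 0.02, 0.03],
    [0.10, 0.40, 0.14],
    [0.06, 0.15, 0.09],
]
ranked = next_search_cells(prob_map, searched={(1, 1)})
```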

Combining all of the above modules gives us a system that can detect out of place objects, infer where they should go, search intelligently, and navigate and manipulate effectively. In the next section, we'll show you how well our agent performs at tidying up rooms.

How good is TIDEE at tidying up?

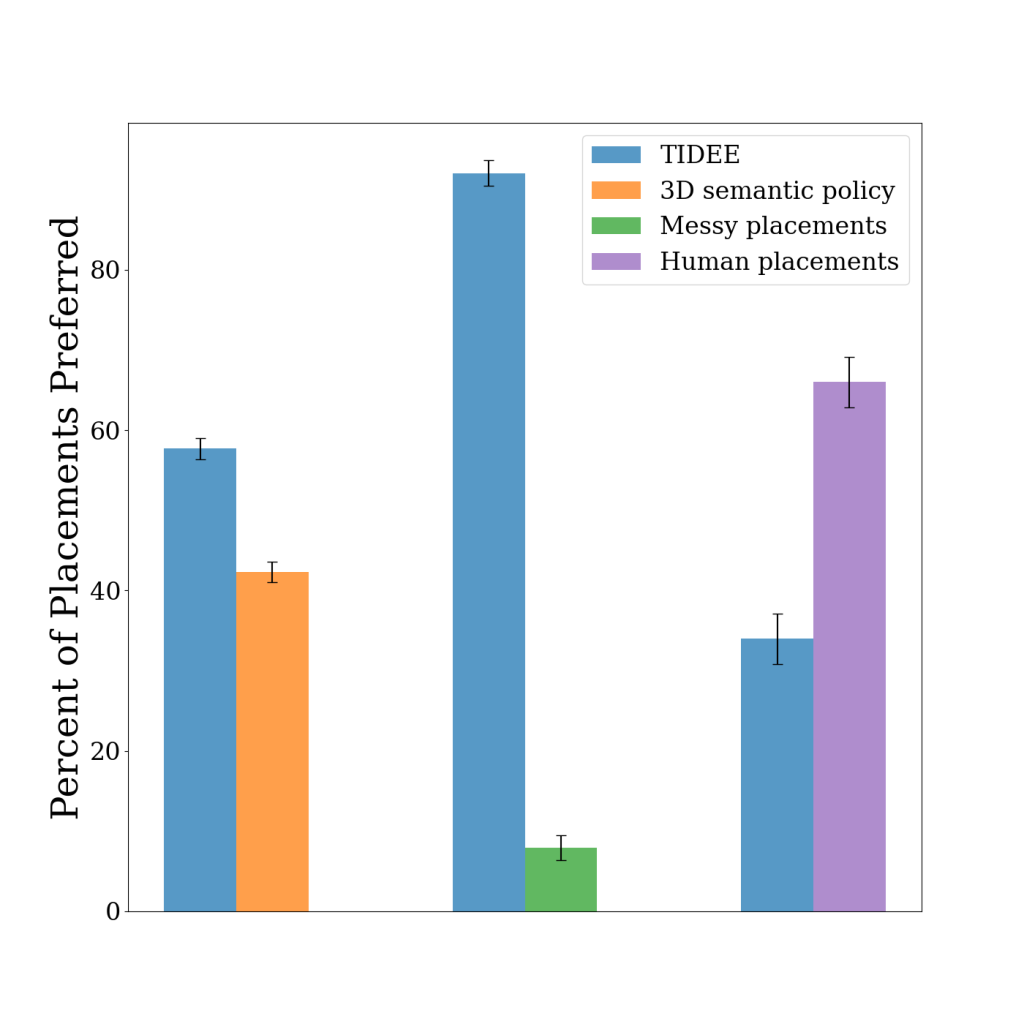

Using a set of messy test scenes that TIDEE has never seen before, we task our agent with returning each messy room to a tidy state. Since a single object may be tidy in several locations in a scene, we evaluate our method by asking humans whether they prefer TIDEE's placements over baseline placements that do not use one or more of TIDEE's commonsense priors. Below we show that TIDEE's placements are strongly preferred over the baseline placements, and are even competitive with human placements (last row).

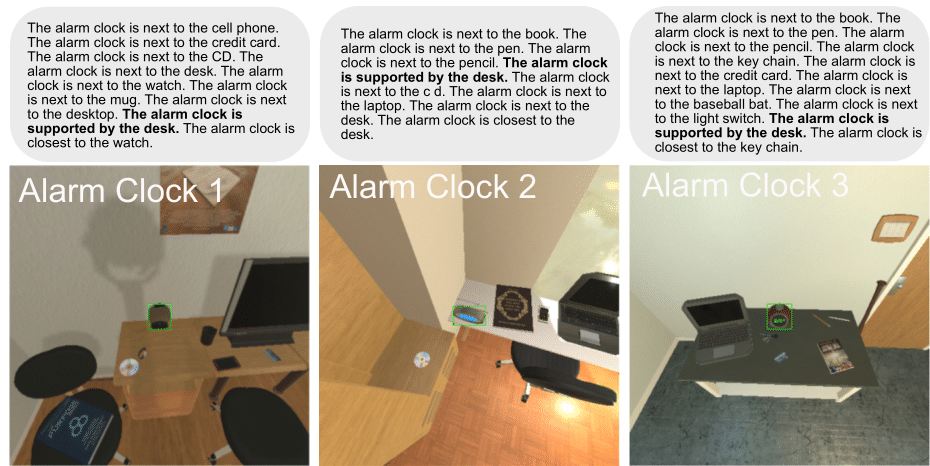

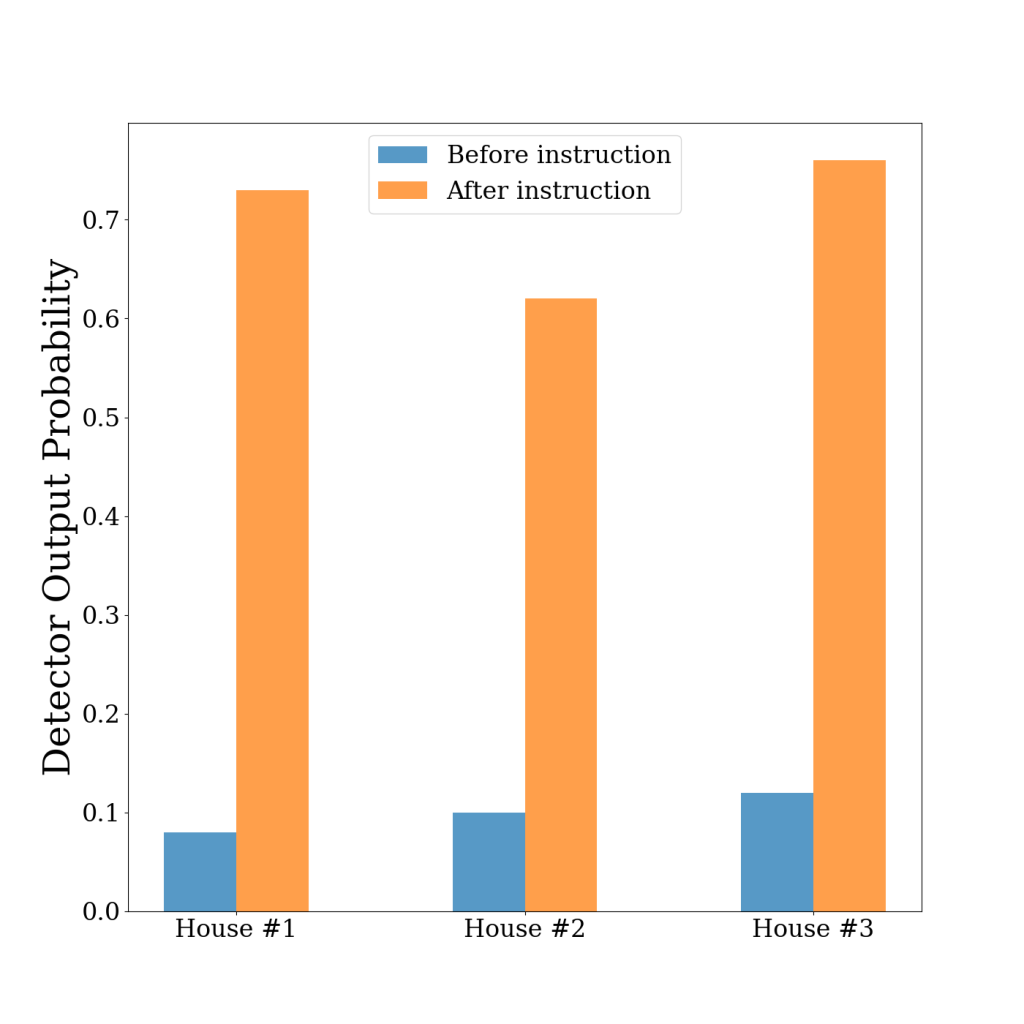

We additionally show that TIDEE's placements can be customized based on user preferences. For example, given user input such as "I never want my alarm on the desk", we can use online learning techniques to change the model's output so that an alarm clock on the desk is classified as out of place (and should be moved). Below we show some examples of locations and relations of alarm clocks that were predicted to be in the correct locations (and not out of place) after our initial training. After the user-specified finetuning, however, our network predicts that the alarm clock on the desk is out of place and should be repositioned.
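The alarm-clock example can be sketched as a few online gradient steps on a logistic out-of-place classifier. This is an illustration under stated assumptions rather than TIDEE's finetuning procedure: the three binary features, the initial weights, the learning rate, and the helper names are hypothetical. A nightstand example labeled "in place" is included so that the update does not simply flag every alarm clock as out of place.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability that the object described by features x is out of place."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def finetune(w, b, examples, lr=0.5, epochs=20):
    """Online SGD on binary cross-entropy; label 1 means 'out of place'."""
    w = list(w)
    for _ in range(epochs):
        for x, y in examples:
            g = predict(w, b, x) - y  # gradient of the loss with respect to the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


# Hypothetical features: [is_alarm_clock, on_desk, on_nightstand]
alarm_on_desk = [1.0, 1.0, 0.0]
alarm_on_nightstand = [1.0, 0.0, 1.0]
w0, b0 = [-1.0, 0.0, 0.0], 0.0  # initial model: alarm clocks look in place everywhere
user_feedback = [(alarm_on_desk, 1), (alarm_on_nightstand, 0)]  # "never on the desk"
w1, b1 = finetune(w0, b0, user_feedback)
```

Before finetuning, both placements score below 0.5; afterwards the desk placement is flagged while the nightstand placement is not.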

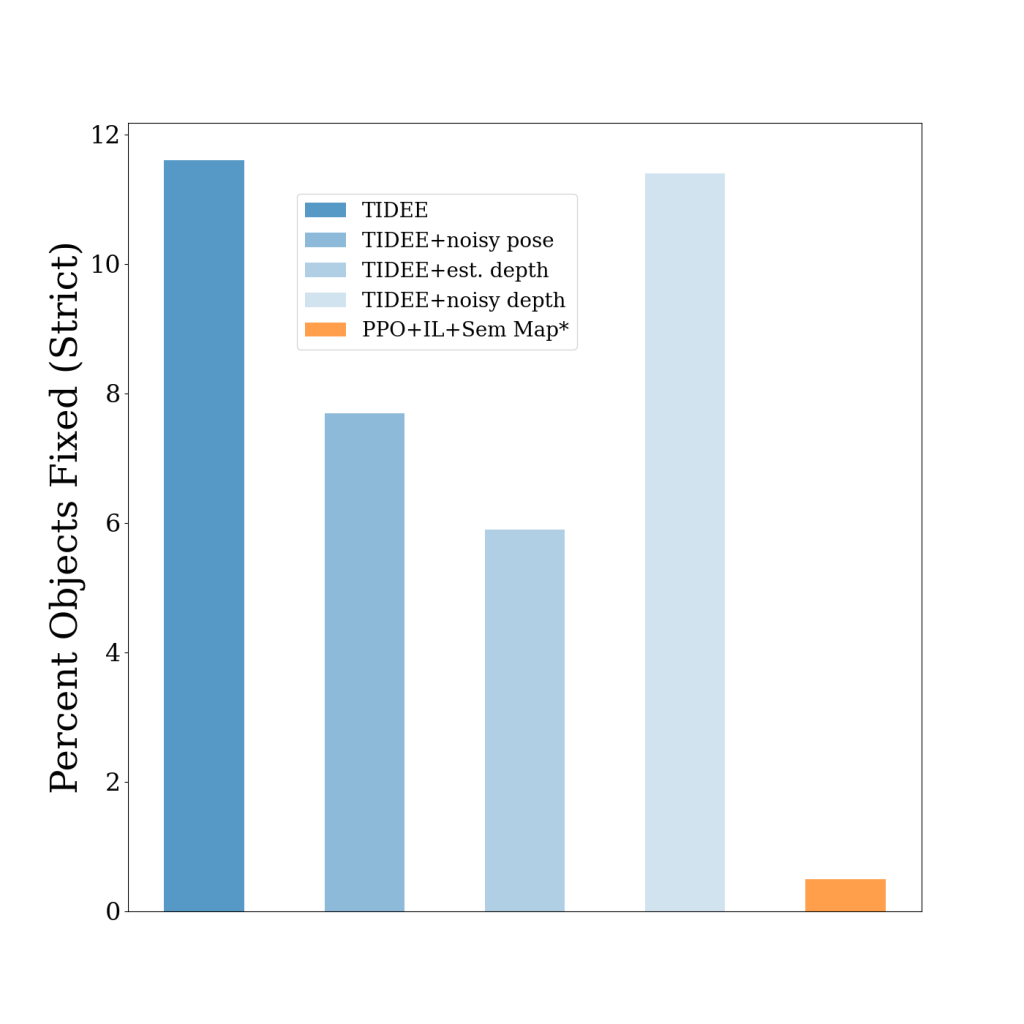

We also show that a simplified version of TIDEE generalizes to the task of rearrangement, where the agent sees the original state of the objects, then some of the objects are moved to new locations, and the agent must return the objects to their original state. We outperform the previous state-of-the-art model, which uses semantic mapping and reinforcement learning, even with noisy sensor measurements.
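For the rearrangement setting, the core comparison between the original and the shuffled state can be sketched as below. It assumes object identities are known and positions are given as 3D coordinates in meters, which sidesteps the noisy perception and object matching the real task involves; `find_moved_objects` and the 0.1 m tolerance are hypothetical.

```python
import math


def find_moved_objects(original, current, pos_tol=0.1):
    """Return objects whose current position differs from the original by more than pos_tol meters."""
    moved = {}
    for obj_id, orig_pos in original.items():
        cur_pos = current.get(obj_id)
        if cur_pos is None:
            continue  # object not observed in the shuffled scene
        if math.dist(orig_pos, cur_pos) > pos_tol:
            moved[obj_id] = {"from": cur_pos, "to": orig_pos}
    return moved


original = {"Mug": (0.0, 0.9, 0.0), "Book": (1.0, 0.9, 1.0)}
current = {"Mug": (0.02, 0.9, 0.0), "Book": (2.0, 0.0, 1.0)}
to_restore = find_moved_objects(original, current)  # only the Book moved beyond tolerance
```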

Summary

In this article, we discussed TIDEE, an embodied agent that uses commonsense reasoning to tidy up novel messy scenes. We introduce a new benchmark that tests agents on their ability to clean up messy scenes without any human instruction. To check out our paper, code, and more, please visit our website at https://tidee-agent.github.io/.

Also, feel free to shoot me an email at [email protected]! I would love to chat!
