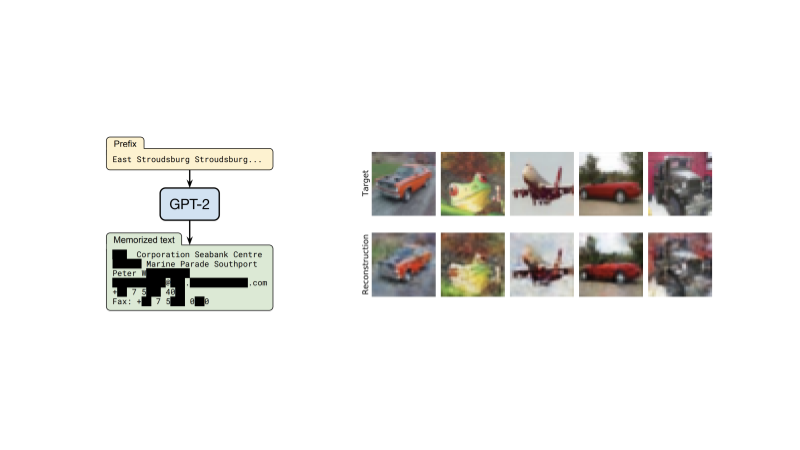

A recent DeepMind paper on the ethical and social risks of language models identified large language models leaking sensitive information about their training data as a potential risk that organisations working on these models have the responsibility to address. Another recent paper shows that similar privacy risks can also arise in standard image classification models: a fingerprint of each individual training image can be found embedded in the model parameters, and malicious parties could exploit such fingerprints to reconstruct the training data from the model.

Privacy-enhancing technologies like differential privacy (DP) can be deployed at training time to mitigate these risks, but they often incur a significant reduction in model performance. In this work, we make substantial progress towards unlocking high-accuracy training of image classification models under differential privacy.

Differential privacy was proposed as a mathematical framework to capture the requirement of protecting individual records in the course of statistical data analysis (including the training of machine learning models). DP algorithms protect individuals from any inferences about the features that make them unique (including complete or partial reconstruction) by injecting carefully calibrated noise during the computation of the desired statistic or model. Using DP algorithms provides robust and rigorous privacy guarantees both in theory and in practice, and has become a de facto gold standard adopted by a number of public and private organisations.
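For readers who want the guarantee stated formally, the block below gives the standard textbook definition of (ε, δ)-differential privacy. It is not spelled out in this post: the symbols M (the randomised training algorithm), D and D′ (two datasets differing in a single record), S (any set of possible outputs) and δ are introduced here for illustration, and the paper's exact notion of neighbouring datasets may differ.

```latex
\[
  \Pr\big[\,M(D) \in S\,\big] \;\le\; e^{\varepsilon}\,\Pr\big[\,M(D') \in S\,\big] + \delta
  \qquad \text{for all neighbouring } D, D' \text{ and all output sets } S .
\]
```

Smaller values of ε (and δ) mean the output distribution changes less when any one record is added or removed, which corresponds to stronger protection.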

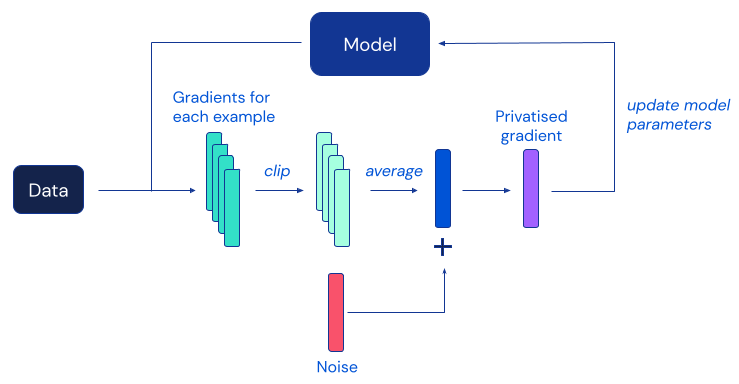

The most popular DP algorithm for deep learning is differentially private stochastic gradient descent (DP-SGD), a modification of standard SGD obtained by clipping the gradients of individual examples and adding enough noise to mask the contribution of any individual to each model update.
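The clip-then-add-noise update described above can be sketched in a few lines of JAX. This is a minimal illustration under stated assumptions, not the released implementation: the linear model, the squared-error loss, and the names `loss_fn`, `clip_by_global_norm`, `dp_sgd_step`, `clip_norm` and `noise_multiplier` are all hypothetical, and the sketch performs no privacy accounting (it does not compute the ε that a given noise level yields).

```python
import jax
import jax.numpy as jnp


def loss_fn(params, x, y):
    # Hypothetical model: linear regression with squared error on ONE example.
    pred = jnp.dot(x, params["w"]) + params["b"]
    return 0.5 * (pred - y) ** 2


def clip_by_global_norm(grads, clip_norm):
    # Rescale one example's gradient so its global L2 norm is at most clip_norm.
    leaves = jax.tree_util.tree_leaves(grads)
    norm = jnp.sqrt(sum(jnp.sum(g ** 2) for g in leaves))
    scale = jnp.minimum(1.0, clip_norm / (norm + 1e-12))
    return jax.tree_util.tree_map(lambda g: g * scale, grads)


def dp_sgd_step(params, xs, ys, key, lr=0.1, clip_norm=1.0, noise_multiplier=1.0):
    batch_size = xs.shape[0]
    # 1. Per-example gradients: vmap the single-example gradient over the batch.
    per_example = jax.vmap(jax.grad(loss_fn), in_axes=(None, 0, 0))(params, xs, ys)
    # 2. Clip each example's gradient independently.
    clipped = jax.vmap(clip_by_global_norm, in_axes=(0, None))(per_example, clip_norm)
    # 3. Sum the clipped gradients over the batch.
    summed = jax.tree_util.tree_map(lambda g: jnp.sum(g, axis=0), clipped)
    # 4. Add Gaussian noise with standard deviation noise_multiplier * clip_norm.
    leaves, treedef = jax.tree_util.tree_flatten(summed)
    keys = jax.random.split(key, len(leaves))
    noisy_leaves = [
        g + noise_multiplier * clip_norm * jax.random.normal(k, g.shape)
        for g, k in zip(leaves, keys)
    ]
    noisy = jax.tree_util.tree_unflatten(treedef, noisy_leaves)
    # 5. Average over the batch and take a plain SGD step.
    return jax.tree_util.tree_map(lambda p, g: p - lr * g / batch_size, params, noisy)


# Usage on a toy batch of three examples with two features each.
params = {"w": jnp.array([1.0, -2.0]), "b": jnp.array(0.5)}
xs = jnp.array([[1.0, 2.0], [3.0, 4.0], [0.0, 1.0]])
ys = jnp.array([1.0, 0.0, 2.0])
new_params = dp_sgd_step(params, xs, ys, jax.random.PRNGKey(0))
```

Clipping bounds how much any single example can move the summed gradient, which is what lets a fixed amount of Gaussian noise mask each individual's contribution. Setting `noise_multiplier=0.0` with a very large `clip_norm` recovers ordinary mini-batch SGD.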

Unfortunately, prior works have found that in practice, the privacy protection provided by DP-SGD often comes at the cost of significantly less accurate models, which presents a major obstacle to the widespread adoption of differential privacy in the machine learning community. According to empirical evidence from prior works, this utility degradation in DP-SGD becomes more severe on larger neural network models, including the ones regularly used to achieve the best performance on challenging image classification benchmarks.

Our work investigates this phenomenon and proposes a series of simple modifications to both the training procedure and model architecture, yielding a significant improvement in the accuracy of DP training on standard image classification benchmarks. The most striking observation coming out of our research is that DP-SGD can be used to efficiently train much deeper models than previously thought, as long as one ensures the model's gradients are well-behaved. We believe the substantial jump in performance achieved by our research has the potential to unlock practical applications of image classification models trained with formal privacy guarantees.

The figure below summarises two of our main results: an ~10% improvement on CIFAR-10 compared to previous work when privately training without additional data, and a top-1 accuracy of 86.7% on ImageNet when privately fine-tuning a model pre-trained on a different dataset, almost closing the gap with the best non-private performance.

These results are achieved at 𝜺=8, a standard setting for calibrating the strength of the protection offered by differential privacy in machine learning applications. We refer to the paper for a discussion of this parameter, as well as additional experimental results at other values of 𝜺 and on other datasets. Together with the paper, we are also open-sourcing our implementation to enable other researchers to verify our findings and build on them. We hope this contribution will help others interested in making practical DP training a reality.

Download our JAX implementation on GitHub.
